|

|

| (62 intermediate revisions by 2 users not shown) |

| Line 1: |

Line 1: |

| | This article describes OSE's use of the Discourse software. |

| | |

| | For a detailed guide to updating our Discourse server, see [[Discourse/Updating]]. |

| | |

| | For a detailed guide on how we installed Discourse in 2020 on our CentOS 7 server, see [[Discourse/Install]]. |

| | |

| | =Official Discourse Documentation= |

| | |

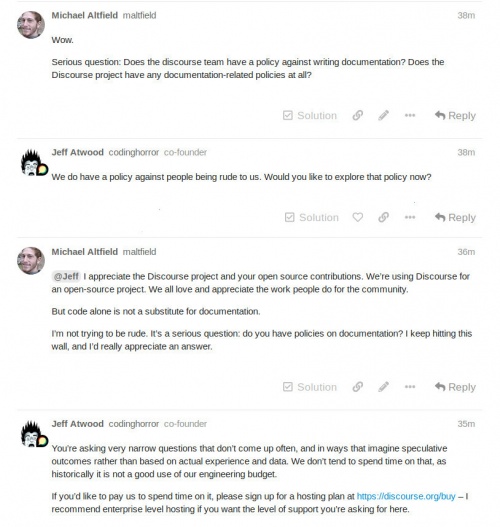

| | Discourse doesn't have any official documentation outside of their [https://docs.discourse.org/ API documentation]. |

| | |

| | It appears that this is intentional to make Discourse admins' lives difficult as a way to increase revenue. See [[#Strategic Open Source]] for more info. |

| | |

| =TODO= | | =TODO= |

|

| |

|

| Line 300: |

Line 312: |

| </pre> | | </pre> |

|

| |

|

| =2019-11 Install Guide= | | =Installing Themes and Components= |

| | |

| In 2019-11, I ([[User:Maltfield|Michael Altfield]]) tested an install of Discourse on the [[OSE Staging Server]]. I documented the install steps here so they could be exactly reproduced on production.

| |

| | |

| ==Install Prereqs==

| |

| | |

| First we have to install docker. The version in the yum repos (1.13.1) was too old to be supported by Discourse (which states it requires a minimum of 17.03.1).

| |

| | |

| Note that the install procedure recommended by Docker and Discourse for Docker is a curl piped to shell. This should never, ever, ever be done. The safe procedure is to manually add the gpg key and repo to the server, as the get.docker.org script would do (assuming the script were not modified in transit). Note that Docker does *not* cryptographically sign their install script in any way, and it therefore cannot be safely validated.

| |

| | |

| The gpg key itself is available at the following URL from docker.com

| |

| | |

| * https://download.docker.com/linux/centos/gpg

| |

| | |

| Please do your due diligence to validate that this gpg key is the official key and was not manipulated in-transit by Mallory. Unfortunately, at the time of writing, this is non-trivial since there are no signatures on the key, the key is not uploaded to any public keyservers, the docker team doesn't have a keybase account, etc. On 2019-11-12, I submitted a feature request to the docker team's 'for-linux' repo asking them to at least upload this gpg key to the keys.openpgp.org keyserver. <ref>https://github.com/docker/for-linux/issues/849</ref>
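One way to carry out this check is to compare the fingerprint of the downloaded key against a fingerprint obtained through a second, independent channel. The following is only a sketch: <code>fingerprints_match</code> is a hypothetical helper (not part of docker or gpg), and the expected fingerprint is something you have to source yourself. On the host, the fingerprint of the downloaded file can be displayed with <code>gpg --with-fingerprint docker.gpg</code>.

```shell
# Hypothetical helper: compare two OpenPGP fingerprints, ignoring
# whitespace and letter case. Succeeds only if both are non-empty and equal.
fingerprints_match() {
  local a b
  a="$(printf '%s' "$1" | tr -d '[:space:]' | tr '[:lower:]' '[:upper:]')"
  b="$(printf '%s' "$2" | tr -d '[:space:]' | tr '[:lower:]' '[:upper:]')"
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example use (both values are placeholders that you must fill in yourself):
#   observed='<fingerprint printed by gpg for docker.gpg>'
#   expected='<fingerprint obtained through a second channel>'
#   fingerprints_match "${observed}" "${expected}" || echo "fingerprint mismatch; do not install this key"
```

The helper refuses to match two empty strings, so a failed lookup on either side is treated as a mismatch rather than a pass.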

| |

| | |

| After installing the docker gpg file to '<code>/etc/pki/rpm-gpg/docker.gpg</code>', execute the following commands as root to install docker:

| |

| | |

| <pre>

| |

| # first, install the (ASCII-armored) docker gpg key to /etc/pki/rpm-gpg/docker.gpg

| |

| cp docker.gpg /etc/pki/rpm-gpg/docker.gpg

| |

| chown root:root /etc/pki/rpm-gpg/docker.gpg

| |

| chmod 0644 /etc/pki/rpm-gpg/docker.gpg

| |

| | |

| # and install the repo

| |

| cat > /etc/yum.repos.d/docker-ce.repo <<'EOF'

| |

| [docker-ce-stable]

| |

| name=Docker CE Stable - $basearch

| |

| baseurl=https://download.docker.com/linux/centos/7/$basearch/stable

| |

| enabled=1

| |

| gpgcheck=1

| |

| gpgkey=file:///etc/pki/rpm-gpg/docker.gpg

| |

| EOF

| |

|

| |

|

| # finally, install docker from the repos

| | By design, our [[Web_server_configuration|web servers]] can only <em>respond</em> to requests; they cannot initiate requests. And [[Discourse/Install#iptables|Discourse is no different]]. |

| yum install docker-ce

| |

| </pre> | |

|

| |

|

| Now execute the following commands as root to make docker start on system boot & start it up.

| | This means that the usual route of installing themes and components in Discourse via the WUI (<code>Admin -> Customize -> Themes -> Install -> Popular -> Install</code>) won't work. |

|

| |

|

| <pre> | | <pre> |

| systemctl enable docker.service

| | Error cloning git repository, access is denied or repository is not found |

| systemctl start docker.service

| |

| </pre> | | </pre> |
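Since Discourse states a minimum docker version of 17.03.1, it can be worth confirming what was actually installed. The following is a minimal sketch, assuming GNU coreutils (for <code>sort -V</code>); <code>version_ge</code> is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if version $1 is greater than or equal to version $2.
# Relies on the version sort (-V) provided by GNU coreutils' sort.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Example use on the docker host (not run here):
#   installed="$(docker version --format '{{.Server.Version}}')"
#   version_ge "${installed}" "17.03.1" || echo "docker ${installed} is too old for Discourse"
```

With this helper, the 1.13.1 package from the yum repos fails the check, while any docker-ce release at or above 17.03.1 passes.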

|

| |

|

| ==Install Discourse== | | ==Step #1: Find repo== |

|

| |

|

| In this step we will configure & install the Discourse docker container and all its components

| | To install a theme or theme component in our Discourse, first find its git repo. You can find many Discourse theme repos by listing all repos tagged with the topic '<code>discourse-theme</code>' or '<code>discourse-theme-component</code>' in the [https://github.com/discourse Discourse project on github]. |

|

| |

|

| ===Clone Repo=== | | * https://github.com/search?q=topic%3Adiscourse-theme+org%3Adiscourse+fork%3Atrue |

| | * https://github.com/search?q=topic%3Adiscourse-theme-component+org%3Adiscourse+fork%3Atrue |

|

| |

|

| From the [https://github.com/discourse/discourse/blob/master/docs/INSTALL-cloud.md Discourse Install Guide], check out the github repo as root to /var/discourse. You'll want to validate that this wasn't modified in transit; there are no cryptographic signatures to validate authenticity of the repo's contents here. A huge failing on Discourse's part (but, again, Discourse's sec is rotten from its foundation in Docker; see above).

| | ==Step #2: Download zip== |

|

| |

|

| Execute the following as root to populate the '<code>/var/discourse/</code>' directory:

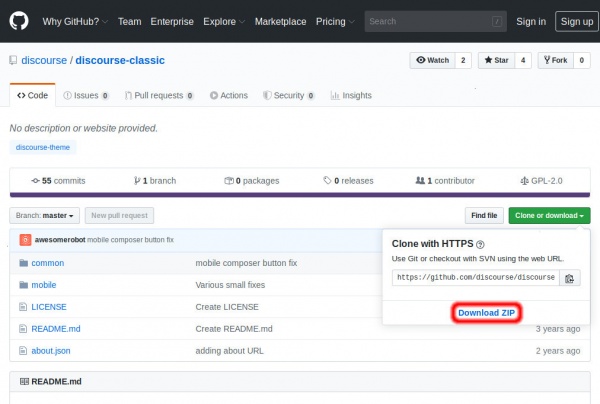

| | [[File:202005_discourseInstallTheme1.jpg|left|600px]] |

|

| |

|

| <pre>

| | For example, here's a link to the github repo for the Discourse "Classic Theme" |

| sudo -s

| |

| git clone https://github.com/discourse/discourse_docker.git /var/discourse

| |

| cd /var/discourse

| |

| </pre>

| |

|

| |

|

| ===Create container yaml===

| | * https://github.com/discourse/discourse-classic |

|

| |

|

| Now, the next step of the official Discourse '<code>INSTALL-cloud</code>' guide is to use the `<code>./discourse-setup</code>` script, but (at the time of writing) that script doesn't support building a config for servers that run an MTA on the server without auth. That describes most linux servers on the net, whose MTA isn't accessible over the internet, only handles traffic over 127.0.0.1, and doesn't require auth. Our server is set up this way.

| | From the theme's github repo page, download a zip of the repo by clicking <code>"Clone or download" -> "Download ZIP"</code> |

|

| |

|

| But we need to run this `<code>./discourse-setup</code>` script once just to generate a template '<code>app.yml</code>' config file before proceeding. When it asks you for a hostname, enter '<code>discourse.example.com</code>', which will cause it to fail but generate the '<code>containers/app.yml</code>' file that we need so we can proceed with manual install.

| | <br style="clear:both;" /> |

| | ==Step #3: Upload zip== |

|

| |

|

| Execute the commands shown in the example below as root, answering the prompts exactly as shown:

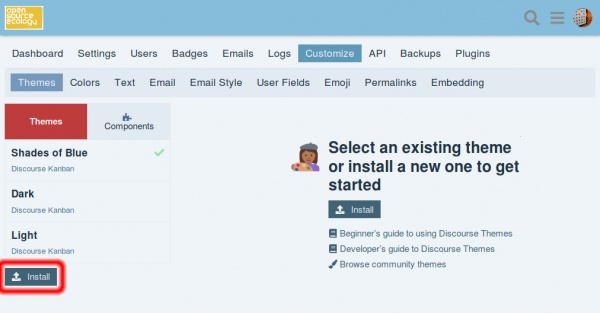

| | [[File:202005_discourseInstallTheme2.jpg|right|600px]] |

|

| |

|

| <pre> | | Now, login to our Discourse site and navigate to <code>Admin -> Customize -> Themes</code> |

| [root@osestaging1 discourse]# ls containers/

| |

| [root@osestaging1 discourse]#

| |

| [root@osestaging1 discourse]# ./discourse-setup

| |

| which: no docker.io in (/sbin:/bin:/usr/sbin:/usr/bin)

| |

| which: no docker.io in (/sbin:/bin:/usr/sbin:/usr/bin)

| |

| ./discourse-setup: line 275: netstat: command not found

| |

| ./discourse-setup: line 275: netstat: command not found

| |

| Ports 80 and 443 are free for use

| |

| ‘samples/standalone.yml’ -> ‘containers/app.yml’

| |

| Found 1GB of memory and 1 physical CPU cores

| |

| setting db_shared_buffers = 128MB

| |

| setting UNICORN_WORKERS = 2

| |

| containers/app.yml memory parameters updated.

| |

|

| |

|

| Hostname for your Discourse? [discourse.example.com]: discourse.example.com

| | Click <code>Install</code> |

|

| |

|

| Checking your domain name . . .

| | <br style="clear:both;" /> |

| WARNING:: This server does not appear to be accessible at discourse.example.com:443.

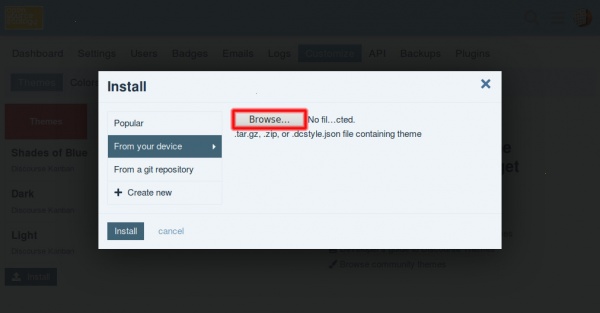

| | [[File:202005_discourseInstallTheme3.jpg|left|600px]] |

|

| |

|

| A connection to http://discourse.example.com (port 80) also fails.

| | In the JS modal "pop-up", choose <code>From your device</code>. |

|

| |

|

| This suggests that discourse.example.com resolves to the wrong IP address

| | Finally, click <code>Browse</code> and upload the <code>.zip</code> file downloaded above. |

| or that traffic is not being routed to your server.

| |

|

| |

|

| Google: "open ports YOUR CLOUD SERVICE" for information for resolving this problem.

| | <br style="clear:both;" /> |

|

| |

|

| If you want to proceed anyway, you will need to

| | =Looking Forward= |

| edit the containers/app.yml file manually.

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

|

| |

|

| Verify the change from the previous commands by confirming the existence of the '<code>containers/app.yml</code>' file.

| | This section will outline possible changes to be made to the Docker install/config in the future |

|

| |

|

| <pre>

| | ==Moving DBs outside docker== |

| [root@osestaging1 discourse]# ls containers/

| |

| app.yml

| |

| [root@osestaging1 discourse]#

| |

|

| |

|

| </pre>

| | It's worthwhile to consider moving the redis and postgresql components of Discourse outside of the docker container <ref>https://meta.discourse.org/t/performance-scaling-and-ha-requirements/60098/8</ref> |

| | |

| The default name of the Discourse docker container is '<code>app</code>'. Let's rename that to '<code>discourse_ose</code>'.

| |

| | |

| Execute the following command as root to update the container's yaml file name:

| |

| | |

| <pre>

| |

| mv containers/app.yml containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the previous command by confirming that the file is now named '<code>discourse_ose.yml</code>'

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# ls containers/

| |

| discourse_ose.yml

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

| | |

| ===SMTP===

| |

| | |

| The Discourse install script doesn't support the very simple config of an smtp server running on localhost:25 without auth. That is to say, Discourse doesn't support the default postfix config for RHEL/CentOS and most web servers on the net.

| |

| | |

| We have to manually edit the '<code>/var/discourse/containers/discourse_ose.yml</code>' file.

| |

| | |

| Note that 'localhost' resolves to the IP Address of the container created by docker when referenced from within the context of Discourse, but our smtp server is running on the host server. Therefore, we cannot use 'localhost' for the DISCOURSE_SMTP_ADDRESS. Instead, we use the IP Address of the host server's docker0 interface. In this case, it's 172.17.0.1, and that can be verified via the output of `ip address show dev docker0` run on the host where docker is installed (in this case, osestaging1).
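The address of the docker0 interface can also be extracted in a script instead of being read off by eye. The following is a minimal sketch; <code>first_ipv4</code> is a hypothetical helper that parses the one-line output format of <code>ip -o -4 address show</code>.

```shell
# Hypothetical helper: read the one-line-per-address output of
# `ip -o -4 address show dev <iface>` on stdin and print the first
# IPv4 address without its /prefix length.
first_ipv4() {
  awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Example use on the docker host (not run here):
#   ip -o -4 address show dev docker0 | first_ipv4
```

If the printed address differs from 172.17.0.1 on your host, substitute it in the commands that follow.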

| |

| | |

| First, let's comment-out any existing SMTP-related environment variables defined in the yml file.

| |

| | |

| Execute the following command as root to comment-out the SMTP-related lines in the yaml file:

| |

| | |

| <pre>

| |

| sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^\([^#]*\)\(DISCOURSE_SMTP.*\)$%\1#\2%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Now execute the following commands as root to add our SMTP settings to the '<code>env:</code>' section:

| |

| | |

| <pre>

| |

| grep 'DISCOURSE_SMTP_ADDRESS: 172.17.0.1' /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^env:$%env:\n DISCOURSE_SMTP_ADDRESS: 172.17.0.1 # this is the IP Address of the host server on the docker0 interface\n DISCOURSE_SMTP_PORT: 25\n DISCOURSE_SMTP_AUTHENTICATION: none\n DISCOURSE_SMTP_OPENSSL_VERIFY_MODE: none\n DISCOURSE_SMTP_ENABLE_START_TLS: false\n%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the previous two changes by confirming that your '<code>discourse_ose.yml</code>' config file now looks something like this. Note the addition of the '<code>DISCOURSE_SMTP_*</code>' lines immediately under the '<code>env:</code>' block and that the following '<code>DISCOURSE_SMTP_*</code>' lines are commented-out.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -C1 'DISCOURSE_SMTP' /var/discourse/containers/discourse_ose.yml

| |

| env:

| |

| DISCOURSE_SMTP_ADDRESS: 172.17.0.1 # this is the IP Address of the host server on the docker0 interface

| |

| DISCOURSE_SMTP_PORT: 25

| |

| DISCOURSE_SMTP_AUTHENTICATION: none

| |

| DISCOURSE_SMTP_OPENSSL_VERIFY_MODE: none

| |

| DISCOURSE_SMTP_ENABLE_START_TLS: false

| |

| | |

| --

| |

| # WARNING the char '#' in SMTP password can cause problems!

| |

| #DISCOURSE_SMTP_ADDRESS: smtp.example.com

| |

| #DISCOURSE_SMTP_PORT: 587

| |

| #DISCOURSE_SMTP_USER_NAME: user@example.com

| |

| #DISCOURSE_SMTP_PASSWORD: pa$$word

| |

| #DISCOURSE_SMTP_ENABLE_START_TLS: true # (optional, default true)

| |

| | |

| [root@osestaging1 discourse]#

| |

| </pre>
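The comment-out substitution used above can be rehearsed on a scratch copy of the yaml file before touching the real one. The following is a minimal sketch, assuming GNU sed; <code>comment_out_smtp</code> is a hypothetical wrapper around the same expression. Because the pattern only matches lines with no '<code>#</code>' before '<code>DISCOURSE_SMTP</code>', running it twice does not double-comment a line. It would, however, comment-out our own new settings if it were re-run after they were added, so the order of the two commands matters.

```shell
# Hypothetical wrapper: comment-out every not-yet-commented line that
# mentions DISCOURSE_SMTP in the file given as $1 (edits the file in place).
comment_out_smtp() {
  sed -i 's%^\([^#]*\)\(DISCOURSE_SMTP.*\)$%\1#\2%' "$1"
}

# Example use on a scratch copy (not run here):
#   cp /var/discourse/containers/discourse_ose.yml /var/tmp/discourse_ose.yml.test
#   comment_out_smtp /var/tmp/discourse_ose.yml.test
#   diff /var/discourse/containers/discourse_ose.yml /var/tmp/discourse_ose.yml.test
```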

| |

| | |

| Also note that you will need to update the postfix configuration (<code>/etc/postfix/main.cf</code>) to bind on the docker0 interface, change the '<code>mynetworks_style</code>' from '<code>host</code>' to nothing (comment it out), and add the docker0 subnet to the '<code>mynetworks</code>' list to authorize the Discourse docker client to send mail through the smtp server.

| |

| | |

| Execute the following commands as root to update the postfix config:

| |

| | |

| <pre>

| |

| grep 'inet_interfaces = localhost, 172.17.0.1' /etc/postfix/main.cf || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^\(inet_interfaces =.*\)$%#\1\ninet_interfaces = localhost, 172.17.0.1%' /etc/postfix/main.cf

| |

| grep 'mynetworks = 127.0.0.0/8, 172.17.0.0/16' /etc/postfix/main.cf || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^mynetworks_style = host$%#mynetworks_style = host\nmynetworks = 127.0.0.0/8, 172.17.0.0/16%' /etc/postfix/main.cf

| |

| </pre>

| |

| | |

| Verify the change above by confirming that your postfix '<code>main.cf</code>' file now looks something like this. Note that '<code>inet_interfaces</code>' is set to <em>both</em> '<code>localhost</code>' and '<code>172.17.0.1</code>', that the '<code>mynetworks_style</code>' lines are all commented-out, and that the '<code>mynetworks</code>' line now includes '<code>172.17.0.0/16</code>'.

| |

| | |

| <pre>

| |

| [root@osestaging1 conf.d]# grep -E 'mynetworks|interfaces' /etc/postfix/main.cf

| |

| # The inet_interfaces parameter specifies the network interface

| |

| # the software claims all active interfaces on the machine. The

| |

| # See also the proxy_interfaces parameter, for network addresses that

| |

| #inet_interfaces = all

| |

| #inet_interfaces = $myhostname

| |

| #inet_interfaces = $myhostname, localhost

| |

| #inet_interfaces = localhost

| |

| inet_interfaces = localhost, 172.17.0.1

| |

| # The proxy_interfaces parameter specifies the network interface

| |

| # the address list specified with the inet_interfaces parameter.

| |

| #proxy_interfaces =

| |

| #proxy_interfaces = 1.2.3.4

| |

| # receives mail on (see the inet_interfaces parameter).

| |

| # to $mydestination, $inet_interfaces or $proxy_interfaces.

| |

| # ${proxy,inet}_interfaces, while $local_recipient_maps is non-empty

| |

| # The mynetworks parameter specifies the list of "trusted" SMTP

| |

| # By default (mynetworks_style = subnet), Postfix "trusts" SMTP

| |

| # On Linux, this does works correctly only with interfaces specified

| |

| # Specify "mynetworks_style = class" when Postfix should "trust" SMTP

| |

| # mynetworks list by hand, as described below.

| |

| # Specify "mynetworks_style = host" when Postfix should "trust"

| |

| #mynetworks_style = class

| |

| #mynetworks_style = subnet

| |

| #mynetworks_style = host

| |

| mynetworks = 127.0.0.0/8, 172.17.0.0/16

| |

| # Alternatively, you can specify the mynetworks list by hand, in

| |

| # which case Postfix ignores the mynetworks_style setting.

| |

| #mynetworks = 168.100.189.0/28, 127.0.0.0/8

| |

| #mynetworks = $config_directory/mynetworks

| |

| #mynetworks = hash:/etc/postfix/network_table

| |

| # - from "trusted" clients (IP address matches $mynetworks) to any destination,

| |

| # - destinations that match $inet_interfaces or $proxy_interfaces,

| |

| # unknown@[$inet_interfaces] or unknown@[$proxy_interfaces] is returned

| |

| [root@osestaging1 conf.d]#

| |

| </pre>

| |

| | |

| Finally, we must update our iptables rules to permit connections to postfix from the Discourse docker container

| |

| | |

| Execute the following commands as root to update and persist the iptables rules to permit the docker container to send email through the docker host.

| |

| | |

| <pre>

| |

| tmpDir="/var/tmp/`date "+%Y%m%d_%H%M%S"`_change_discourse_iptables"

| |

| mkdir "${tmpDir}"

| |

| pushd "${tmpDir}"

| |

| iptables-save > iptables_a

| |

| cp iptables_a iptables_b

| |

| grep "INPUT -d 172.17.0.1/32 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT" iptables_b || sed -i 's%^\(.*-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT\)$%-A INPUT -d 172.17.0.1/32 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT\n\1%' iptables_b

| |

| iptables-restore < iptables_b

| |

| service iptables save

| |

| popd

| |

| </pre>

| |

| | |

| | |

| Verify the change above by confirming that your `<code>iptables-save</code>` output now looks something like this. Note the existence of the line '<code>-A INPUT -d 172.17.0.1/32 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT</code>'.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# iptables-save

| |

| # Generated by iptables-save v1.4.21 on Mon May 18 16:32:05 2020

| |

| *mangle

| |

| :PREROUTING ACCEPT [621:88454]

| |

| :INPUT ACCEPT [621:88454]

| |

| :FORWARD ACCEPT [0:0]

| |

| :OUTPUT ACCEPT [446:129173]

| |

| :POSTROUTING ACCEPT [446:129173]

| |

| COMMIT

| |

| # Completed on Mon May 18 16:32:05 2020

| |

| # Generated by iptables-save v1.4.21 on Mon May 18 16:32:05 2020

| |

| *nat

| |

| :PREROUTING ACCEPT [0:0]

| |

| :INPUT ACCEPT [0:0]

| |

| :OUTPUT ACCEPT [9:620]

| |

| :POSTROUTING ACCEPT [9:620]

| |

| COMMIT

| |

| # Completed on Mon May 18 16:32:05 2020

| |

| # Generated by iptables-save v1.4.21 on Mon May 18 16:32:05 2020

| |

| *filter

| |

| :INPUT ACCEPT [0:0]

| |

| :FORWARD ACCEPT [0:0]

| |

| :OUTPUT ACCEPT [5:380]

| |

| -A INPUT -s 5.9.144.234/32 -j DROP

| |

| -A INPUT -s 173.234.159.250/32 -j DROP

| |

| -A INPUT -i lo -j ACCEPT

| |

| -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| -A INPUT -p icmp -j ACCEPT

| |

| -A INPUT -d 172.17.0.1/32 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT

| |

| -A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

| |

| -A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

| |

| -A INPUT -p tcp -m state --state NEW -m tcp --dport 4443 -j ACCEPT

| |

| -A INPUT -p tcp -m state --state NEW -m tcp --dport 4444 -j ACCEPT

| |

| -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT

| |

| -A INPUT -m limit --limit 5/min -j LOG --log-prefix "iptables IN denied: " --log-level 7

| |

| -A INPUT -j DROP

| |

| -A FORWARD -s 5.9.144.234/32 -j DROP

| |

| -A FORWARD -s 173.234.159.250/32 -j DROP

| |

| -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| -A OUTPUT -s 127.0.0.1/32 -d 127.0.0.1/32 -j ACCEPT

| |

| -A OUTPUT -d 213.133.98.98/32 -p udp -m udp --dport 53 -j ACCEPT

| |

| -A OUTPUT -d 213.133.99.99/32 -p udp -m udp --dport 53 -j ACCEPT

| |

| -A OUTPUT -d 213.133.100.100/32 -p udp -m udp --dport 53 -j ACCEPT

| |

| -A OUTPUT -m limit --limit 5/min -j LOG --log-prefix "iptables OUT denied: " --log-level 7

| |

| -A OUTPUT -p tcp -m owner --uid-owner 48 -j DROP

| |

| -A OUTPUT -p tcp -m owner --uid-owner 27 -j DROP

| |

| -A OUTPUT -p tcp -m owner --uid-owner 995 -j DROP

| |

| -A OUTPUT -p tcp -m owner --uid-owner 994 -j DROP

| |

| -A OUTPUT -p tcp -m owner --uid-owner 993 -j DROP

| |

| COMMIT

| |

| # Completed on Mon May 18 16:32:05 2020

| |

| [root@osestaging1 discourse]#

| |

| </pre>
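The rule insertion above depends on ordering: the new port 25 rule has to land before the final '<code>-A INPUT -j DROP</code>', which is why it is placed just ahead of the port 80 rule. The following is a minimal sketch of the same edit against a saved rules file, assuming GNU sed; <code>add_smtp_rule</code> is a hypothetical helper, and it only edits the file it is given (it does not call <code>iptables-restore</code>).

```shell
# Hypothetical helper: insert the docker0 SMTP rule just before the port 80
# rule in the iptables-save dump given as $1, unless it is already present.
add_smtp_rule() {
  local rule='-A INPUT -d 172.17.0.1/32 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT'
  # "--" stops grep from parsing the rule's leading "-A" as an option
  grep -qF -- "${rule}" "$1" && return 0
  sed -i "s%^\(-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT\)\$%${rule}\n\1%" "$1"
}

# Example use on a dump (not run here):
#   iptables-save > /var/tmp/iptables_test
#   add_smtp_rule /var/tmp/iptables_test
#   grep -n -- '--dport 25 ' /var/tmp/iptables_test
```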

| |

| | |

| ===Other container env vars===

| |

| | |

| Now we will update the container's yaml file with the necessary environment variables.

| |

| | |

| Execute the following commands as root to set the DISCOURSE_DEVELOPER_EMAILS variable (and comment-out the existing one):

| |

| | |

| <pre>

| |

| sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^\([^#]*\)\(DISCOURSE_DEVELOPER_EMAILS.*\)$%\1#\2%' /var/discourse/containers/discourse_ose.yml

| |

| grep '^\s*DISCOURSE_DEVELOPER_EMAILS:' /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` "s%^env:$%env:\n DISCOURSE_DEVELOPER_EMAILS: 'discourse@opensourceecology.org,ops@opensourceecology.org,marcin@opensourceecology.org,michael@opensourceecology.org'\n\n%" /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Execute the following commands as root to set the DISCOURSE_HOSTNAME variable (and comment-out the existing one):

| |

| | |

| <pre>

| |

| sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^\([^#]*\)\(DISCOURSE_HOSTNAME.*\)$%\1#\2%' /var/discourse/containers/discourse_ose.yml

| |

| grep "DISCOURSE_HOSTNAME: 'discourse.opensourceecology.org'" /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` "s%^env:$%env:\n DISCOURSE_HOSTNAME: 'discourse.opensourceecology.org'%" /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the previous two changes by confirming that your '<code>discourse_ose.yml</code>' config file now looks something like this. Note the addition of the '<code>DISCOURSE_HOSTNAME</code>' and '<code>DISCOURSE_DEVELOPER_EMAILS</code>' lines immediately under the '<code>env:</code>' block and that the following '<code>DISCOURSE_HOSTNAME</code>' and '<code>DISCOURSE_DEVELOPER_EMAILS</code>' lines are commented-out.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -C1 -E 'DISCOURSE_HOSTNAME|DISCOURSE_DEVELOPER_EMAILS' /var/discourse/containers/discourse_ose.yml

| |

| env:

| |

| DISCOURSE_HOSTNAME: 'discourse.opensourceecology.org'

| |

| DISCOURSE_DEVELOPER_EMAILS: 'discourse@opensourceecology.org,ops@opensourceecology.org,marcin@opensourceecology.org,michael@opensourceecology.org'

| |

| | |

| --

| |

| ## Required. Discourse will not work with a bare IP number.

| |

| #DISCOURSE_HOSTNAME: 'discourse.example.com'

| |

| | |

| --

| |

| ## on initial signup example 'user1@example.com,user2@example.com'

| |

| #DISCOURSE_DEVELOPER_EMAILS: 'me@example.com,you@example.com'

| |

| | |

| [root@osestaging1 discourse]#

| |

| </pre>
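Both variables above follow the same "guard with grep, then insert under <code>env:</code>" pattern. The following is a minimal sketch of that pattern as a reusable function, assuming GNU sed and two-space indentation under '<code>env:</code>'; <code>set_env_var</code> is a hypothetical helper, and the value must not contain '<code>%</code>', '<code>&</code>' or backslashes because it is pasted into a sed replacement.

```shell
# Hypothetical helper: add "KEY: VALUE" directly under the top-level "env:"
# line of the yaml file $1, unless an uncommented KEY is already set.
# Assumption: entries under "env:" are indented with two spaces.
set_env_var() {
  local file="$1" key="$2" value="$3"
  grep -q "^[[:space:]]*${key}:" "${file}" && return 0
  sed -i "s%^env:\$%env:\n  ${key}: ${value}%" "${file}"
}

# Example use on a scratch copy (not run here):
#   set_env_var /var/tmp/discourse_ose.yml.test DISCOURSE_HOSTNAME "'discourse.opensourceecology.org'"
```

Because the guard only looks for an uncommented key, the existing sample line still has to be commented-out first, as in the commands above.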

| |

| | |

| ===inner nginx===

| |

| | |

| This section will set up the "inner" nginx config, that is, the nginx config that lives inside the Discourse docker container (as opposed to the "outer" nginx config that lives on the docker host).

| |

| | |

| Also, we already have nginx bound to port 443 as our ssl terminator, so the defaults in our container's yaml file will fail. Instead, we'll set up our "inner nginx" (the one that runs inside the Discourse docker container) to listen on the default port 80, and set up docker to forward connections made to the docker host's 127.0.0.1:8020 to that port so the "outer nginx" (the one that runs on the docker host) can reach it.

| |

| | |

| Execute the following command as root to comment-out the existing "expose" block and replace it with our own:

| |

| | |

| <pre>

| |

| perl -i".`date "+%Y%m%d_%H%M%S"`" -p0e 's%expose:\n -([^\n]*)\n -([^\n]*)%#expose:\n# -\1\n# -\2\n\nexpose:\n - "8020:80" # fwd host port 8020 to container port 80 (http)\n%gs' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that the container yaml file now looks something like this. Note that first '<code>expose:</code>' block is fully commented-out and is followed by another '<code>expose:</code>' block that forwards port '<code>8020</code>' to port '<code>80</code>':

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -C4 expose /var/discourse/containers/discourse_ose.yml

| |

| ## Uncomment these two lines if you wish to add Lets Encrypt (https)

| |

| #- "templates/web.ssl.template.yml"

| |

| #- "templates/web.letsencrypt.ssl.template.yml"

| |

| | |

| ## which TCP/IP ports should this container expose?

| |

| ## If you want Discourse to share a port with another webserver like Apache or nginx,

| |

| ## see https://meta.discourse.org/t/17247 for details

| |

| #expose:

| |

| # - "80:80" # http

| |

| # - "443:443" # https

| |

| | |

| expose:

| |

| - "8020:80" # fwd host port 8020 to container port 80 (http)

| |

| | |

| | |

| params:

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

| | |

| ===Nginx mod_security===

| |

| | |

| In our other sites hosted on this server, we have a nginx -> varnish -> apache architecture. While I'd like to mimic this architecture for all our sites, it's important to note a few things about Apache, Nginx, mod_security, and Discourse that elucidate why we shouldn't do that.

| |

| | |

| # There's a package in the yum repos for adding mod_security to apache. There is no package for adding mod_security to Nginx. Adding mod_security to Nginx requires compiling Nginx from source

| |

| # Discourse is heavily tied to Nginx. It appears that nobody has ever run Discourse on Apache, and doing so would be non-trivial. Moreover, our custom Apache vhost config would likely break in future versions of Discourse <ref>https://meta.discourse.org/t/how-to-run-discourse-in-apache-vhost-not-nginx/133112/11</ref>

| |

| # Putting apache as a reverse proxy in front of Discourse could add significant performance issues because of the way Apache handles long polling, which the Discourse message bus uses <ref>https://meta.discourse.org/t/howto-setup-discourse-with-lets-encrypt-and-apache-ssl/46139</ref> <ref>https://stackoverflow.com/questions/14157515/will-apache-2s-mod-proxy-wait-and-occupy-a-worker-when-long-polling</ref> <ref>https://github.com/SamSaffron/message_bus</ref>

| |

| # The Discourse install process already compiles Nginx from source so that it can add the <code>brotli</code> module to nginx <ref>https://github.com/discourse/discourse_docker/blob/416467f6ead98f82342e8a926dc6e06f36dfbd56/image/base/install-nginx#L18</ref>

| |

| | |

| Therefore, I think it makes sense to cut apache out of the architecture for our Discourse install entirely. If we're already forced to compile nginx from source, we might as well just update their <code>install-nginx</code> script and configure mod_security in nginx instead of apache. Then our architecture becomes nginx -> varnish -> nginx.

| |

| | |

| First, execute the following commands as root to update the <code>install-nginx</code> script with the logic for installing the dependencies in our docker container and compiling nginx with mod_security.

| |

| | |

| <pre>

| |

| cp /var/discourse/image/base/install-nginx /var/discourse/image/base/install-nginx.`date "+%Y%m%d_%H%M%S"`.orig

| |

| | |

| # add a block to check out the modsecurity nginx module just before downloading the nginx source

| |

| grep 'ModSecurity' /var/discourse/image/base/install-nginx || sed -i 's%\(curl.*nginx\.org/download.*\)%# mod_security --maltfield\napt-get install -y libmodsecurity-dev modsecurity-crs\ncd /tmp\ngit clone --depth 1 https://github.com/SpiderLabs/ModSecurity-nginx.git\n\n\1%' /var/discourse/image/base/install-nginx

| |

| | |

| # update the configure line to include the ModSecurity module checked-out above

| |

| sed -i '/ModSecurity/! s%^[^#]*./configure \(.*nginx.*\)%#./configure \1\n./configure \1 --add-module=/tmp/ModSecurity-nginx%' /var/discourse/image/base/install-nginx

| |

| | |

| # add a line to cleanup section

| |

| grep 'rm -fr /tmp/ModSecurity-nginx' /var/discourse/image/base/install-nginx || sed -i 's%\(rm -fr.*/tmp/nginx.*\)%rm -fr /tmp/ModSecurity-nginx\n\1%' /var/discourse/image/base/install-nginx

| |

| </pre>

| |

| | |

| The above commands were carefully crafted to be idempotent and robust so that they will still work on future versions of the <code>install-nginx</code> script, but it's possible that they will break in the future. For reference, here is the resulting file.

| |

| | |

| Please verify the change from the above commands by confirming that your new file looks something like this. Note the addition of the '<code>mod-security</code>' block and the changed '<code>./configure</code>' line.

| |

| | |

| <pre>

| |

| [root@osestaging1 base]# cat /var/discourse/image/base/install-nginx

| |

| #!/bin/bash

| |

| set -e

| |

| VERSION=1.17.4

| |

| cd /tmp

| |

| | |

| apt install -y autoconf

| |

| | |

| | |

| git clone https://github.com/bagder/libbrotli

| |

| cd libbrotli

| |

| ./autogen.sh

| |

| ./configure

| |

| make install

| |

| | |

| cd /tmp

| |

| | |

| | |

| # this is the reason we are compiling by hand...

| |

| git clone https://github.com/google/ngx_brotli.git

| |

| | |

| # mod_security --maltfield

| |

| apt-get install -y libmodsecurity-dev modsecurity-crs

| |

| cd /tmp

| |

| git clone --depth 1 https://github.com/SpiderLabs/ModSecurity-nginx.git

| |

| | |

| curl -O https://nginx.org/download/nginx-$VERSION.tar.gz

| |

| tar zxf nginx-$VERSION.tar.gz

| |

| cd nginx-$VERSION

| |

| | |

| # so we get nginx user and so on

| |

| apt install -y nginx libpcre3 libpcre3-dev zlib1g zlib1g-dev

| |

| # we don't want to accidentally upgrade nginx and undo our work

| |

| apt-mark hold nginx

| |

| | |

| # now ngx_brotli has brotli as a submodule

| |

| cd /tmp/ngx_brotli && git submodule update --init && cd /tmp/nginx-$VERSION

| |

| | |

| # ignoring depracations with -Wno-deprecated-declarations while we wait for this https://github.com/google/ngx_brotli/issues/39#issuecomment-254093378

| |

| #./configure --with-cc-opt='-g -O2 -fPIE -fstack-protector-strong -Wformat -Werror=format-security -Wdate-time -D_FORTIFY_SOURCE=2 -Wno-deprecated-declarations' --with-ld-opt='-Wl,-Bsymbolic-functions -fPIE -pie -Wl,-z,relro -Wl,-z,now' --prefix=/usr/share/nginx --conf-path=/etc/nginx/nginx.conf --http-log-path=/var/log/nginx/access.log --error-log-path=/var/log/nginx/error.log --lock-path=/var/lock/nginx.lock --pid-path=/run/nginx.pid --http-client-body-temp-path=/var/lib/nginx/body --http-fastcgi-temp-path=/var/lib/nginx/fastcgi --http-proxy-temp-path=/var/lib/nginx/proxy --http-scgi-temp-path=/var/lib/nginx/scgi --http-uwsgi-temp-path=/var/lib/nginx/uwsgi --with-debug --with-pcre-jit --with-ipv6 --with-http_ssl_module --with-http_stub_status_module --with-http_realip_module --with-http_auth_request_module --with-http_addition_module --with-http_dav_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_v2_module --with-http_sub_module --with-stream --with-stream_ssl_module --with-mail --with-mail_ssl_module --with-threads --add-module=/tmp/ngx_brotli

| |

| ./configure --with-cc-opt='-g -O2 -fPIE -fstack-protector-strong -Wformat -Werror=format-security -Wdate-time -D_FORTIFY_SOURCE=2 -Wno-deprecated-declarations' --with-ld-opt='-Wl,-Bsymbolic-functions -fPIE -pie -Wl,-z,relro -Wl,-z,now' --prefix=/usr/share/nginx --conf-path=/etc/nginx/nginx.conf --http-log-path=/var/log/nginx/access.log --error-log-path=/var/log/nginx/error.log --lock-path=/var/lock/nginx.lock --pid-path=/run/nginx.pid --http-client-body-temp-path=/var/lib/nginx/body --http-fastcgi-temp-path=/var/lib/nginx/fastcgi --http-proxy-temp-path=/var/lib/nginx/proxy --http-scgi-temp-path=/var/lib/nginx/scgi --http-uwsgi-temp-path=/var/lib/nginx/uwsgi --with-debug --with-pcre-jit --with-ipv6 --with-http_ssl_module --with-http_stub_status_module --with-http_realip_module --with-http_auth_request_module --with-http_addition_module --with-http_dav_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_v2_module --with-http_sub_module --with-stream --with-stream_ssl_module --with-mail --with-mail_ssl_module --with-threads --add-module=/tmp/ngx_brotli --add-module=/tmp/ModSecurity-nginx

| |

| | |

| make install

| |

| | |

| mv /usr/share/nginx/sbin/nginx /usr/sbin

| |

| | |

| cd /

| |

| rm -fr /tmp/ModSecurity-nginx

| |

| rm -fr /tmp/nginx

| |

| rm -fr /tmp/libbrotli

| |

| rm -fr /tmp/ngx_brotli

| |

| rm -fr /etc/nginx/modules-enabled/*

| |

| [root@osestaging1 base]#

| |

| </pre>

| |

| | |

| Though unintuitive, Discourse's <code>launcher rebuild</code> script won't actually use the local files in <code>image/base/*</code>, including the <code>install-nginx</code> script modified above. To make sure that our Discourse docker container uses a docker image with the nginx changes made above, we have to explicitly specify the image in the hard-coded <code>image</code> variable of the <code>launcher</code> script. Sadly, this is not documented anywhere by the Discourse project, and I only discovered this solution after much trial and error.

| |

| | |

| Execute the following commands as root to change the '<code>image</code>' variable in the Discourse '<code>launcher</code>' script.

| |

| | |

| <pre>

| |

| # replace the line 'image="discourse/base:<version>"' with 'image="discourse_ose"'

| |

| grep 'discourse_ose' /var/discourse/launcher || sed --in-place=.`date "+%Y%m%d_%H%M%S"` '/base_image/! s%^\(\s*\)image=\(.*\)$%#\1image=\2\n\1image="discourse_ose"%' /var/discourse/launcher

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that the launcher script now looks something like this. Note the commented-out '<code>image=</code>' line and its replacement below it.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep 'image=' /var/discourse/launcher

| |

| user_run_image=""

| |

| user_run_image="$2"

| |

| #image="discourse/base:2.0.20200220-2221"

| |

| image="discourse_ose"

| |

| run_image=`cat $config_file | $docker_path run $user_args --rm -i -a stdin -a stdout $image ruby -e \

| |

| run_image=$user_run_image

| |

| run_image="$local_discourse/$config"

| |

| base_image=`cat $config_file | $docker_path run $user_args --rm -i -a stdin -a stdout $image ruby -e \

| |

| image=$base_image

| |

| [root@osestaging1 discourse]#

| |

| </pre>
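The <code>grep ... || sed ...</code> guard used above is what makes the edit safe to run more than once. The following is a minimal sketch of that idiom against a throwaway file rather than the real <code>launcher</code> script. It assumes GNU sed, and the function name <code>pin_image</code> and the sample file contents are hypothetical.

```shell
#!/bin/bash
# Sketch only: exercises the idempotent "grep || sed" idiom on a temp file.
# Assumes GNU sed (for \s, \n in the replacement, and --in-place).

# pin_image FILE: comment out the top-level image= line and add our own,
# unless FILE already mentions discourse_ose.
pin_image() {
  grep -q 'discourse_ose' "$1" || sed --in-place=.bak \
    '/base_image/! s%^\(\s*\)image=\(.*\)$%#\1image=\2\n\1image="discourse_ose"%' "$1"
}

demo="$(mktemp)"
printf '%s\n' 'user_run_image=""' \
              'image="discourse/base:2.0.20200220-2221"' \
              'image=$base_image' > "$demo"

pin_image "$demo"   # first run rewrites the file and leaves a .bak copy
pin_image "$demo"   # second run changes nothing
cat "$demo"
rm -f "$demo" "$demo.bak"
```

Running the function twice should leave exactly one <code>image="discourse_ose"</code> line, with the original line kept as a comment directly above it.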

| |

| | |

| And now we must build the 'discourse_ose' docker image, which will execute the updated <code>install-nginx</code> script and then become available to the <code>launcher</code> script above. This image build will take 5 minutes to 1 hour to complete.

| |

| | |

| Execute the following command as root to build the custom Discourse docker image:

| |

| | |

| <pre>

| |

| time nice docker build --tag 'discourse_ose' /var/discourse/image/base/

| |

| </pre>

| |

| | |

| Next we create a new yaml template to update the relevant nginx configuration files when bootstrapping the environment.

| |

| | |

| Execute the following commands as root to create the '<code>web.modsecurity.template.yml</code>' file.

| |

| | |

| <pre>

| |

| cat << EOF > /var/discourse/templates/web.modsecurity.template.yml

| |

| run:

| |

| - exec:

| |

| cmd:

| |

| - sudo apt-get update

| |

| - sudo apt-get install -y modsecurity-crs

| |

| - cp /etc/modsecurity/modsecurity.conf-recommended /etc/modsecurity/modsecurity.conf

| |

| - sed -i 's/SecRuleEngine DetectionOnly/SecRuleEngine On/' /etc/modsecurity/modsecurity.conf

| |

| - sed -i 's^\(\s*\)[^#]*SecRequestBodyInMemoryLimit\(.*\)^\1#SecRequestBodyInMemoryLimit\2^' /etc/modsecurity/modsecurity.conf

| |

| - sed -i '/nginx/! s%^\(\s*\)[^#]*SecAuditLog \(.*\)%#\1SecAuditLog \2\n\1SecAuditLog /var/log/nginx/modsec_audit.log%' /etc/modsecurity/modsecurity.conf

| |

| | |

| - file:

| |

| path: /etc/nginx/conf.d/modsecurity.include

| |

| contents: |

| |

| ################################################################################

| |

| # File: modsecurity.include

| |

| # Version: 0.1

| |

| # Purpose: Defines mod_security rules for the discourse vhost

| |

| # This should be included in the server{} blocks nginx vhosts.

| |

| # Author: Michael Altfield <michael@opensourceecology.org>

| |

| # Created: 2019-11-12

| |

| # Updated: 2019-11-12

| |

| ################################################################################

| |

| Include "/etc/modsecurity/modsecurity.conf"

| |

|

| |

| # OWASP Core Rule Set, installed from the 'modsecurity-crs' package in debian

| |

| Include /etc/modsecurity/crs/crs-setup.conf

| |

| Include /usr/share/modsecurity-crs/rules/*.conf

| |

| | |

| SecRuleRemoveById 949110 942360 200004 911100 921130 941250 941180 941160 941140 941130 941100

| |

| | |

| - replace:

| |

| filename: "/etc/nginx/conf.d/discourse.conf"

| |

| from: /server.+{/

| |

| to: |

| |

| server {

| |

| modsecurity on;

| |

| modsecurity_rules_file /etc/nginx/conf.d/modsecurity.include;

| |

| | |

| EOF

| |

| </pre>

| |

| | |

| Execute the following command as root to add the above-created template to our Discourse docker container yaml file's templates list:

| |

| | |

| <pre>

| |

| grep 'templates/web.modsecurity.template.yml' /var/discourse/containers/discourse_ose.yml || sed -i 's%^\([^#].*templates/web.template.yml.*\)$%\1\n - "templates/web.modsecurity.template.yml"%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that the <code>containers/discourse_ose.yml</code> file looks something like this. Note the addition of the <code>templates/web.modsecurity.template.yml</code> line.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -A10 templates /var/discourse/containers/discourse_ose.yml

| |

| templates:

| |

| - "templates/postgres.template.yml"

| |

| - "templates/redis.template.yml"

| |

| - "templates/web.template.yml"

| |

| - "templates/web.modsecurity.template.yml"

| |

| - "templates/web.ratelimited.template.yml"

| |

| ## Uncomment these two lines if you wish to add Lets Encrypt (https)

| |

| #- "templates/web.ssl.template.yml"

| |

| #- "templates/web.letsencrypt.ssl.template.yml"

| |

| | |

| ## which TCP/IP ports should this container expose?

| |

| ## If you want Discourse to share a port with another webserver like Apache or nginx,

| |

| ## see https://meta.discourse.org/t/17247 for details

| |

| #expose:

| |

| # - "80:80" # http

| |

| # - "443:443" # https

| |

| | |

| expose:

| |

| - "8020:80" # fwd host port 8020 to container port 80 (http)

| |

| [root@osestaging1 discourse]#

| |

| </pre>
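The verification above can also be scripted. The sketch below lists the active (uncommented) templates in file order and checks that one entry comes after another; the order presumably matters because the modsecurity template edits an nginx config that <code>web.template.yml</code> creates. The helper names <code>list_templates</code> and <code>has_template_after</code> are hypothetical, and the demo runs on a throwaway file, assuming GNU sed and the quoting style shown above.

```shell
#!/bin/bash
# Sketch only: inspect the templates list of a container yaml file.

# list_templates FILE: print uncommented template entries, one per line.
list_templates() {
  sed -n 's%^\s*-\s*"\(templates/[^"]*\)".*$%\1%p' "$1"
}

# has_template_after FILE FIRST SECOND: succeed if SECOND is listed after FIRST.
has_template_after() {
  list_templates "$1" | awk -v a="$2" -v b="$3" '
    $0 == a { seen = NR }
    $0 == b && seen { ok = 1 }
    END { exit (ok ? 0 : 1) }'
}

# Demo on a temp file instead of the real discourse_ose.yml
demo="$(mktemp)"
cat > "$demo" <<'EOF'
templates:
  - "templates/web.template.yml"
  - "templates/web.modsecurity.template.yml"
  #- "templates/web.ssl.template.yml"
EOF

list_templates "$demo"
if has_template_after "$demo" templates/web.template.yml templates/web.modsecurity.template.yml; then
  echo "modsecurity template is listed after web.template.yml"
fi
rm -f "$demo"
```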

| |

| | |

| ===unattended-upgrades===

| |

| | |

| Unfortunately, the Discourse container's [https://wiki.debian.org/UnattendedUpgrades unattended-upgrades] process is broken out of the box. Though the <code>unattended-upgrades</code> package is installed, it never actually executes: it is triggered by a systemd timer, but <code>systemd</code> is not installed in the Discourse container.

| |

| | |

| Not having <code>unattended-upgrades</code> run on a Debian OS is a huge security concern, especially for a machine that is managed by intermittent volunteer OSE developers. I [https://meta.discourse.org/t/does-discourse-container-use-unattended-upgrades raised this concern] with the Discourse team, but they didn't seem to care.

| |

| | |

| Execute the following commands as root to create a template file that will create a cron job on the Discourse container that will execute <code>unattended-upgrades</code> once per day:

| |

| | |

| <pre>

| |

| cat << EOF > /var/discourse/templates/unattended-upgrades.template.yml

| |

| run:

| |

| - file:

| |

| path: /etc/cron.d/unattended-upgrades

| |

| contents: |+

| |

| ################################################################################

| |

| # File: /etc/cron.d/unattended-upgrades

| |

| # Version: 0.2

| |

| # Purpose: run unattended-upgrades in lieu of systemd. For more info see

| |

| # * https://wiki.opensourceecology.org/wiki/Discourse

| |

| # * https://meta.discourse.org/t/does-discourse-container-use-unattended-upgrades/136296/3

| |

| # Author: Michael Altfield <michael@opensourceecology.org>

| |

| # Created: 2020-03-23

| |

| # Updated: 2020-04-23

| |

| ################################################################################

| |

| 20 04 * * * root /usr/bin/nice /usr/bin/unattended-upgrades --debug

| |

|

| |

| | |

| - exec: /bin/echo -e "\n" >> /etc/cron.d/unattended-upgrades

| |

| # fix the Docker cron bug https://stackoverflow.com/questions/43323754/cannot-make-remove-an-entry-for-the-specified-session-cron

| |

| - exec: /bin/sed --in-place=.\`date "+%Y%m%d_%H%M%S"\` 's%^\([^#]*\)\(session\s\+required\s\+pam_loginuid\.so\)$%\1#\2%' /etc/pam.d/cron

| |

| EOF

| |

| </pre>
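The template above appends a newline to the cron file because cron implementations commonly reject or ignore a file whose last line is unterminated. The sketch below is one way to sanity-check such a file once the container has been rebuilt. The function name <code>check_crond_file</code> is hypothetical, and the check is deliberately simple: it would flag environment-variable lines such as <code>PATH=...</code>, which the file above does not contain.

```shell
#!/bin/bash
# Sketch only: basic sanity checks for a cron.d style file.

# check_crond_file FILE: succeed if FILE is non-empty, ends with a newline,
# and every job line has 5 schedule fields + user + command (>= 7 fields).
check_crond_file() {
  [ -s "$1" ] && [ -z "$(tail -c 1 "$1")" ] || return 1
  awk '/^[[:space:]]*(#|$)/ { next }   # skip comments and blank lines
       NF < 7 { bad = 1 }
       END { exit bad }' "$1"
}

# Demo on a temp file that mimics the job defined above
demo="$(mktemp)"
printf '%s\n' '# run unattended-upgrades in lieu of systemd' \
  '20 04 * * * root /usr/bin/nice /usr/bin/unattended-upgrades --debug' > "$demo"

if check_crond_file "$demo"; then
  echo "cron file looks well-formed"
else
  echo "cron file needs attention"
fi
rm -f "$demo"
```

Inside the container, the same function could be pointed at <code>/etc/cron.d/unattended-upgrades</code>.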

| |

| | |

| And, finally, execute the following command as root to add the above-created template to our container yaml file's template list:

| |

| | |

| <pre>

| |

| grep 'templates/unattended-upgrades.template.yml' /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^templates:$%templates:\n - "templates/unattended-upgrades.template.yml"%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that your <code>containers/discourse_ose.yml</code> file looks something like this. Note the addition of the '<code>templates/unattended-upgrades.template.yml</code>' line.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep templates /var/discourse/containers/discourse_ose.yml

| |

| templates:

| |

| - "templates/unattended-upgrades.template.yml"

| |

| - "templates/postgres.template.yml"

| |

| - "templates/redis.template.yml"

| |

| - "templates/web.template.yml"

| |

| - "templates/web.modsecurity.template.yml"

| |

| - "templates/web.ratelimited.template.yml"

| |

| #- "templates/web.ssl.template.yml"

| |

| #- "templates/web.letsencrypt.ssl.template.yml"

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

| | |

| ===iptables===

| |

| | |

| Discourse will run fine with its container having literally no internet access. This is because the communication in/out of the Discourse docker container is done via an nginx reverse proxy on the docker host through a unix socket file on the Discourse docker container.

| |

| | |

| However, the docker container is a whole OS on its own, including its own apt packages. Therefore, it's critical that the docker container running Discourse maintain security patches for its OS via Debian's unattended-upgrades.

| |

| | |

| Therefore, rather than blocking all internet traffic from the Discourse container, it's better to use iptables to set up a firewall similar to the one on our other servers (blocking the web server from initiating outbound connections). Note that this is necessarily done <em>inside</em> the container so we can create OUTPUT rules that block on a per-user basis, which cannot be done from the docker host. This is critical to prevent nginx/ruby/etc from initiating outbound connections. Indeed, web servers should only be able to <em>respond</em> to requests.

| |

| | |

| Unfortunately, being root in the default Discourse docker container is not sufficient to edit iptables rules; you'll still get permission denied errors. To fix this, we must first add the <code>NET_ADMIN</code> capability to the Discourse docker container spawned by the <code>launcher</code> script. The most robust way to do so is to update your container's yaml file to pass the necessary argument to the <code>docker run ... /sbin/boot</code> command via the <code>docker_args</code> yaml string.

| |

| | |

| Execute this as root to update the container's yaml file:

| |

| | |

| <pre>

| |

| grep 'NET_ADMIN' /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^templates:$%docker_args: "--cap-add NET_ADMIN"\n\ntemplates:%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that your file now looks something like this. Note the addition of the '<code>docker_args:</code>' line.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -C2 'templates:' /var/discourse/containers/discourse_ose.yml

| |

| docker_args: "--cap-add NET_ADMIN"

| |

| | |

| templates:

| |

| - "templates/unattended-upgrades.template.yml"

| |

| - "templates/postgres.template.yml"

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

| | |

| The above change defined <code>--cap-add NET_ADMIN</code> as an extra argument to be passed to the <code>docker run ... /sbin/boot</code> command executed by <code>launcher</code> script's <code>run_start()</code> function via the <code>$user_args</code> variable:

| |

| | |

| * https://github.com/discourse/discourse_docker/blob/87fd7172af8f2848d5118fdebada646c5996821b/launcher#L631-L633

| |

| | |

| <pre>

| |

| run_start() {

| |

| ...

| |

| $docker_path run --shm-size=512m $links $attach_on_run $restart_policy "${env[@]}" "${labels[@]}" -h "$hostname" \

| |

| -e DOCKER_HOST_IP="$docker_ip" --name $config -t "${ports[@]}" $volumes $mac_address $user_args \

| |

| $run_image $boot_command

| |

| | |

| )

| |

| exit 0

| |

| | |

| }

| |

| </pre>
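Once the container has been rebuilt, one way to confirm that the capability actually arrived is to inspect the effective capability mask of a process inside it. The sketch below decodes the <code>CapEff</code> field of <code>/proc/self/status</code> and tests bit 12, which is <code>CAP_NET_ADMIN</code> in the Linux capability numbering. The function name <code>has_net_admin</code> is hypothetical, and the sample masks in the comments are only illustrative values.

```shell
#!/bin/bash
# Sketch only: check whether a capability mask includes CAP_NET_ADMIN.

# has_net_admin HEXMASK: succeed if bit 12 (CAP_NET_ADMIN) is set.
# e.g. 00000000a80425fb has the bit clear, 00000000a80435fb has it set.
has_net_admin() {
  [ $(( (0x$1 >> 12) & 1 )) -eq 1 ]
}

# Report on the current process; meant to be run inside the container.
if [ -r /proc/self/status ]; then
  mask="$(awk '/^CapEff:/ { print $2 }' /proc/self/status)"
  if [ -n "$mask" ] && has_net_admin "$mask"; then
    echo "CAP_NET_ADMIN present (CapEff=$mask)"
  else
    echo "CAP_NET_ADMIN missing (CapEff=$mask)"
  fi
fi
```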

| |

| | |

| Now execute the following as root to add a template for setting up iptables in the docker container's runit boot scripts.

| |

| | |

| <pre>

| |

| cat << EOF > /var/discourse/templates/iptables.template.yml

| |

| run:

| |

| - file:

| |

| path: /etc/runit/1.d/01-iptables

| |

| chmod: "+x"

| |

| contents: |

| |

| #!/bin/bash

| |

| ################################################################################

| |

| # File: /etc/runit/1.d/01-iptables

| |

| # Version: 0.4

| |

| # Purpose: installs & locks-down iptables

| |

| # Author: Michael Altfield <michael@opensourceecology.org>

| |

| # Created: 2019-11-26

| |

| # Updated: 2020-05-18

| |

| ################################################################################

| |

| sudo apt-get update

| |

| sudo apt-get install -y iptables

| |

| sudo iptables -A INPUT -i lo -j ACCEPT

| |

| sudo iptables -A INPUT -s 127.0.0.1/32 -j DROP

| |

| sudo iptables -A INPUT -s 172.16.0.0/12 -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

| |

| sudo iptables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| sudo iptables -A INPUT -j DROP

| |

| sudo iptables -A OUTPUT -s 127.0.0.1/32 -d 127.0.0.1/32 -j ACCEPT

| |

| sudo iptables -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| sudo iptables -A OUTPUT -m owner --uid-owner 0 -j ACCEPT

| |

| sudo iptables -A OUTPUT -m owner --uid-owner 100 -j ACCEPT

| |

| sudo iptables -A OUTPUT -d 172.17.0.1 -p tcp -m state --state NEW -m tcp --dport 25 -j ACCEPT

| |

| sudo iptables -A OUTPUT -j DROP

| |

| sudo ip6tables -A INPUT -i lo -j ACCEPT

| |

| sudo ip6tables -A INPUT -s ::1/128 -j DROP

| |

| sudo ip6tables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| sudo ip6tables -A INPUT -j DROP

| |

| sudo ip6tables -A OUTPUT -s ::1/128 -d ::1/128 -j ACCEPT

| |

| sudo ip6tables -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

| |

| sudo ip6tables -A OUTPUT -m owner --uid-owner 0 -j ACCEPT

| |

| sudo ip6tables -A OUTPUT -m owner --uid-owner 100 -j ACCEPT

| |

| sudo ip6tables -A OUTPUT -j DROP

| |

| | |

| EOF

| |

| </pre>
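The OUTPUT rules above let only uid 0 and uid 100 open new outbound connections. The template does not name the account behind uid 100; on Debian-based images it is often the <code>_apt</code> user that apt uses for downloads, but that is an assumption worth checking inside the container. A minimal sketch for that check follows, with a hypothetical helper name <code>user_for_uid</code>.

```shell
#!/bin/bash
# Sketch only: map the uids used in the --uid-owner rules to account names.

# user_for_uid UID [PASSWD_FILE]: print the login name that owns UID,
# reading /etc/passwd unless another file is given.
user_for_uid() {
  awk -F: -v uid="$1" '
    $3 == uid { print $1; found = 1; exit }
    END { if (!found) print "(no such uid)" }' "${2:-/etc/passwd}"
}

for uid in 0 100; do
  printf 'uid %s -> %s\n' "$uid" "$(user_for_uid "$uid")"
done
```

If uid 100 turns out to belong to a different account in a future base image, the two <code>--uid-owner 100</code> rules would need to be adjusted to match.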

| |

| | |

| And, finally, execute the following command as root to add the above-created template to our container yaml file's template list:

| |

| | |

| <pre>

| |

| grep 'templates/iptables.template.yml' /var/discourse/containers/discourse_ose.yml || sed --in-place=.`date "+%Y%m%d_%H%M%S"` 's%^templates:$%templates:\n - "templates/iptables.template.yml"%' /var/discourse/containers/discourse_ose.yml

| |

| </pre>

| |

| | |

| Verify the change from the above command by confirming that your <code>containers/discourse_ose.yml</code> file looks something like this. Note the addition of the '<code>templates/iptables.template.yml</code>' line.

| |

| | |

| <pre>

| |

| [root@osestaging1 discourse]# grep -C2 'templates:' /var/discourse/containers/discourse_ose.yml

| |

| docker_args: "--cap-add NET_ADMIN"

| |

| | |

| templates:

| |

| - "templates/iptables.template.yml"

| |

| - "templates/unattended-upgrades.template.yml"

| |

| [root@osestaging1 discourse]#

| |

| </pre>

| |

| | |

| ==discourse.opensourceecology.org DNS==

| |

| | |

| Add 'discourse.opensourceecology.org' to the list of domain names defined for our VPN IP Address in /etc/hosts on the staging server.

| |

| | |

| In production, this will mean actually adding A & AAAA DNS entries for 'discourse' to point to our production server.

| |

| | |

| =="Outer" nginx Vhost Config==

| |

| | |

| Now we will set up our nginx config for the "outer" nginx process, that is, the one that runs on the docker host (as opposed to the "inner" nginx process that lives inside the Discourse docker container).

| |

| | |

| Create the following nginx vhost config file to proxy connections sent to '<code>discourse.opensourceecology.org</code>' to the local varnish cache, which in turn forwards them to the port exposed by the Discourse container. Note that this file exists on the docker host that runs the Discourse container, and it controls <em>that</em> nginx server, which is distinct from the nginx server that runs <em>inside</em> the Discourse docker container.

| |

| | |

| Execute the following commands as root to create the '<code>/etc/nginx/conf.d/discourse.opensourceecology.org.conf</code>' file.

| |

| | |

| <pre>

| |

| cat > /etc/nginx/conf.d/discourse.opensourceecology.org.conf <<'EOF'

| |

| ################################################################################

| |

| # File: discourse.opensourceecology.org.conf

| |

| # Version: 0.4

| |

| # Purpose: Internet-listening web server for truncating https, basic DOS

| |

| # protection, and passing to varnish cache (varnish then passes to

| |

| # apache)

| |

| # Author: Michael Altfield <michael@opensourceecology.org>

| |

| # Created: 2019-11-07

| |

| # Updated: 2020-05-18

| |

| ################################################################################

| |

| | |

| server {

| |

| | |

| access_log /var/log/nginx/discourse.opensourceecology.org/access.log main;

| |

| error_log /var/log/nginx/discourse.opensourceecology.org/error.log;

| |

| | |

| # resetting this back to its nginx default to override our DOS protection

| |

| # since the Discourse developers like to store a ton of data on the URI and

| |

| # directly in client's cookies instead of using POST and server-side storage

| |

| # * https://meta.discourse.org/t/discourse-session-cookies-400-request-header-or-cookie-too-large/137245/6

| |

| large_client_header_buffers 4 8k;

| |

| | |

| # we can't use the global 'secure.include' file for Discourse, which

| |

| # requires use of the DELETE http method, for example

| |

| #include conf.d/secure.include;

| |

| | |

| # whitelist requests to disable TRACE

| |

| if ($request_method !~ ^(GET|HEAD|POST|DELETE|PUT)$ ) {

| |

| # note: 444 is a meta code; it doesn't return anything, actually

| |

| # it just logs, drops, & closes the connection (useful

| |

| # against malware)

| |

| return 444;

| |

| }

| |

| | |

| ## block some bot's useragents (may need to remove some, if impacts SEO)

| |

| if ($blockedagent) {

| |

| return 403;

| |

| }

| |

| | |

| include conf.d/ssl.opensourceecology.org.include;

| |

| | |

| # TODO: change this to production IPs

| |

| listen 10.241.189.11:443;

| |

| #listen [2a01:4f8:172:209e::2]:443;

| |

| | |

| server_name discourse.opensourceecology.org;

| |

| | |

| #############

| |

| # SITE_DOWN #

| |

| #############

| |

| # uncomment this block && restart nginx prior to apache work to display the

| |

| # "SITE DOWN" webpage for our clients

| |

| | |

| # root /var/www/html/SITE_DOWN/htdocs/;

| |

| # index index.html index.htm;

| |

| #

| |

| # # force all requests to load exactly this page

| |

| # location / {

| |

| # try_files $uri /index.html;

| |

| # }

| |

| | |

| ###################

| |

| # SEND TO VARNISH #

| |

| ###################

| |

| | |

| location / {

| |

| proxy_pass http://127.0.0.1:6081;

| |

| proxy_set_header X-Real-IP $remote_addr;

| |

| proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

| |

| proxy_set_header X-Forwarded-Proto https;

| |

| proxy_set_header X-Forwarded-Port 443;

| |

| proxy_set_header Host $host;

| |

| proxy_http_version 1.1;

| |

| }

| |

| | |

| }

| |

| EOF

| |

| </pre>
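The <code>if ($request_method !~ ...)</code> guard in the config above admits exactly five methods and answers everything else with 444. The sketch below applies the same regular expression to a list of methods to show which ones would be proxied. It only mirrors the pattern and does not talk to nginx; the function name <code>method_allowed</code> is hypothetical.

```shell
#!/bin/bash
# Sketch only: evaluate the vhost's method whitelist regex outside of nginx.

# method_allowed METHOD: succeed if METHOD matches the whitelist used above.
method_allowed() {
  printf '%s\n' "$1" | grep -Eq '^(GET|HEAD|POST|DELETE|PUT)$'
}

for m in GET HEAD POST PUT DELETE OPTIONS PATCH TRACE; do
  if method_allowed "$m"; then
    echo "$m: proxied"
  else
    echo "$m: dropped with 444"
  fi
done
```

Note that the whitelist rejects not only TRACE but also OPTIONS and PATCH, so the pattern would need extending if Discourse ever requires those methods.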

| |

| | |

| Now execute the following commands as root to create the prerequisite logging directories for nginx:

| |

| | |

| <pre>

| |

| mkdir "/var/log/nginx/discourse.opensourceecology.org"

| |

| chown nginx:nginx "/var/log/nginx/discourse.opensourceecology.org"

| |

| chmod 0755 "/var/log/nginx/discourse.opensourceecology.org"

| |

| </pre>

| |

| | |

| ==Varnish==

| |

| | |

| This section describes how to set up varnish as a very simple in-memory cache for Discourse.

| |

| | |

| Because Discourse doesn't support the PURGE method (the developers don't seem to care, claiming that their undocumented & non-user-configurable internal caching is sufficient), we only implement a very simple 1-5 minute cache. This will at least help to keep the site online in a reddit "hug-of-death" incident (ie: a Discourse topic goes viral on social media, sending a "thundering herd" of traffic to our site all at once). Without the web app implementing a PURGE call to varnish, the worst case result of this config is that someone is notified that a topic is updated 5 minutes later than it was actually updated. That's OK.

| |

| | |

| * https://meta.discourse.org/t/discourse-purge-cache-method-on-content-changes/132917

| |

| | |

| Execute the following as root to create the Discourse-specific varnish config file:

| |

| | |

| <pre>

| |

| cat > /etc/varnish/sites-enabled/discourse.opensourceecology.org <<'EOF'

| |

| ################################################################################

| |

| # File: discourse.opensourceecology.org.vcl

| |

| # Version: 0.3

| |

| # Purpose: Config file for ose's discourse site

| |

| # Author: Michael Altfield <michael@opensourceecology.org>

| |

| # Created: 2020-03-23

| |

| # Updated: 2020-05-18

| |

| ################################################################################

| |

| | |

| vcl 4.0;

| |

| | |

| ##########################

| |

| # VHOST-SPECIFIC BACKEND #

| |

| ##########################

| |

| | |

| backend discourse_opensourceecology_org {

| |

| .host = "127.0.0.1";

| |

| .port = "8020";

| |

| }

| |

| | |

| sub vcl_recv {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| set req.backend_hint = discourse_opensourceecology_org;

| |

| | |

| # Serve objects up to 2 minutes past their expiry if the backend

| |

| # is slow to respond.

| |

| #set req.grace = 5m;

| |

| | |

| # append X-Forwarded-For for the backend

| |

| # (note: this is already done by default in varnish >= v4.0)

| |

| #if (req.http.X-Forwarded-For) {

| |

| # set req.http.X-Forwarded-For =

| |

| # req.http.X-Forwarded-For + ", " + client.ip;

| |

| #} else {

| |

| # set req.http.X-Forwarded-For = client.ip;

| |

| #}

| |

| | |

| # This uses the ACL action called "purge". Basically if a request to

| |

| # PURGE the cache comes from anywhere other than localhost, ignore it.

| |

| if (req.method == "PURGE") {

| |

| if (!client.ip ~ purge) {

| |

| return (synth(405, "Not allowed."));

| |

| } else {

| |

| return (purge);

| |

| }

| |

| }

| |

| | |

| # Pass any requests that Varnish does not understand straight to the backend.

| |

| if (

| |

| req.method != "GET" && req.method != "HEAD" &&

| |

| req.method != "PUT" && req.method != "POST" &&

| |

| req.method != "TRACE" && req.method != "OPTIONS" &&

| |

| req.method != "DELETE"

| |

| ) {

| |

| return (pipe);

| |

| } /* Non-RFC2616 or CONNECT which is weird. */

| |

| | |

| # Pass anything other than GET and HEAD directly.

| |

| if (req.method != "GET" && req.method != "HEAD") {

| |

| return (pass);

| |

| } /* We only deal with GET and HEAD by default */

| |

| | |

| # cache static content, even if a user is logged-in (but strip cookies)

| |

| if (req.method ~ "^(GET|HEAD)$" && req.url ~ "\.(jpg|jpeg|gif|png|ico|css|zip|tgz|gz|rar|bz2|pdf|txt|tar|wav|bmp|rtf|js|flv|swf|html|htm)(\?.*)?$") {

| |

| | |

| # if you use a subdomain for admin section, do not cache it

| |

| #if (req.http.host ~ "admin.yourdomain.com") {

| |

| # set req.http.X-VC-Cacheable = "NO:Admin domain";

| |

| # return(pass);

| |

| #}

| |

| # enable this if you want

| |

| #if (req.url ~ "debug") {

| |

| # set req.http.X-VC-Debug = "true";

| |

| #}

| |

| # enable this if you need it

| |

| #if (req.url ~ "nocache") {

| |

| # set req.http.X-VC-Cacheable = "NO:Not cacheable, nocache in URL";

| |

| # return(pass);

| |

| #}

| |

| | |

| set req.url = regsub(req.url, "\?.*$", "");

| |

| | |

| # unset cookie only if no http auth

| |

| if (!req.http.Authorization) {

| |

| unset req.http.Cookie;

| |

| }

| |

| | |

| return(hash);

| |

| | |

| }

| |

| | |

| # Pass requests from logged-in users directly.

| |

| # Only detect cookies with "session" and "Token" in file name, otherwise nothing gets cached.

| |

| if (req.http.Authorization || req.http.Cookie ~ "session" || req.http.Cookie ~ "Token") {

| |

| return (pass);

| |

| } /* Not cacheable by default */

| |

| | |

| # Pass any requests with the "If-None-Match" header directly.

| |

| if (req.http.If-None-Match) {

| |

| return (pass);

| |

| }

| |

| | |

| if (req.http.Cache-Control ~ "no-cache") {

| |

| # swap these to respect client "no-cache" requests

| |

| #ban(req.url);

| |

| unset req.http.Cache-Control;

| |

| }

| |

| | |

| return (hash);

| |

| | |

| }

| |

| | |

| }

| |

| | |

| sub vcl_hash {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| # TODO

| |

| | |

| }

| |

| }

| |

| | |

| sub vcl_backend_response {

| |

| | |

| if ( beresp.backend.name == "discourse_opensourceecology_org" ){

| |

| | |

| # Avoid caching error responses

| |

| if ( beresp.status != 200 && beresp.status != 203 && beresp.status != 300 && beresp.status != 301 && beresp.status != 302 && beresp.status != 304 && beresp.status != 307 && beresp.status != 410 && beresp.status != 404 ) {

| |

| set beresp.ttl = 0s;

| |

| set beresp.grace = 15s;

| |

| return (deliver);

| |

| }

| |

| | |

| if (!beresp.ttl > 0s) {

| |

| set beresp.uncacheable = true;

| |

| return (deliver);

| |

| }

| |

| | |

| if (beresp.http.Set-Cookie) {

| |

| set beresp.uncacheable = true;

| |

| return (deliver);

| |

| }

| |

| | |

| | |

| # discourse wasn't designed to play well with reverse proxy caching; ignore

| |

| # its ignorant no-cache headers (instead we just use a very short ttl)

| |

| if (beresp.http.Cache-Control ~ "(private|no-cache|no-store)") {

| |

| unset beresp.http.Cache-Control;

| |

| }

| |

| | |

| if (beresp.http.Authorization && !beresp.http.Cache-Control ~ "public") {

| |

| set beresp.uncacheable = true;

| |

| return (deliver);

| |

| }

| |

| | |

| # always cache for 1-5 minutes with Discourse; we shouldn't set this to longer

| |

| # because Discourse doesn't support PURGE. For more info, see:

| |

| # * https://meta.discourse.org/t/discourse-purge-cache-method-on-content-changes/132917/15

| |

| set beresp.ttl = 1m;

| |

| set beresp.grace = 5m;

| |

| return (deliver);

| |

| | |

| }

| |

| | |

| }

| |

| | |

| sub vcl_synth {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| # TODO

| |

| | |

| }

| |

| | |

| }

| |

| | |

| sub vcl_pipe {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| # Note that only the first request to the backend will have

| |

| # X-Forwarded-For set. If you use X-Forwarded-For and want to

| |

| # have it set for all requests, make sure to have:

| |

| # set req.http.connection = "close";

| |

|

| |

| # This is otherwise not necessary if you do not do any request rewriting.

| |

|

| |

| #set req.http.connection = "close";

| |

| | |

| }

| |

| }

| |

| | |

| sub vcl_hit {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| # this is left-over from copying this config from the wiki's varnish config,

| |

| # but it won't actually be used until Discourse implements PURGE

| |

| | |

| if (req.method == "PURGE") {

| |

| ban(req.url);

| |

| return (synth(200, "Purged"));

| |

| }

| |

| | |

| if (!obj.ttl > 0s) {

| |

| return (pass);

| |

| }

| |

| | |

| }

| |

| }

| |

| | |

| sub vcl_miss {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| if (req.method == "PURGE") {

| |

| return (synth(200, "Not in cache"));

| |

| }

| |

| | |

| }

| |

| }

| |

| | |

| sub vcl_deliver {

| |

| | |

| if ( req.http.host == "discourse.opensourceecology.org" ){

| |

| | |

| # TODO

| |

| | |

| }

| |

| }

| |

| EOF

| |

| </pre>
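After varnish is restarted with this config, a quick way to see whether a response came from the cache is to look at the <code>X-Varnish</code> response header: Varnish puts one transaction ID in it on a miss and two on a hit. The sketch below classifies a set of response headers read from standard input. The function name <code>cache_status</code> is hypothetical, and it assumes the header is not stripped anywhere between varnish and the client.

```shell
#!/bin/bash
# Sketch only: classify a response as a varnish hit or miss from its headers.

# cache_status: read HTTP response headers on stdin and print
#   "hit"     if X-Varnish carries two transaction IDs,
#   "miss"    if it carries one,
#   "unknown" if the header is absent.
cache_status() {
  awk 'BEGIN { s = "unknown" }
       tolower($1) == "x-varnish:" { s = (NF >= 3) ? "hit" : "miss" }
       END { print s }'
}

# Demo with canned headers; against a live site one could instead run
#   curl -sI https://discourse.opensourceecology.org/ | cache_status
printf 'HTTP/1.1 200 OK\r\nX-Varnish: 32770 3\r\nAge: 12\r\n\r\n' | cache_status
printf 'HTTP/1.1 200 OK\r\nX-Varnish: 32772\r\nAge: 0\r\n\r\n' | cache_status
```

With the 1-minute TTL set above, requesting the same anonymous page twice within a minute should move it from a miss to a hit.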

| |

| | |

| Now execute the following commands as root to enable the above config file:

| |

| | |

| <pre>

| |

| grep 'discourse.opensourceecology.org' /etc/varnish/all-vhosts.vcl || (cp /etc/varnish/all-vhosts.vcl /etc/varnish/all-vhosts.vcl.`date "+%Y%m%d_%H%M%S"` && echo 'include "sites-enabled/discourse.opensourceecology.org";' >> /etc/varnish/all-vhosts.vcl)

| |

| </pre>

| |

| | |

| Verify the change from the previous commands by confirming that your varnish '<code>all-vhosts.vcl</code>' file now looks something like this. Note the addition of the '<code>sites-enabled/discourse.opensourceecology.org</code>' line at the end.

| |

| | |

| <pre>

| |

| [root@osestaging1 sites-enabled]# tail /etc/varnish/all-vhosts.vcl

| |

| include "sites-enabled/www.opensourceecology.org";

| |

| include "sites-enabled/seedhome.openbuildinginstitute.org";

| |

| include "sites-enabled/fef.opensourceecology.org";

| |

| include "sites-enabled/oswh.opensourceecology.org";

| |

| include "sites-enabled/forum.opensourceecology.org";

| |

| include "sites-enabled/wiki.opensourceecology.org";

| |

| include "sites-enabled/phplist.opensourceecology.org";

| |

| include "sites-enabled/microfactory.opensourceecology.org";

| |

| include "sites-enabled/store.opensourceecology.org";

| |

| include "sites-enabled/discourse.opensourceecology.org";

| |

| [root@osestaging1 sites-enabled]#

| |

| </pre>

| |

| | |

| Finally, execute the following command as root to restart varnish:

| |

| | |

| <pre>

| |

| varnishd -Cf /etc/varnish/default.vcl > /dev/null && systemctl restart varnish

| |

| </pre>

| |

| | |

==Backups==

Add this block to /root/backups/backup.sh (TODO: test this)

<pre>
#############
# DISCOURSE #
#############

# cleanup old backups
$NICE $RM -rf /var/discourse/shared/standalone/backups/default/*.tar.gz
time $NICE $DOCKER exec discourse_ose discourse backup
$NICE $MV /var/discourse/shared/standalone/backups/default/*.tar.gz "${backupDirPath}/${archiveDirName}/discourse_ose/"

...

#########
# FILES #
#########

# /var/discourse
echo -e "\tINFO: /var/discourse"
$MKDIR "${backupDirPath}/${archiveDirName}/discourse_ose"
time $NICE $TAR --exclude "/var/discourse/shared/standalone/postgres_data" --exclude "/var/discourse/shared/standalone/postgres_data/uploads" --exclude "/var/discourse/shared/standalone/backups" -czf "${backupDirPath}/${archiveDirName}/discourse_ose/discourse_ose.${stamp}.tar.gz" /var/discourse/*
</pre>
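The move step in the block above only works if <code>discourse backup</code> actually produced an archive and the destination directory already exists. One way to guard against both is sketched below. This is an untested illustration, not part of backup.sh: <code>move_backups</code> is a hypothetical helper name, and the directories are passed in as arguments rather than taken from the script's variables.

```shell
#!/bin/sh
# Hypothetical helper (not part of backup.sh): move every *.tar.gz from
# src_dir into dest_dir, creating dest_dir if needed. Returns non-zero if
# no archive was found or if a move failed.
move_backups() {
    src_dir="$1"
    dest_dir="$2"
    moved=0
    mkdir -p "${dest_dir}" || return 1
    for archive in "${src_dir}"/*.tar.gz; do
        # an unmatched glob is left as a literal string; skip it
        [ -e "${archive}" ] || continue
        mv "${archive}" "${dest_dir}/" || return 1
        moved=$((moved + 1))
    done
    [ "${moved}" -gt 0 ]
}

# Example usage with the paths from this guide:
#   move_backups /var/discourse/shared/standalone/backups/default \
#     "${backupDirPath}/${archiveDirName}/discourse_ose" \
#     || echo "ERROR: no Discourse backup archive was moved"
```

Returning non-zero when nothing was moved lets the calling script log a failed backup instead of silently continuing.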

==SSL Cert==

TODO: update the certbot cron script to add a Subject Alt Name for discourse.opensourceecology.org

==Import Vanilla Forums==

TODO: attempt to import our old forum's data into Discourse.

==Docker image cleanup cron==

A common pitfall when running Docker in production is that images build up over time, eventually filling the disk and breaking the server.

Execute the commands below as root to add a script, a corresponding cron job, and a log directory for pruning Docker images to prevent the disk from filling:

<pre>
cat > /usr/local/bin/docker-purge.sh <<'EOF'
#!/bin/bash
set -x
################################################################################
# Author: Michael Altfield <michael at opensourceecology dot org>
# Created: 2020-03-08
# Updated: 2020-03-08
# Version: 0.1
# Purpose: Cleanup docker garbage to prevent disk fill issues
################################################################################

############
# SETTINGS #
############

NICE='/bin/nice'
DOCKER='/bin/docker'
DATE='/bin/date'

##########
# HEADER #
##########

stamp=`${DATE} -u +%Y%m%d_%H%M%S`
echo "================================================================================"
echo "INFO: Beginning docker pruning on ${stamp}"

###################
# DOCKER COMMANDS #
###################

# automatically clean unused containers and images that are >= 4 weeks old
time ${NICE} ${DOCKER} container prune --force --filter until=672h
time ${NICE} ${DOCKER} image prune --force --all --filter until=672h
time ${NICE} ${DOCKER} system prune --force --all --filter until=672h

# exit cleanly
exit 0
EOF
chmod +x /usr/local/bin/docker-purge.sh
mkdir -p /var/log/docker
cat << EOF > /etc/cron.d/docker-prune
################################################################################
# File: /etc/cron.d/docker-prune
# Version: 0.1
# Purpose: Cleanup docker garbage to prevent disk fill issues. For more info see
# * https://wiki.opensourceecology.org/wiki/Discourse
# Author: Michael Altfield <michael@opensourceecology.org>
# Created: 2020-03-23
# Updated: 2020-03-23
################################################################################
40 07 * * * root time /bin/nice /usr/local/bin/docker-purge.sh &>> /var/log/docker/prune.log
EOF
</pre>
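The <code>until=672h</code> filter in the script above is simply 4 weeks expressed in hours (4 × 7 × 24 = 672). If the retention period ever needs to change, the value can be derived instead of hand-computed. A minimal sketch follows; <code>weeks_to_hours</code> is a hypothetical helper name and is not part of docker-purge.sh.

```shell
#!/bin/sh
# Hypothetical helper: convert whole weeks to hours, for use with
# docker's "--filter until=<N>h" option.
weeks_to_hours() {
    echo "$(( $1 * 7 * 24 ))"
}

retention="$(weeks_to_hours 4)h"
echo "until=${retention}"   # prints: until=672h
```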

==Start Discourse==

Execute the following command as root to (re)build the container (destroy old, bootstrap, start new). This process may take 5-20 minutes.

<pre>
time /var/discourse/launcher rebuild discourse_ose
</pre>

==Restart Nginx==