Maltfield Log/2019 Q3

My work log from the year 2019 Quarter 3. I intentionally made this verbose to make future admin's work easier when troubleshooting. The more keywords, error messages, etc that are listed in this log, the more helpful it will be for the future OSE Sysadmin.

Sat Sep 07, 2019

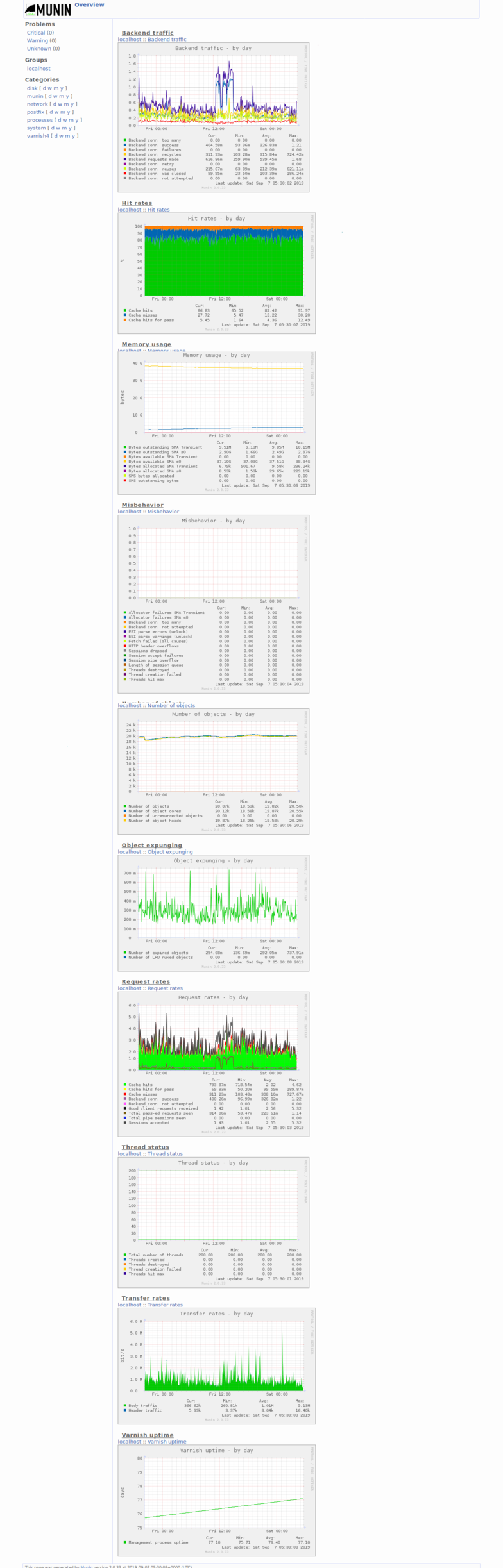

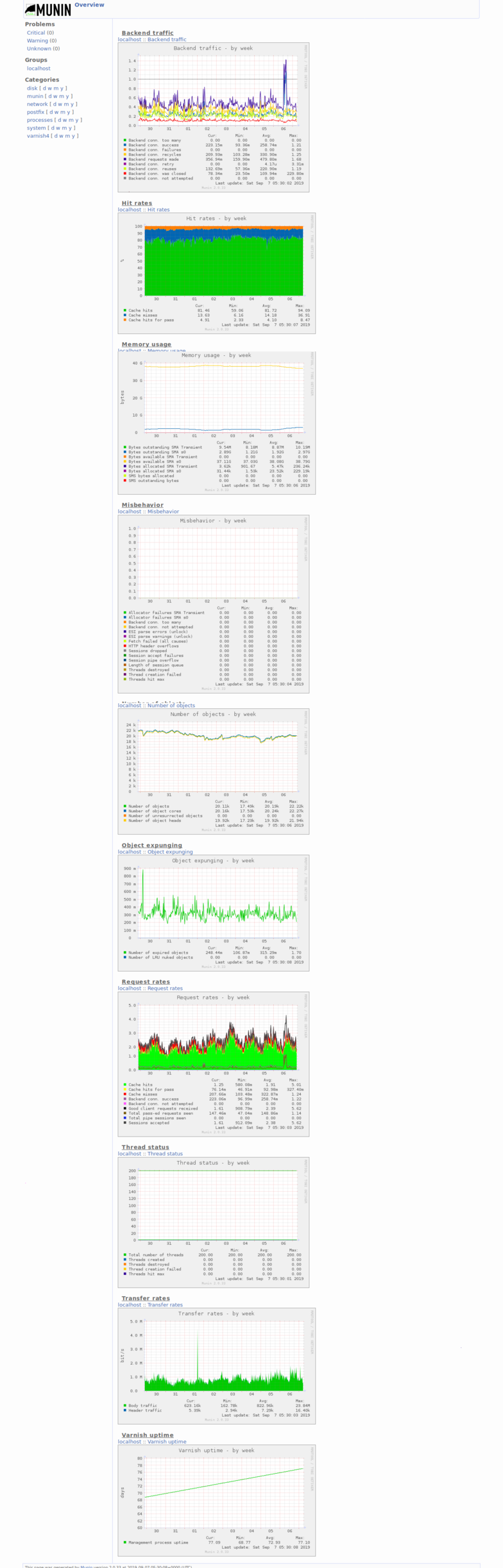

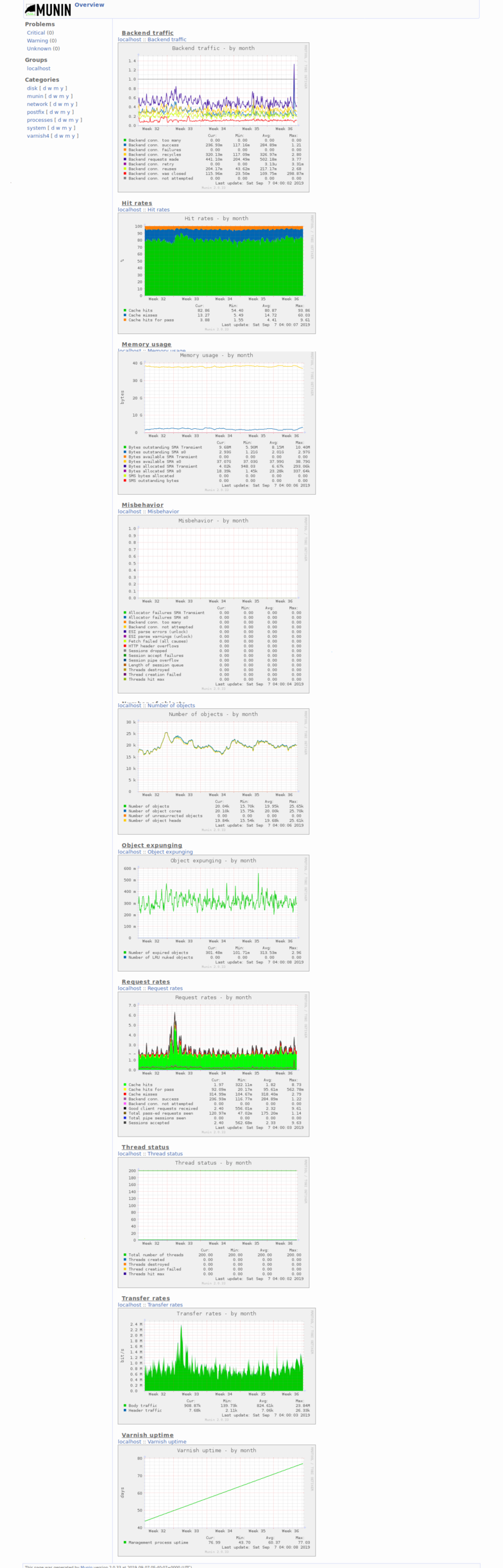

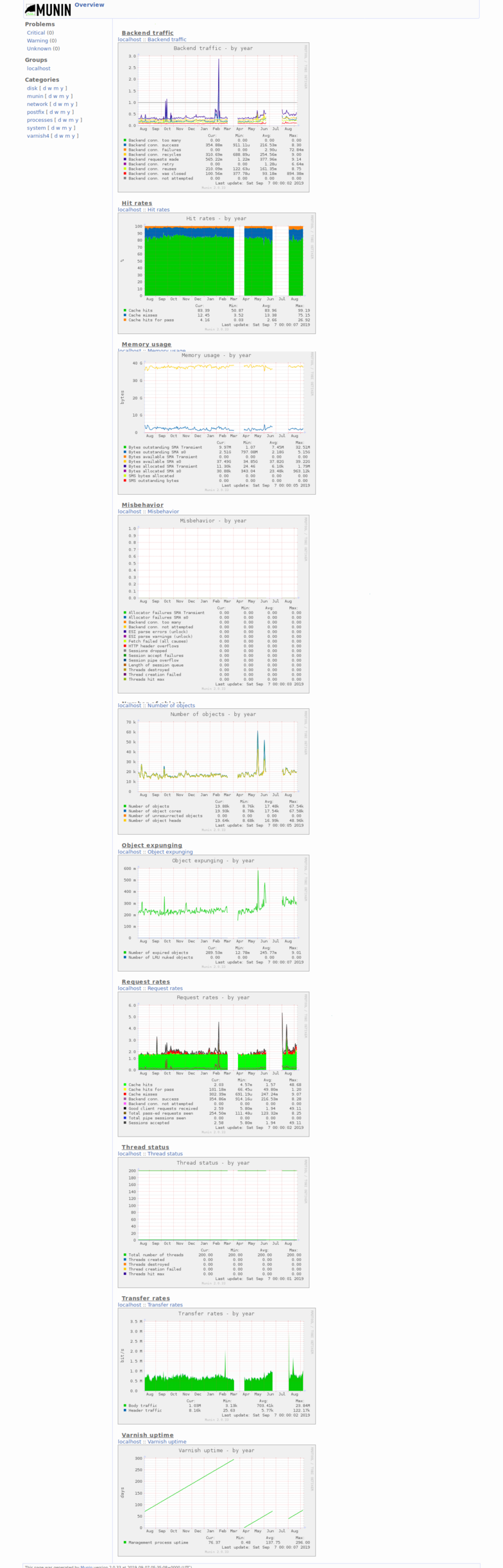

- Marcin asked why the wp backend was slow but the frontend was unaffected & fast

- I reiterated that this was because of the varnish cache, and I decided to grab some screenshots of munin that show a ~80% hit rate (I thought it was 90, but 80 is good enough I suppose)

- I decided to take screenshots to send to him and thought it worthwhile to document this state on the wiki as well

- Created Category: Munin Graphs and did some wiki cleanup to make these easier to find

...

Fri Aug 30, 2019

- OSE Social Media Research

- discussion with Marcin about adding plugins to clearly indicate which social media accounts are our official ones && to add buttons to "share" our pages on people's social media

Thr Aug 29, 2019

- Marcin emailed me back about the phplist imports; it was him. I asked him to document the procedure he followed for importing users into phplist.

- After the documentation is up, I'll test the process using a new email address that I own and importing it into phplist. I will confirm that the new user does *not* receive emails until they've clicked a confirmation link. The first email should also state that the user agrees to our Privacy Policy by clicking the link.

- ...

- I got a concerning email from ossec alerts indicating that someone was attempting to brute force into our vsftpd server

OSSEC HIDS Notification.

2019 Aug 28 14:37:42

Received From: opensourceecology->/var/log/secure

Rule: 5551 fired (level 10) -> "Multiple failed logins in a small period of time."

Src IP: 2002:7437:e7f9::7437:e7f9

User: opensourceecology.org

Portion of the log(s):

Aug 28 14:37:42 opensourceecology vsftpd[28344]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology.org rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:37:38 opensourceecology vsftpd[28339]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:37:31 opensourceecology vsftpd[28336]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecologyorg rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:37:25 opensourceecology vsftpd[28318]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology.org rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:37:21 opensourceecology vsftpd[28309]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:36:34 opensourceecology vsftpd[28281]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecologyorg rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:36:29 opensourceecology vsftpd[28277]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology.org rhost=2002:7437:e7f9::7437:e7f9

Aug 28 14:36:26 opensourceecology vsftpd[28275]: pam_unix(vsftpd:auth): authentication failure; logname= uid=0 euid=0 tty=ftp ruser=opensourceecology rhost=2002:7437:e7f9::7437:e7f9

...

--END OF NOTIFICATION

- first of all, I didn't even know we had a fucking ftp server running on this server (I inherited it).

- our iptables should block all traffic flowing to services like this, anyway.

[root@opensourceecology 2019-08-29]# iptables-save

# Generated by iptables-save v1.4.21 on Thu Aug 29 05:21:07 2019

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [67:4254]

-A INPUT -s 173.234.159.250/32 -j DROP

-A INPUT -i lo -j ACCEPT

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p icmp -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 4443 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 4444 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT

-A INPUT -m limit --limit 5/min -j LOG --log-prefix "iptables IN denied: " --log-level 7

-A INPUT -j DROP

-A FORWARD -s 173.234.159.250/32 -j DROP

-A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A OUTPUT -s 127.0.0.1/32 -d 127.0.0.1/32 -j ACCEPT

-A OUTPUT -d 213.133.98.98/32 -p udp -m udp --dport 53 -j ACCEPT

-A OUTPUT -d 213.133.99.99/32 -p udp -m udp --dport 53 -j ACCEPT

-A OUTPUT -d 213.133.100.100/32 -p udp -m udp --dport 53 -j ACCEPT

-A OUTPUT -m limit --limit 5/min -j LOG --log-prefix "iptables OUT denied: " --log-level 7

-A OUTPUT -p tcp -m owner --uid-owner 48 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 27 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 995 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 994 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 993 -j DROP

COMMIT

# Completed on Thu Aug 29 05:21:07 2019

- But, yeah, I did fuck up: we don't have ip6tables rules established

[root@opensourceecology 2019-08-29]# ip6tables-save

# Generated by ip6tables-save v1.4.21 on Thu Aug 29 05:21:09 2019

*filter

:INPUT ACCEPT [7078:1038253]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [7648:16015796]

COMMIT

# Completed on Thu Aug 29 05:21:09 2019

[root@opensourceecology 2019-08-29]#

- I confirmed that we do have a vsftpd server installed. It is running. And it is listening to port 21 bound to all interfaces :facepalm:

[root@opensourceecology 2019-08-29]# rpm -qa | grep -i ftp

ncftp-3.2.5-7.el7.x86_64

vsftpd-3.0.2-22.el7.x86_64

[root@opensourceecology 2019-08-29]# ps -ef | grep -i ftp

root 1087 1 0 Jun22 ? 00:00:00 /usr/sbin/vsftpd /etc/vsftpd/vsftpd.conf

root 19582 18347 0 05:22 pts/17 00:00:00 grep --color=auto -i ftp

[root@opensourceecology 2019-08-29]# ss -plan | grep -i ftp

tcp LISTEN 0 32 :::21 :::* users:(("vsftpd",pid=1087,fd=3))

[root@opensourceecology 2019-08-29]#

- so I will remove the ftp service and establish a whitelist for incoming ports via ip6tables for ipv6. But first, let's ensure that ossec dealt with this properly. We can see from the logs that (at Aug 28 14:37:42 UTC) ossec automatically banned the ip address that initiated the brute force attack, and lifted the ban again at 14:48:12 UTC. That seems to have worked; we didn't get subsequent emails of future attacks from this address or any others. Also no further FIM emails or anything else that would indicate compromise. This is why we use the ossec HIDS.

[root@opensourceecology ossec]# grep -irl '2002:7437:e7f9::7437:e7f9' *

logs/active-responses.log

[root@opensourceecology ossec]# grep -irC10 '2002:7437:e7f9::7437:e7f9' logs/active-responses.log

Wed Aug 28 06:27:13 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 2a01:4f8:211:188d::2 1566973002.1538179 31508

Wed Aug 28 06:27:13 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 2a01:4f8:211:188d::2 1566973002.1538179 31508

Wed Aug 28 07:08:30 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 2a01:4f8:211:188d::2 1566976110.1721493 31508

Wed Aug 28 07:08:30 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 2a01:4f8:211:188d::2 1566976110.1721493 31508

Wed Aug 28 07:19:00 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 2a01:4f8:211:188d::2 1566976110.1721493 31508

Wed Aug 28 07:19:00 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 2a01:4f8:211:188d::2 1566976110.1721493 31508

Wed Aug 28 07:19:05 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 2a01:4f8:211:188d::2 1566976745.1749263 31508

Wed Aug 28 07:19:05 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 2a01:4f8:211:188d::2 1566976745.1749263 31508

Wed Aug 28 07:29:35 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 2a01:4f8:211:188d::2 1566976745.1749263 31508

Wed Aug 28 07:29:35 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 2a01:4f8:211:188d::2 1566976745.1749263 31508

Wed Aug 28 14:37:42 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 2002:7437:e7f9::7437:e7f9 1567003062.3419064 5551

Wed Aug 28 14:37:42 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 2002:7437:e7f9::7437:e7f9 1567003062.3419064 5551

Wed Aug 28 14:48:12 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 2002:7437:e7f9::7437:e7f9 1567003062.3419064 5551

Wed Aug 28 14:48:12 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 2002:7437:e7f9::7437:e7f9 1567003062.3419064 5551

Wed Aug 28 14:50:47 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 108.59.8.70 1567003847.3482130 31508

Wed Aug 28 14:50:47 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 108.59.8.70 1567003847.3482130 31508

Wed Aug 28 15:01:17 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 108.59.8.70 1567003847.3482130 31508

Wed Aug 28 15:01:17 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 108.59.8.70 1567003847.3482130 31508

Wed Aug 28 19:28:50 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 167.71.220.178 1567020530.5190967 31104

Wed Aug 28 19:28:50 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 167.71.220.178 1567020530.5190967 31104

Wed Aug 28 19:29:38 UTC 2019 /var/ossec/active-response/bin/host-deny.sh add - 2002:a747:dcb2::a747:dcb2 1567020578.5205329 31104

Wed Aug 28 19:29:38 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh add - 2002:a747:dcb2::a747:dcb2 1567020578.5205329 31104

Wed Aug 28 19:40:08 UTC 2019 /var/ossec/active-response/bin/host-deny.sh delete - 2002:a747:dcb2::a747:dcb2 1567020578.5205329 31104

Wed Aug 28 19:40:08 UTC 2019 /var/ossec/active-response/bin/firewall-drop.sh delete - 167.71.220.178 1567020530.5190967 31104

[root@opensourceecology ossec]#
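To read the ban window for one address out of this log without scanning it by eye, a small filter could be used. This is a minimal sketch, assuming the field layout seen in the active-responses.log lines above (time in the 4th field, script path in the 7th, action in the 8th, address in the 10th); `ban_events` is a hypothetical helper name, not something ossec ships.

```shell
#!/usr/bin/env bash
# Sketch: print the firewall-drop add/delete times for one IP address.
# Assumes the active-responses.log layout shown above:
#   Wed Aug 28 14:37:42 UTC 2019 <script> <add|delete> - <ip> <alert id> <rule id>
# ban_events is a hypothetical helper name, not part of ossec.
ban_events() {
  local ip="$1"
  # $7 = script path, $8 = action, $10 = address, $4 = HH:MM:SS
  awk -v ip="$ip" '$7 ~ /firewall-drop\.sh$/ && $10 == ip { print $8, $4 }'
}

# Usage, from the ossec directory as in the transcript above:
#   ban_events '2002:7437:e7f9::7437:e7f9' < logs/active-responses.log
```

For the log lines quoted above, this yields an "add" at 14:37:42 and a "delete" at 14:48:12 for the attacking address.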

- it's only 05:30 UTC, but there's no entries regarding ftp from today in the ossec alerts

[root@opensourceecology ossec]# date

Thu Aug 29 05:34:10 UTC 2019

[root@opensourceecology ossec]# grep ftp logs/alerts/alerts.log

[root@opensourceecology ossec]#

- let's check how often our ftp server was under attack this month; we see there's 'ftp' entries in 14 out of 28 full days of ossec alerts

[root@opensourceecology Aug]# for file in $(ls -1 *.gz); do if zcat $file | grep -iq 'ftp' ; then echo $file; fi ; done;

ossec-alerts-01.log.gz

ossec-alerts-02.log.gz

ossec-alerts-05.log.gz

ossec-alerts-08.log.gz

ossec-alerts-10.log.gz

ossec-alerts-13.log.gz

ossec-alerts-14.log.gz

ossec-alerts-19.log.gz

ossec-alerts-20.log.gz

ossec-alerts-21.log.gz

ossec-alerts-25.log.gz

ossec-alerts-26.log.gz

ossec-alerts-27.log.gz

ossec-alerts-28.log.gz

[root@opensourceecology Aug]# files=$( for file in $(ls -1 *.gz); do if zcat $file | grep -iq 'ftp' ; then echo $file; fi ; done; )

[root@opensourceecology Aug]# echo $files | tr " " "\n" | wc -l

14

[root@opensourceecology Aug]#

- some of these are false-positives matching filenames (ie: '/w/images/4/4e/FTprusa2020jan2016.pdf' at 'Ftprusa')

** Alert 1564648424.2378254: - web,accesslog,

2019 Aug 01 08:33:44 opensourceecology->/var/log/httpd/www.opensourceecology.org/access_log

Rule: 31101 (level 5) -> 'Web server 400 error code.'

Src IP: 127.0.0.1

127.0.0.1 - - [01/Aug/2019:08:33:43 +0000] "GET /w/images/4/4e/FTprusa2020jan2016.pdf HTTP/1.1" 403 238 "-" "Mozilla/5.0 (compatible; Google AppsViewer; http://drive.google.com)"
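Because a bare case-insensitive 'ftp' match also catches filenames like the one above, a narrower pattern gives a tighter count of the days with real vsftpd alerts. This is a minimal sketch, assuming the vsftpd entries contain the literal string `vsftpd[` as in the /var/log/secure lines quoted earlier; `count_logs_matching` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: count how many gzipped alert logs contain a literal string.
# count_logs_matching is a hypothetical helper name.
count_logs_matching() {
  local pattern="$1"; shift
  local count=0 file
  for file in "$@"; do
    # -F matches the pattern literally; -q stops at the first hit
    if zcat -- "$file" | grep -qF -- "$pattern"; then
      count=$((count + 1))
    fi
  done
  echo "$count"
}

# Usage, from the directory holding the month's alerts:
#   count_logs_matching 'vsftpd[' ossec-alerts-*.log.gz
```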

- of the actual brute force attacks on vsftpd, most of these attempts are done on users that do not exist, such as 'opensourceecology' or 'opensourceecologyorg', 'www.opensourceecology.org', etc

- this appears to be a typical "spray & pray" attack, not specifically targeting OSE

- of course, these attacks didn't continue long before the IDS banned the ip address. I couldn't find a single attempt to login with an actual username on our system before the bruteforce attack was stopped by the ossec active response banning the ip address.

- here's the list of usernames used in login attempts against our ftp server over the month of August; none of these users exist

[root@opensourceecology Aug]# for file in $files; do zcat $file | grep -iC5 'vsftp' | grep 'ruser' | awk '{print $13}'; done | sort -u | uniq

ruser=opensourceecology

ruser=opensourceecologyorg

ruser=opensourceecology.org

ruser=wiki

ruser=wiki.opensourceecology.org

ruser=www.opensourceecology.org

[root@opensourceecology Aug]#
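The claim that none of these users exist can be checked mechanically rather than by eye. This is a minimal sketch, assuming the `ruser=<name>` lines produced by the pipeline above are fed on stdin; `existing_attempted_users` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: read "ruser=<name>" lines on stdin and print every name that
# has an entry in the given passwd file. No output means none exist.
# existing_attempted_users is a hypothetical helper name.
existing_attempted_users() {
  local passwd_file="$1" line user
  while IFS= read -r line; do
    user="${line#ruser=}"
    [ -n "$user" ] || continue
    # Exact match on the first passwd field, so dots in a name are not
    # treated as regex wildcards.
    if awk -F: -v u="$user" '$1 == u { found = 1 } END { exit (found ? 0 : 1) }' "$passwd_file"; then
      echo "$user"
    fi
  done
}

# Usage: <the pipeline above> | existing_attempted_users /etc/passwd
```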

- ok, fuck ftp. First I uninstalled vsftpd

- ps and ss look sane now

[root@opensourceecology 2019-08-29]# rpm -qa | grep -i ftp

ncftp-3.2.5-7.el7.x86_64

[root@opensourceecology 2019-08-29]# ps -ef | grep -i ftp

root 19646 18347 0 05:24 pts/17 00:00:00 grep --color=auto -i ftp

[root@opensourceecology 2019-08-29]# ss -plan | grep -i ftp

[root@opensourceecology 2019-08-29]#
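The log does not record the removal command itself; on CentOS 7 it would presumably be something along the lines of `yum remove vsftpd`, but that is an assumption. The "looks sane" check can be scripted so it is repeatable. This is a minimal sketch that parses `ss -plan` style output as shown earlier; `port_is_listening` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: read `ss -plan` style output on stdin and exit 0 if any socket
# in LISTEN state is bound to the given port, else exit 1.
# port_is_listening is a hypothetical helper name.
port_is_listening() {
  awk -v port="$1" '
    {
      for (i = 1; i <= NF; i++) {
        if ($i == "LISTEN" && (i + 3) <= NF) {
          addr = $(i + 3)       # local address:port column
          sub(/.*:/, "", addr)  # keep only what follows the last colon
          if (addr == port) found = 1
        }
      }
    }
    END { exit (found ? 0 : 1) }'
}

# Usage:
#   ss -plan | port_is_listening 21 && echo "something still listens on port 21"
```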

- but to plug anything else unexpected, I setup the ipv6 iptables properly

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -i lo -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p ipv6-icmp -m icmp6 --icmpv6-type 128 -m limit --limit 1/sec -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p ipv6-icmp -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 4443 -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 4444 -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A INPUT -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -i lo -j ACCEPT

ip6tables v1.4.21: Can't use -i with OUTPUT

Try `ip6tables -h' or 'ip6tables --help' for more information.

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 48 -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 27 -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 995 -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 994 -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 993 -j DROP

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -m limit --limit 5/min -j LOG --log-prefix "iptables OUT denied: " --log-level 7

[root@opensourceecology 2019-08-29]# ip6tables -A OUTPUT -p tcp -m owner --uid-owner 994 -j DROP

[root@opensourceecology 2019-08-29]#

[root@opensourceecology 2019-08-29]# ip6tables-save

# Generated by ip6tables-save v1.4.21 on Thu Aug 29 06:13:35 2019

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

-A INPUT -i lo -j ACCEPT

-A INPUT -p ipv6-icmp -m icmp6 --icmpv6-type 128 -m limit --limit 1/sec -j ACCEPT

-A INPUT -p ipv6-icmp -j ACCEPT

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 4443 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 4444 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT

-A INPUT -j DROP

-A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A OUTPUT -m limit --limit 5/min -j LOG --log-prefix "iptables OUT denied: " --log-level 7

-A OUTPUT -p tcp -m owner --uid-owner 48 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 27 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 995 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 994 -j DROP

-A OUTPUT -p tcp -m owner --uid-owner 993 -j DROP

COMMIT

# Completed on Thu Aug 29 06:13:35 2019

[root@opensourceecology 2019-08-29]#
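The root cause here was that the ipv4 and ipv6 rule sets were maintained separately and drifted apart. One way to keep them in step is to generate the INPUT whitelist for both commands from a single port list. This is a minimal sketch, not the procedure that was actually used: it only prints the rules for review instead of applying them, it omits the rate-limited echo-request and LOG rules, and `print_input_whitelist` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print (do not apply) an INPUT whitelist for iptables or
# ip6tables from one shared port list.
# print_input_whitelist is a hypothetical helper name.
print_input_whitelist() {
  local cmd="$1"; shift
  local icmp_proto="icmp" port
  # The ICMP protocol name differs between the two address families.
  if [ "$cmd" = "ip6tables" ]; then
    icmp_proto="ipv6-icmp"
  fi
  echo "$cmd -A INPUT -i lo -j ACCEPT"
  echo "$cmd -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT"
  echo "$cmd -A INPUT -p $icmp_proto -j ACCEPT"
  for port in "$@"; do
    echo "$cmd -A INPUT -p tcp -m state --state NEW -m tcp --dport $port -j ACCEPT"
  done
  # Everything not whitelisted above is dropped.
  echo "$cmd -A INPUT -j DROP"
}

# Usage, with the ports from the rules above:
#   for cmd in iptables ip6tables; do
#     print_input_whitelist "$cmd" 80 443 4443 4444 32415
#   done
```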

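- the OUTPUT blocks were largely pulled from the iptables (ipv4) rules. The uids in these rules belong to apache (48), mysql (27), varnish (995), hitch (994), and nginx (993). polkitd and chrony also show up in the grep below, but only because their gids (995 and 994) match; their uids (997 and 996) are not blocked.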

[root@opensourceecology 2019-08-29]# grep -E '48|27|993|994|995' /etc/passwd

polkitd:x:997:995:User for polkitd:/:/sbin/nologin

chrony:x:996:994::/var/lib/chrony:/sbin/nologin

apache:x:48:48:Apache:/usr/share/httpd:/sbin/nologin

mysql:x:27:27:MariaDB Server:/var/lib/mysql:/sbin/nologin

varnish:x:995:991:Varnish Cache:/var/lib/varnish:/sbin/nologin

hitch:x:994:990::/var/lib/hitch:/sbin/nologin

nginx:x:993:989:Nginx web server:/var/lib/nginx:/sbin/nologin

[root@opensourceecology 2019-08-29]#
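The grep above matches these numbers anywhere on the line, so it also prints accounts whose gid (4th field) happens to match. An exact match on the uid column (3rd field) avoids that. This is a minimal sketch; `users_for_uids` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print "uid name" for each account whose UID column (3rd
# passwd field) equals one of the given uids exactly.
# users_for_uids is a hypothetical helper name.
users_for_uids() {
  local passwd_file="$1"; shift
  local uid
  for uid in "$@"; do
    awk -F: -v uid="$uid" '$3 == uid { print $3, $1 }' "$passwd_file"
  done
}

# Usage, with the uids from the --uid-owner rules:
#   users_for_uids /etc/passwd 48 27 995 994 993
```

Run against the passwd entries shown above, this lists apache, mysql, varnish, hitch, and nginx, and leaves out polkitd and chrony.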

- and I persisted these changes

[root@opensourceecology 2019-08-29]# service iptables save

iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]

[root@opensourceecology 2019-08-29]# service ip6tables save

ip6tables: Saving firewall rules to /etc/sysconfig/ip6table[ OK ]

[root@opensourceecology 2019-08-29]#

- I sent an email to Marcin about this incident, my response, and my changes to ossec alerts over the past several weeks that helped elucidate this issue

Wed Aug 28, 2019

- I got a bunch of emails indicating subscribers were added to our phplist db this morning

- I forwarded this email to Marcin asking him to confirm that he was the one that did the import

- I also asked Marcin what process he followed for this import. iirc, there is some checkbox to toggle whether or not they should be automatically added to the list or if they first must approve the add (which would include them agreeing to our privacy policy). This is critical for GDPR.

- I also asked Marcin where the subscribers' data came from, and mentioned that we should document the data source of every import, such as a scan/picture of a "newsletter signup sheet" form with rows of users' PII. Also important for GDPR.

Tue Aug 27, 2019

- Marcin said the best approach to the comments on wordpress would be to integrate Discourse into wordpress, and he said he'd ask Lex to look into doing a POC for Discourse. Hopefully by the time his POC is done we can

- ...

- Looks like hetzner cloud supports user-specific accounts, so I created an account on hetzner for myself using my OSE email address. I sent an invitation to myself, and gained access to the 'ose-dev' "project" in hetzner cloud.

- ..

- continuing from yesterday, because our dev server will be holding clients' PII, passwords, etc *and* it will be a server that may run untested software that's not properly hardened, the dev server should be accessible only via an intranet; it should not be accessible on the web except for openvpn being exposed

- note that the longer term solution to this hodge-podge VPN network may be to use a mesh VPN (ie: zerotier), but for a VPN network of exactly one node I'll just install openvpn on our dev node and have clients connect to it directly https://serverfault.com/questions/980743/vpn-connection-between-distinct-cloud-instances

- unfortunately everyone these days knows of VPNs as a tool to bypass geo locking and censorship, and to provide relatively anonymous browsing hidden from your spying ISP. While we're using the same openvpn software, we are *not* using it for this purpose. Unfortunately, most guides on setting up OpenVPN on CentOS7 are for the former purpose; they describe how to setup the firewall and routes such that all of a VPN client's network traffic flows through the VPN and out of the Internet-facing ethernet port on the OpenVPN server. This is *not* what we want. We only want traffic destined for the dev node's OpenVPN tun IP Address to flow over the VPN

- somehow I'm going to have to update the nginx config files (with sed?) to change all of the ip addresses (and server_names?) to which nginx binds to for each vhost to the OpenVPN IP Address instead of our prod server's Internet IP Address (138.201.84.243:443, [2a01:4f8:172:209e::2]:443)

- this will also add complications for ssl. And if the server sits behind an intranet, then it won't be able to get cert updates. If we keep the server_names the same and simply copy the certs from prod, then this may not be an issue

- or to simplify everything, we could also just cut nginx/varnish out of the loop and go directly to the apache backend. I don't like this, however. What if we need to test our varnish/nginx config? That's one reason we need this dev node to begin with..
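The sed idea for rebinding the nginx vhosts could look roughly like this. It is a minimal sketch under stated assumptions: the VPN address is passed in as an argument (it has not been chosen at this point), the prod IPv6 `listen` lines are simply dropped on the assumption that the VPN will be IPv4-only, and `rebind_listen` is a hypothetical helper name. It works as a filter on stdin so the result can be diffed before any file is overwritten.

```shell
#!/usr/bin/env bash
# Sketch: rewrite nginx listen addresses from the prod Internet IP to a
# VPN IP. Reads a vhost config on stdin and writes the result to stdout.
# rebind_listen is a hypothetical helper name.
rebind_listen() {
  local vpn_ip="$1"
  # 1st expression: swap the prod IPv4 address for the VPN address.
  # 2nd expression: delete lines bound to the prod IPv6 address
  #                 (assumption: the VPN is IPv4-only).
  sed -e "s/138\.201\.84\.243:/${vpn_ip}:/g" \
      -e '/\[2a01:4f8:172:209e::2]:/d'
}

# Usage (the vhost filename and VPN address are placeholders):
#   rebind_listen "$VPN_IP" < some_vhost.conf | diff some_vhost.conf -
```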

- another design decision: should our openvpn server be run in bridged or routed mode? Well, I think bridged would only make sense if it could get an internal IP but since our dev server is actually internet-facing (it doesn't *actually* sit behind an OSE private router giving it a local NAT'd IP address), this wouldn't make sense. We only have 1 IP address, and that's Internet-facing. So a bridge would just mean getting an additional Internet-facing IP address--which doesn't make sense. Therefore, we'll use the simpler routing-based (dev tun) config. Our OpenVPN server will get an ip address on some private 10.X.Y.0/24 netblock. And our OpenVPN clients will get another IP Address on that same 10.X.Y.0/24 netblock. All communication across this virtual "local" network will be routed across these IP addresses by OpenVPN

- another design decision: what netblock should we use for our VPN network? Let's use 10.X.Y.0/24, and select X and Y randomly.

[maltfield@osedev1 ~]$ echo $(( ( $RANDOM % 255 ) + 1 ));

241

[maltfield@osedev1 ~]$ echo $(( ( $RANDOM % 255 ) + 1 ));

189

[maltfield@osedev1 ~]$

- ok, we'll use 10.241.189.0/24 for our VPN.
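The two random draws above can be wrapped into one step. This is a minimal sketch using the same `$RANDOM` arithmetic as the transcript, so each octet lands in the range 1 to 255; `random_vpn_netblock` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: pick a random private 10.X.Y.0/24 netblock, with X and Y each
# drawn from 1..255 as in the transcript above.
# random_vpn_netblock is a hypothetical helper name.
random_vpn_netblock() {
  # Both draws happen in the same shell so they use successive values
  # of $RANDOM.
  local x=$(( (RANDOM % 255) + 1 ))
  local y=$(( (RANDOM % 255) + 1 ))
  echo "10.${x}.${y}.0/24"
}

# Usage:
#   random_vpn_netblock
```

Picking the block at random lowers the odds of colliding with a client's home or office LAN, which tend to sit on low, well-known ranges.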

- this is probably the guide we want to follow https://openvpn.net/community-resources/how-to/

- it also has a section on 2FA https://openvpn.net/community-resources/how-to/#how-to-add-dual-factor-authentication-to-an-openvpn-configuration-using-client-side-smart-cards

- and a section on hardening, which includes info on how to make the openvpn server run as an unprivileged user && in a chrooted env, increasing asymmetric (RSA) key size from 1024 to 2048, and increasing symmetric (blowfish) key size from 128 to 256 https://openvpn.net/community-resources/how-to/#hardening-openvpn-security

- there's also this whole hardening guide on the wiki https://community.openvpn.net/openvpn/wiki/Hardening

- it looks like cryptostorm used a --tls-cipher=TLS-DHE-RSA-WITH-AES-256-CBC-SHA --cipher=AES-256-CBC --dh=2048-bit --ca=2048-bit RSA TLS certificate --cert=2048-bit RSA TLS certificate

- in 2018, they switched to --tls-cipher=TLS-ECDHE-RSA-WITH-CHACHA20-POLY1305-SHA256, --cipher=AES-256-GCM, --dh=8192-bit, --ca=521-bit EC (~15360-bit RSA), --cert=8192-bit RSA

- and cryptostorm also uses this for ECC --tls-cipher=TLS-ECDHE-ECDSA-WITH-CHACHA20-POLY1305-SHA256, --cipher=AES-256-GCM, --dh=8192-bit, --ca=521-bit EC (~15360-bit RSA), --cert=521-bit EC (~15360-bit RSA) https://www.cryptostorm.is/blog/new-features

- NordVPN uses AES 256 CBC, AES-256-GCM, SHA2-384, and 3072-bit DH https://nordvpn.com/faq/

- AirVPN uses 4096-bit RSA keys, 4096-bit DH keys, --cipher=AES-256-GCM, and --tls-cipher=TLS-DHE-RSA-WITH-AES-256-GCM-SHA384 or TLS-DHE-RSA-WITH-CAMELLIA-256-CBC-SHA (as well as others) https://airvpn.org/specs/

- I could find no clear page describing the crypto used by mullvad on their site or github https://mullvad.net/en/

- I asked them for this info via twitter https://twitter.com/MichaelAltfield/status/1166262361046552577

Mon Aug 26, 2019

- Marcin asked me what we can do about the 3,327 spam comments built-up into our queue on osemain

- I pointed out that there's no easy/free solution for filtering *existing* spam comments

- I provided 4 suggestions

- We install a free spam filter plugin for wordpress like Antispam Bee and just delete all existing comments, knowing that we may be permanently deleting non-spam comments as well

- We pay akismet $60/year/site and get the gold-standard for spam moderation in our comments

- We disable comments on our blog all-together (possibly with the intention of eventually re-enabling them using an external service better suited to facilitate discussions like Discourse for our comments as other projects have done) https://wordpress.org/plugins/wp-discourse/

- We try to pay akismet $5 for a first month of service to cleanup our existing spam, then cancel our plan, install Antispam Bee, and hope we don't encounter backlog of comments again.

- ...

- I checked our hetzner cloud "usage" section, and it looks like our bill so far is 0.65 EUR for 1 week.

- ...

- ok, so returning to this rsync from prod to dev. ultimately, I think this will have to be automated. Obviously we want our dev server to be as secure as prod, but development is necessarily messy; iptables may have holes poked into it; sketchy software may get installed on it; even unsketchy software will be installed and be in the process of being configured--not yet hardened. Of course, this means that the dev server's chances of being owned are significantly greater than the prod server's. Therefore, this sync should be done such that the dev server does *not* have access to the prod server. I think what I'll do is have prod setup with a root:root 0400 ssh key stored to /root/.ssh/id_rsa (which would probably be unencrypted for automation's sake) that has ssh access to the dev server via a user that has NOPASSWD sudo permissions. Therefore, if prod is owned then dev is owned, but if dev is owned then prod is not *necessarily* owned. That said, if dev is owned, then we'd probably lose a lot of super-critical stuff like passwords to the mysql database, our database itself--with (encrypted) user passwords and PII.

- hmm, I think the only solution here is to make the dev server locked-up in an intranet.

- Perhaps we can install openvpn on our dev server and setup redundant rules both in the hetzner wui "security groups" and locally via iptables to block all incoming ports to anything except openvpn. From openvpn, we can probably also do fancy stuff that would route dev-specific dns names over the local network patched to nginx, varnish, or directly to the apache backend. This could be good..

- If I made even ssh not publicly accessible, then I'd have to connect to the openvpn server from the prod server for this sync; I'd have to test that..

- maybe I should actually run the vpn on the prod server and have it chained to the dev server? That would make a more logical architecture if we add future dev or staging nodes. All this is documented here https://openvpn.net/community-resources/how-to/

- other relevant reading on site-to-site VPN connections, though I'm not sure if it would apply where every site is itself just [a] a distinct vps instance in the cloud mixed with [b] dedicated baremetal servers and [c] developers' laptops https://openvpn.net/vpn-server-resources/site-to-site-routing-explained-in-detail/

- I published this as a question on serverfault.com to crowd source https://serverfault.com/questions/980743/vpn-connection-between-distinct-cloud-instances

- this question answers my question on whether or not I need to add a distinct server just for the openvpn server https://serverfault.com/questions/448873/openvpn-based-vpn-server-on-same-system-its-protecting-feasible?rq=1

- this is an example setup for a typical site-to-site config using OpenVPN https://community.openvpn.net/openvpn/wiki/RoutedLans

- it looks like another option is tinc https://www.linode.com/docs/networking/vpn/how-to-set-up-tinc-peer-to-peer-vpn/

- ffs, that project's website is currently offline https://tinc-vpn.org/

- looks like what I want is also known as a "private mesh network" or "mesh p2p vpn". There's an open-source project for this called zerotier.com. It's free for up-to 100 clients. That's perfect: if we ever broke more than a dozen clients, we'd probably just migrate it all into distinct VPC networks with peering and dedicated OpenVPN nodes. We'd definitely have that infrastructure setup by 100 nodes.. https://blog.reconinfosec.com/build-a-private-mesh-network-for-free/

- it looks like zerotier performs better than openvpn too https://zerotier.com/benchmarking-zerotier-vs-openvpn-and-linux-ipsec/

- this is relevant too and it recommends tinc, peervpn, and openmesher https://serverfault.com/questions/685820/are-there-any-distributed-mesh-like-p2p-vpns

- peer vpn seems pretty small & hasn't been updated since 2016 https://peervpn.net/

- same with openmesher; last updated nearly 2 years ago https://github.com/heyaaron/openmesher/

- openmesher mentioned TunnelDigger, which is actively developed https://github.com/wlanslovenija/tunneldigger

- this guide does a good breakdown of VPN topologies: site-to-site, hub-and-spoke, and meshed http://www.internet-computer-security.com/VPN-Guide/VPN-Topologies.html

- so far I think a mesh network with zerotier is the best option. it would be *so* much easier to setup & maintain, and it also doesn't create a single-point-of-failure as the hub-and-spoke solution with openvpn would. The biggest con here is that it's not in the repos, and it's not as popular as openvpn.

- If I go the mesh route, I should consider that I still want the prod server somewhat isolated from the dev server. If I connected the dev & prod servers using zerotier, for example, I think that the prod server would be no more exposed to the dev server than it was before--assuming the servers aren't setup to bind to the new VPN IP address (or that they're not setup to bind to all interfaces, which I did as part of hardening). But the dev server would need to be modified to bind all its servers on the VPN IP address to expose its services to the VPN mesh. I would need to confirm this theory.

- ok, I think I'm jumping down a rabbit hole here. I'll revisit mesh vpn connections when we get >2 servers. Most likely, that won't happen for at least another year. For now, I'll proceed with just running an openvpn server on osedev1 and blocking all services (including ssh) except openvpn. I'll make client certs for the prod server, myself, and other devs. That should make the dev server super-safe. I'll just have to make sure that the sync of prod data to osedev doesn't overwrite the openvpn or iptables rules.

Thr Aug 22, 2019

- Marcin responded to my inquiry about licensing of software on github; per this article, software should be GPLv3 and everything else dual-licensed CC-BY-SA & GFDL

- I did some cleanup of wiki articles on licensing, inter-linking them with categories and "See Also" sections

- Marcin said he'd put together a video showcasing kiwix browsing our zim-ified offline wiki for a blog post if Christian made a write-up for the blog; this is going to be good

- Christian asked about automating the zim creation; I said we should do this on the dev server after it's setup in some time (weeks/months)

Wed Aug 21, 2019

- Marcin made me an owner of the "OpenSourceEcology" github organization, and I successfully gained access

- I created the new 'ansible' repository

- I changed the "display name" of our "OpenSourceEcology" org from "Marcin Jakubowski" to "Open Source Ecology"

- I did some cleanup on our wiki to make the "Github" page easier to find and to more clearly indicate which org on github is ours

- I noticed that none of our repos on github have licenses, and that CC does not recommend using their licenses for software anyway; I asked Marcin what license we should use for our repos

- I also verified our domain 'www.opensourceecology.org' to make our repo more legitimate. This involved adding a NONCE to a new TXT record '_github-challenge-OpenSourceEcology.www.opensourceecology.org.' in our DNS at cloudflare

- Cloudflare is fast! I was able to verify it immediately
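- A minimal sketch of re-checking that TXT record from a shell, assuming `dig` is available. The helper name `github_challenge_record` is hypothetical; the record-name pattern is copied from the record named above.

```shell
#!/bin/sh
# Build the TXT record name used for GitHub's domain verification of an org.
# The pattern (_github-challenge-<org>.<domain>.) is taken from the record
# named in this log; the function name is made up for this sketch.
github_challenge_record() {
  printf '_github-challenge-%s.%s.\n' "$1" "$2"
}

# Print the record name for the org and domain from this log.
github_challenge_record OpenSourceEcology www.opensourceecology.org

# To query it (needs network access, so it is left commented out here):
# dig +short TXT "$(github_challenge_record OpenSourceEcology www.opensourceecology.org)"
```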

- I also added a description to the org indicating that it's the official github org for OSE and linking back to the wiki article that states this https://wiki.opensourceecology.org/wiki/Github

Tue Aug 20, 2019

- emails with Christian about the offline wiki zim archive

- dev server research. I think I should write an ansible playbook that launches a fresh install of a dev server, gets a list of the packages & versions installed on the prod server, installs them on the dev server, then syncs data from prod to dev and then does the necessary ip address & hostname changes to nginx/NetworkManager/etc configs. Ideally both would be controlled by puppet, but--without segregation of stateful & stateless servers from each other and other issues of stale provisioning configs as documented earlier--I don't see that as being realistic https://wiki.opensourceecology.org/wiki/OSE_Server#Provisioning

- added '/etc/keepass/.passwords.kdbx.lock' to the "ignore" list of file changes in '/var/ossec/etc/ossec.conf'
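- A minimal sketch of the "get the package list from prod, install what's missing on dev" step, assuming each server's list was saved with `rpm -qa`. The function name and the file names in the example are hypothetical, and this says nothing about whether the repos still carry the exact versions.

```shell
#!/bin/sh
# List packages that appear in the first file but not in the second.
# Each file holds one package per line, e.g. the output of `rpm -qa` saved on
# each server. The function name and file layout are assumptions of this sketch.
missing_packages() {
  tmpdir="$(mktemp -d)" || return 1
  sort -u "$1" > "${tmpdir}/prod"
  sort -u "$2" > "${tmpdir}/dev"
  # comm -23 keeps only the lines unique to the first (sorted) file
  comm -23 "${tmpdir}/prod" "${tmpdir}/dev"
  rm -rf "${tmpdir}"
}

# Example (run on the dev server after copying prod's list over):
#   rpm -qa > dev.txt
#   missing_packages prod.txt dev.txt | xargs -r yum install -y
```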

...

- time to create a dev server in hetzner cloud; first I logged into the hetzner robot wui and went to add a cloud server, but it told me to visit the "hetzner cloud console" https://console.hetzner.cloud

- I created a new "project" called "ose-dev"

- we want this new cloud server to be as close to the prod server as possible. I checked robot and found that our prod server is located in 'FSN1-DC8' which appears to be in Falkenstein https://wiki.hetzner.de/index.php/Benennung_Rechenzentren/en

- I added a new server in 'Falkenstein' running "CentOS 7" of type "CX11" (the cheapest) including a 10G volume (the smallest possible; we'll have to increase this to 50G in the near future) named "ose-dev-volume-1" of type "ext4". I did not add a network. I did add my ssh public key. I named the server "osedev1"

- Interesting, hetzner cloud supports cloud-init. imho, this would be great as a basic hardening & bootstrap step that https://cloudinit.readthedocs.io/en/latest/

- hardens sshd and sets it to a non-standard port

- sets up basic iptables rules to block all but the new ssh port

- creates my user and installs my ssh key

- ... after that, the rest could be done with ansible scripts stored in our git repo
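- A minimal sketch of what such a cloud-init bootstrap could look like, written as a generator that only prints the first-boot script. cloud-init runs user-data beginning with '#!' as a script; the function name is hypothetical, and the sshd hardening step is left as a placeholder, so as written the firewall rules would block ssh until sshd is actually moved to the new port.

```shell
#!/bin/sh
# Print a first-boot script for cloud-init. cloud-init treats user-data that
# begins with '#!' as a script and runs it once on first boot. Nothing is
# executed here; this only generates text to supply as the server's user-data.
# Arguments: username, ssh port, ssh public key (all supplied by the caller).
make_user_data() {
  cat <<EOF
#!/bin/bash
# create the admin user and install their ssh key
useradd -m $1
install -d -m 0700 -o $1 -g $1 /home/$1/.ssh
echo '$3' > /home/$1/.ssh/authorized_keys
chown $1:$1 /home/$1/.ssh/authorized_keys
chmod 0600 /home/$1/.ssh/authorized_keys
# placeholder: drop in the hardened sshd_config (Port $2, AllowGroups, ...)
# block everything inbound except loopback, established traffic and ssh
iptables -A INPUT -i lo -j ACCEPT
iptables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
iptables -A INPUT -p tcp -m state --state NEW -m tcp --dport $2 -j ACCEPT
iptables -A INPUT -j DROP
EOF
}

# Example: make_user_data maltfield 32415 "$(cat ~/.ssh/id_rsa.pub)" > user-data.sh
```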

- it only took a few seconds before the hetzner console told me the server was created. Then I clicked the hamburger menu next to the instance and selected "Console". It opened a cli window showing the machine booting. After another 30 sec, I was prompted for the user login. But, of course, I didn't know the credentials. It wasn't even clear what user my ssh key was added to.

- it looks like the new server was put in 'fsn1-dc14', so a different DC from our prod server, but at least it's in the same city.

- in the console, we also apparently have the option to "RESET ROOT PASSWORD" under the "RESCUE" tab

- the ip address of osedev1 is 195.201.233.113 = static.113.233.201.195.clients.your-server.de

- it looks like we can create snapshots for €0.01/GB/month. We can create a max of 7 snapshots. After 7, it deletes the oldest snapshot and replaces it with the new one.

- the hetzner console includes some pretty good (though basic) graphs. they appear to only go back 30 days.

- I tried to ssh into the server using root and my personal ssh key. I got in. Yikes, it allows root to login by default?

- according to this, root won't be given a password unless an ssh key was *not* provided https://github.com/hetznercloud/terraform-provider-hcloud/issues/19

- I thought about creating a distinct set of passwords for this server from prod, but my intention is to keep it as similar to prod as possible (like a staging server) so that we can verify if POCs will break existing services or not. For that, I'm going to be copying the configs from prod and keeping it here, so the passwords will all be the same, anyway. Until we grow to the point where we can segregate services on distinct servers (or at least stateless from stateful servers) and make provisioning a thing (where passwords would be added to config files from a vault at provisioning time), our staging server will just have to be a near-copy of our prod server. And, therefore, it should be treated with the same level of caution regarding privacy of data and security of secrets/passwords. The only reduced concern will be availability; we can take down staging when doing test upgrades/POCs/etc without concern of damaging the production services. Again, more info on why we don't have a provisioning solution here https://wiki.opensourceecology.org/wiki/OSE_Server#Provisioning

- I want to store ansible playbooks in git, but I can't create repos in our OSE git org. I emailed marcin asking him which is our canonical github org page and asked him to make me an owner of it so I can create a repo for ansible

- I did a basic bootstrap so I could get ansible working

[root@osedev1 ~]# useradd maltfield [root@osedev1 ~]# echo "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDGNYjR7UKiJSAG/AbP+vlCBqNfQZ2yuSXfsEDuM7cEU8PQNJyuJnS7m0VcA48JRnpUpPYYCCB0fqtIEhpP+szpMg2LByfTtbU0vDBjzQD9mEfwZ0mzJsfzh1Nxe86l/d6h6FhxAqK+eG7ljYBElDhF4l2lgcMAl9TiSba0pcqqYBRsvJgQoAjlZOIeVEvM1lyfWfrmDaFK37jdUCBWq8QeJ98qpNDX4A76f9T5Y3q5EuSFkY0fcU+zwFxM71bGGlgmo5YsMMdSsW+89fSG0652/U4sjf4NTHCpuD0UaSPB876NJ7QzeDWtOgyBC4nhPpS8pgjsnl48QZuVm6FNDqbXr9bVk5BdntpBgps+gXdSL2j0/yRRayLXzps1LCdasMCBxCzK+lJYWGalw5dNaIDHBsEZiK55iwPp0W3lU9vXFO4oKNJGFgbhNmn+KAaW82NBwlTHo/tOlj2/VQD9uaK5YLhQqAJzIq0JuWZWFLUC2FJIIG0pJBIonNabANcN+vq+YJqjd+JXNZyTZ0mzuj3OAB/Z5zS6lT9azPfnEjpcOngFs46P7S/1hRIrSWCvZ8kfECpa8W+cTMus4rpCd40d1tVKzJA/n0MGJjEs2q4cK6lC08pXxq9zAyt7PMl94PHse2uzDFhrhh7d0ManxNZE+I5/IPWOnG1PJsDlOe4Yqw== michael@opensourceecology.org" > /home/maltfield/.ssh/authorized_keys -bash: /home/maltfield/.ssh/authorized_keys: No such file or directory [root@osedev1 ~]# su - maltfield [maltfield@osedev1 ~]$ pwd /home/maltfield [maltfield@osedev1 ~]$ mkdir .ssh [maltfield@osedev1 ~]$ echo "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDGNYjR7UKiJSAG/AbP+vlCBqNfQZ2yuSXfsEDuM7cEU8PQNJyuJnS7m0VcA48JRnpUpPYYCCB0fqtIEhpP+szpMg2LByfTtbU0vDBjzQD9mEfwZ0mzJsfzh1Nxe86l/d6h6FhxAqK+eG7ljYBElDhF4l2lgcMAl9TiSba0pcqqYBRsvJgQoAjlZOIeVEvM1lyfWfrmDaFK37jdUCBWq8QeJ98qpNDX4A76f9T5Y3q5EuSFkY0fcU+zwFxM71bGGlgmo5YsMMdSsW+89fSG0652/U4sjf4NTHCpuD0UaSPB876NJ7QzeDWtOgyBC4nhPpS8pgjsnl48QZuVm6FNDqbXr9bVk5BdntpBgps+gXdSL2j0/yRRayLXzps1LCdasMCBxCzK+lJYWGalw5dNaIDHBsEZiK55iwPp0W3lU9vXFO4oKNJGFgbhNmn+KAaW82NBwlTHo/tOlj2/VQD9uaK5YLhQqAJzIq0JuWZWFLUC2FJIIG0pJBIonNabANcN+vq+YJqjd+JXNZyTZ0mzuj3OAB/Z5zS6lT9azPfnEjpcOngFs46P7S/1hRIrSWCvZ8kfECpa8W+cTMus4rpCd40d1tVKzJA/n0MGJjEs2q4cK6lC08pXxq9zAyt7PMl94PHse2uzDFhrhh7d0ManxNZE+I5/IPWOnG1PJsDlOe4Yqw== michael@opensourceecology.org" > .ssh/authorized_keys [maltfield@osedev1 ~]$ chmod 0700 .ssh [maltfield@osedev1 ~]$ chmod 0600 .ssh/authorized_keys [maltfield@osedev1 ~]$
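- The first attempt above failed because '~/.ssh' didn't exist yet. A minimal sketch of doing the same steps in one go; the function name is hypothetical, and when run as root for another user it still needs a follow-up chown.

```shell
#!/bin/sh
# Append a public key to <home>/.ssh/authorized_keys, creating <home>/.ssh
# first so the redirect cannot fail with "No such file or directory".
# When run as root for another user, follow up with
# `chown -R user:user <home>/.ssh` (not done here).
# Arguments: home directory, public key line.
install_authorized_key() {
  mkdir -p "$1/.ssh" || return 1
  chmod 0700 "$1/.ssh"
  printf '%s\n' "$2" >> "$1/.ssh/authorized_keys"
  chmod 0600 "$1/.ssh/authorized_keys"
}

# Example: install_authorized_key /home/maltfield "ssh-rsa AAAA... michael@opensourceecology.org"
```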

- I confirmed that I could ssh-in using my key as maltfield

user@ose:~/ansible$ ssh maltfield@195.201.233.113 whoami maltfield user@ose:~/ansible$ ssh maltfield@195.201.233.113 hostname osedev1 user@ose:~/ansible$

- then I set a password for 'maltfield', and added 'maltfield' to the 'wheel' group to permit sudo

[root@osedev1 ~]# passwd maltfield Changing password for user maltfield. New password: Retype new password: passwd: all authentication tokens updated successfully. [root@osedev1 ~]# gpasswd -a maltfield wheel Adding user maltfield to group wheel [root@osedev1 ~]#

- and I confirmed that I could sudo

user@ose:~/ansible$ ssh maltfield@195.201.233.113 Last login: Tue Aug 20 14:13:50 2019 [maltfield@osedev1 ~]$ groups maltfield wheel [maltfield@osedev1 ~]$ sudo su - [sudo] password for maltfield: Last login: Tue Aug 20 14:10:51 CEST 2019 on pts/1 Last failed login: Tue Aug 20 14:11:57 CEST 2019 from 45.119.209.91 on ssh:notty There was 1 failed login attempt since the last successful login. [root@osedev1 ~]#

- next, I made a backup of the existing sshd config on the new dev server, copied our hardened ssh config from prod in to replace it, added a new group called 'sshaccess' and added 'maltfield' to it, and restarted ssh

- first on prod

user@ose:~$ ssh opensourceecology.org Last login: Tue Aug 20 11:58:33 2019 from 142.234.200.164 [maltfield@opensourceecology ~]$ sudo su - [sudo] password for maltfield: Last login: Tue Aug 20 12:19:07 UTC 2019 on pts/12 [root@opensourceecology ~]# cp /etc/ssh/sshd_config /home/user/maltfield/ cp: cannot create regular file ‘/home/user/maltfield/’: No such file or directory [root@opensourceecology ~]# cp /etc/ssh/sshd_config /home/maltfield/ [root@opensourceecology ~]# chown maltfield /home/maltfield/sshd_config [root@opensourceecology ~]#

- then on dev

user@ose:~/ansible$ ssh -A maltfield@195.201.233.113

Last login: Tue Aug 20 14:15:33 2019 from 142.234.200.164

[maltfield@osedev1 ~]$ ssh-add -l

error fetching identities for protocol 1: agent refused operation

4096 SHA256:nbMcwqUz/ouvQwlNXbJtwijJ/0omJKeq5Nqzkus/sNQ guttersnipe@guttersnipe (RSA)

[maltfield@osedev1 ~]$ scp -P32415 opensourceecology.org:sshd_config .

The authenticity of host '[opensourceecology.org]:32415 ([2a01:4f8:172:209e::2]:32415)' can't be established.

ECDSA key fingerprint is SHA256:HclF8ZQOjGqx+9TmwL111kZ7QxgKkoEw8g3l2YxV0gk.

ECDSA key fingerprint is MD5:cd:87:b1:bb:c1:3e:d1:d1:d4:5d:16:c9:e8:30:6a:71.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[opensourceecology.org]:32415,[2a01:4f8:172:209e::2]:32415' (ECDSA) to the list of known hosts.

sshd_config 100% 4455 1.5MB/s 00:00

[maltfield@osedev1 ~]$ sudo su -

[sudo] password for maltfield:

Last login: Tue Aug 20 14:15:40 CEST 2019 on pts/1

Last failed login: Tue Aug 20 14:20:25 CEST 2019 from 45.119.209.91 on ssh:notty

There was 1 failed login attempt since the last successful login.

[root@osedev1 ~]# cd /etc/ssh

[root@osedev1 ssh]# mv sshd_config sshd_config.`date "+%Y%m%d_%H%M%S"`.orig

[root@osedev1 ssh]# mv /home/maltfield/sshd_config .

[root@osedev1 ssh]# ls -lah

total 620K

drwxr-xr-x. 2 root root 4.0K Aug 20 14:27 .

drwxr-xr-x. 72 root root 4.0K Aug 20 14:14 ..

-rw-r--r--. 1 root root 569K Apr 11 2018 moduli

-rw-r--r--. 1 root root 2.3K Apr 11 2018 ssh_config

-rw-------. 1 maltfield maltfield 4.4K Aug 20 14:27 sshd_config

-rw-------. 1 root root 3.9K Aug 20 12:16 sshd_config.20190820_142740.orig

-rw-r-----. 1 root ssh_keys 227 Aug 20 12:16 ssh_host_ecdsa_key

-rw-r--r--. 1 root root 162 Aug 20 12:16 ssh_host_ecdsa_key.pub

-rw-r-----. 1 root ssh_keys 387 Aug 20 12:16 ssh_host_ed25519_key

-rw-r--r--. 1 root root 82 Aug 20 12:16 ssh_host_ed25519_key.pub

-rw-r-----. 1 root ssh_keys 1.7K Aug 20 12:16 ssh_host_rsa_key

-rw-r--r--. 1 root root 382 Aug 20 12:16 ssh_host_rsa_key.pub

[root@osedev1 ssh]# chown root:root sshd_config

[root@osedev1 ssh]# ls -lah

total 620K

drwxr-xr-x. 2 root root 4.0K Aug 20 14:27 .

drwxr-xr-x. 72 root root 4.0K Aug 20 14:14 ..

-rw-r--r--. 1 root root 569K Apr 11 2018 moduli

-rw-r--r--. 1 root root 2.3K Apr 11 2018 ssh_config

-rw-------. 1 root root 4.4K Aug 20 14:27 sshd_config

-rw-------. 1 root root 3.9K Aug 20 12:16 sshd_config.20190820_142740.orig

-rw-r-----. 1 root ssh_keys 227 Aug 20 12:16 ssh_host_ecdsa_key

-rw-r--r--. 1 root root 162 Aug 20 12:16 ssh_host_ecdsa_key.pub

-rw-r-----. 1 root ssh_keys 387 Aug 20 12:16 ssh_host_ed25519_key

-rw-r--r--. 1 root root 82 Aug 20 12:16 ssh_host_ed25519_key.pub

-rw-r-----. 1 root ssh_keys 1.7K Aug 20 12:16 ssh_host_rsa_key

-rw-r--r--. 1 root root 382 Aug 20 12:16 ssh_host_rsa_key.pub

[root@osedev1 ssh]# grep AllowGroups sshd_config

AllowGroups sshaccess

[root@osedev1 ssh]# grep sshaccess /etc/group

[root@osedev1 ssh]# groupadd sshaccess

[root@osedev1 ssh]# gpasswd -a maltfield sshaccess

Adding user maltfield to group sshaccess

[root@osedev1 ssh]# grep sshaccess /etc/group

sshaccess:x:1001:maltfield

[root@osedev1 ssh]# systemctl restart sshd

[root@osedev1 ssh]# logout

[maltfield@osedev1 ~]$ logout

Connection to 195.201.233.113 closed.

user@ose:~/ansible$ ssh -A maltfield@195.201.233.113

ssh: connect to host 195.201.233.113 port 22: Connection refused

user@ose:~/ansible$ ssh -p 32415 maltfield@195.201.233.113

Last login: Tue Aug 20 14:17:07 2019 from 142.234.200.164

[maltfield@osedev1 ~]$ echo win

win

[maltfield@osedev1 ~]$ sudo su -

[sudo] password for maltfield:

Last login: Tue Aug 20 14:27:12 CEST 2019 on pts/1

Last failed login: Tue Aug 20 14:28:43 CEST 2019 from 45.119.209.91 on ssh:notty

There was 1 failed login attempt since the last successful login.

[root@osedev1 ~]# echo woot

woot

[root@osedev1 ~]# logout

[maltfield@osedev1 ~]$ logout

Connection to 195.201.233.113 closed.

user@ose:~/ansible$
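- The copied config restricts logins with 'AllowGroups sshaccess', so restarting sshd before that group exists (and contains my user) would lock me out. A minimal sketch of checking that first; the function name is hypothetical and it only handles plain group names, not AllowGroups wildcard patterns.

```shell
#!/bin/sh
# Print every group listed on an AllowGroups line of an sshd_config that has
# no entry in the given group file (normally /etc/group).
# Assumes plain group names; AllowGroups patterns with wildcards are not handled.
# Arguments: path to sshd_config, path to group file.
missing_allow_groups() {
  for g in $(awk 'tolower($1) == "allowgroups" { for (i = 2; i <= NF; i++) print $i }' "$1"); do
    grep -q "^${g}:" "$2" || printf '%s\n' "$g"
  done
}

# Example, before restarting sshd:
#   missing_allow_groups /etc/ssh/sshd_config /etc/group
#   sshd -t    # sshd's own config syntax check
```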

- finally cleanup on prod

user@ose:~$ ssh opensourceecology.org Last login: Tue Aug 20 12:19:23 2019 from 142.234.200.164 [maltfield@opensourceecology ~]$ ls sshd_config sshd_config [maltfield@opensourceecology ~]$ rm sshd_config [maltfield@opensourceecology ~]$ ls sshd_config ls: cannot access sshd_config: No such file or directory [maltfield@opensourceecology ~]$ logout Connection to opensourceecology.org closed. user@ose:~$

- I confirmed that I could no longer login as root

user@ose:~$ ssh -i .ssh/id_rsa.ose root@195.201.233.113 ssh: connect to host 195.201.233.113 port 22: Connection refused user@ose:~$ ssh -p 32415 -i .ssh/id_rsa.ose root@195.201.233.113 Permission denied (publickey). user@ose:~$

- and, final step of the pre-ansible bootstrap: iptables

- first I confirmed that there were no existing rules

[root@osedev1 ~]# iptables-save # Generated by iptables-save v1.4.21 on Tue Aug 20 14:35:43 2019 *filter :INPUT ACCEPT [69:4056] :FORWARD ACCEPT [0:0] :OUTPUT ACCEPT [38:3704] COMMIT # Completed on Tue Aug 20 14:35:43 2019 [root@osedev1 ~]# ip6tables-save # Generated by ip6tables-save v1.4.21 on Tue Aug 20 14:35:55 2019 *filter :INPUT ACCEPT [0:0] :FORWARD ACCEPT [0:0] :OUTPUT ACCEPT [0:0] COMMIT # Completed on Tue Aug 20 14:35:55 2019 [root@osedev1 ~]#

- then I added the basic iptables rules for ipv4 to block everything in except ssh

[root@osedev1 ~]# iptables -A INPUT -i lo -j ACCEPT [root@osedev1 ~]# iptables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT [root@osedev1 ~]# iptables -A INPUT -p icmp -j ACCEPT [root@osedev1 ~]# iptables -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT [root@osedev1 ~]# iptables -A INPUT -j DROP [root@osedev1 ~]# iptables-save # Generated by iptables-save v1.4.21 on Tue Aug 20 14:39:12 2019 *filter :INPUT ACCEPT [0:0] :FORWARD ACCEPT [0:0] :OUTPUT ACCEPT [15:1180] -A INPUT -i lo -j ACCEPT -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT -A INPUT -p icmp -j ACCEPT -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT -A INPUT -j DROP COMMIT # Completed on Tue Aug 20 14:39:12 2019 [root@osedev1 ~]#

- and I added the same for ipv6

[root@osedev1 ~]# ip6tables -A INPUT -i lo -j ACCEPT [root@osedev1 ~]# ip6tables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT [root@osedev1 ~]# ip6tables -A INPUT -p icmp -j ACCEPT [root@osedev1 ~]# ip6tables -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT [root@osedev1 ~]# ip6tables -A INPUT -j DROP [root@osedev1 ~]# ip6tables-save # Generated by ip6tables-save v1.4.21 on Tue Aug 20 14:41:12 2019 *filter :INPUT ACCEPT [0:0] :FORWARD ACCEPT [0:0] :OUTPUT ACCEPT [0:0] -A INPUT -i lo -j ACCEPT -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT -A INPUT -p icmp -j ACCEPT -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT -A INPUT -j DROP COMMIT # Completed on Tue Aug 20 14:41:12 2019 [root@osedev1 ~]#
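- One thing worth double-checking in the ipv6 rules above: with ip6tables, '-p icmp' matches IPv4's ICMP protocol number, while ICMPv6 is a separate protocol named 'ipv6-icmp'. So the rule as saved probably does not accept ICMPv6 (which includes neighbor discovery). A minimal sketch that prints the same rule set for either family with the matching ICMP protocol; the function name is hypothetical and nothing is applied.

```shell
#!/bin/sh
# Print (not run) the basic INPUT rules used above for IPv4 or IPv6.
# Arguments: address family (4 or 6), ssh port.
input_rules() {
  case "$1" in
    4) cmd="iptables";  icmp="icmp" ;;
    6) cmd="ip6tables"; icmp="ipv6-icmp" ;;  # ICMPv6, not IPv4 ICMP
    *) echo "family must be 4 or 6" >&2; return 1 ;;
  esac
  echo "${cmd} -A INPUT -i lo -j ACCEPT"
  echo "${cmd} -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT"
  echo "${cmd} -A INPUT -p ${icmp} -j ACCEPT"
  echo "${cmd} -A INPUT -p tcp -m state --state NEW -m tcp --dport $2 -j ACCEPT"
  echo "${cmd} -A INPUT -j DROP"
}

# Example: review the output of `input_rules 6 32415`, then run the printed
# commands as root.
```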

- note that I had to install 'iptables-services' in order to save these rules

[root@osedev1 ~]# service iptables save The service command supports only basic LSB actions (start, stop, restart, try-restart, reload, force-reload, status). For other actions, please try to use systemctl. [root@osedev1 ~]# systemctl save iptables Unknown operation 'save'. [root@osedev1 ~]# yum install iptables-services Loaded plugins: fastestmirror Determining fastest mirrors * base: mirror.wiuwiu.de * extras: mirror.netcologne.de * updates: mirror.netcologne.de base | 3.6 kB 00:00:00 extras | 3.4 kB 00:00:00 updates | 3.4 kB 00:00:00 (1/2): extras/7/x86_64/primary_db | 215 kB 00:00:01 (2/2): updates/7/x86_64/primary_db | 7.4 MB 00:00:01 Resolving Dependencies --> Running transaction check ---> Package iptables-services.x86_64 0:1.4.21-28.el7 will be installed --> Finished Dependency Resolution Dependencies Resolved =============================================================================================================== Package Arch Version Repository Size =============================================================================================================== Installing: iptables-services x86_64 1.4.21-28.el7 base 52 k Transaction Summary =============================================================================================================== Install 1 Package Total download size: 52 k Installed size: 26 k Is this ok [y/d/N]: y Downloading packages: iptables-services-1.4.21-28.el7.x86_64.rpm | 52 kB 00:00:05 Running transaction check Running transaction test Transaction test succeeded Running transaction Installing : iptables-services-1.4.21-28.el7.x86_64 1/1 Verifying : iptables-services-1.4.21-28.el7.x86_64 1/1 Installed: iptables-services.x86_64 0:1.4.21-28.el7 Complete! [root@osedev1 ~]# service iptables save iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ] [root@osedev1 ~]#

- that's enough; the rest I can do from ansible!

- I created a new ansible dir with a super simple inventory file and successfully got it to ping

user@ose:~/ansible$ cat hosts

195.201.233.113 ansible_port=32415 ansible_user=maltfield

user@ose:~/ansible$ ansible -i hosts all -m ping

195.201.233.113 | SUCCESS => {

"changed": false,

"ping": "pong"

}

user@ose:~/ansible$

- ok, I think I'm actually just going to rsync a ton of stuff over, cross my fingers, and reboot. I'll exclude the following dirs from the rsync https://linuxadmin.io/hot-clone-linux-server/

- /dev

- /sys

- /proc

- /boot/

- /etc/sysconfig/network*

- /tmp

- /var/tmp

- /etc/fstab

- /etc/mtab

- /etc/mdadm.conf
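- A minimal sketch of feeding that exclude list to rsync via '--exclude-from'. The function name and the '/root/sync.exclude' path are hypothetical; the example is a dry run ('-n') and leaves out the sudo-on-the-remote-side question entirely.

```shell
#!/bin/sh
# Write the exclude list from this log to a file, one pattern per line.
# With a source of '/', patterns starting with '/' are anchored at the root
# of the transfer.
# Argument: path of the exclude file to write.
write_excludes() {
  cat > "$1" <<'EOF'
/dev
/sys
/proc
/boot/
/etc/sysconfig/network*
/tmp
/var/tmp
/etc/fstab
/etc/mtab
/etc/mdadm.conf
EOF
}

# Example dry run (-n) from prod to dev; host, port and user as used in this log:
#   write_excludes /root/sync.exclude
#   rsync -avn --exclude-from=/root/sync.exclude -e 'ssh -p 32415' / maltfield@195.201.233.113:/
```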

- I think it makes more sense for the prod server to push to the dev server (using my ssh forwarded key) than for dev to pull from prod

- many of these files are actually root-only, so we must be root on both systems but since we don't permit root to ssh, we need a way to sudo from 'maltfield' to 'root' on the dev system. I tested that this works

[maltfield@opensourceecology syncToDev]$ ssh -p 32415 maltfield@195.201.233.113 sudo whoami The authenticity of host '[195.201.233.113]:32415 ([195.201.233.113]:32415)' can't be established. ECDSA key fingerprint is SHA256:U99nmyy5WJZMQ6qQL7vofldQJcpztHzCEzO6OuHuLd4. ECDSA key fingerprint is MD5:3c:37:06:50:4d:48:0c:f4:c1:fe:98:d8:99:fa:7a:14. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '[195.201.233.113]:32415' (ECDSA) to the list of known hosts. sudo: no tty present and no askpass program specified [maltfield@opensourceecology syncToDev]$ ssh -t -p 32415 maltfield@195.201.233.113 sudo whoami [sudo] password for maltfield: root Connection to 195.201.233.113 closed. [maltfield@opensourceecology syncToDev]$

- and I did a simple test rsync of a new dir at /root/rsyncTest/

[maltfield@opensourceecology syncToDev]$ sudo su - [sudo] password for maltfield: Last login: Tue Aug 20 12:36:43 UTC 2019 on pts/0 [root@opensourceecology ~]# cd /root [root@opensourceecology ~]# mkdir rsyncTest [root@opensourceecology ~]# echo "test1" > /root/rsyncTest/testFile [root@opensourceecology ~]# logout

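- but I couldn't quite get the rsync syntax correct: rsync parsed '32415/root/' as the remote path rather than as a port, and I also typo'd the IP address as .133 instead of .113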

[maltfield@opensourceecology syncToDev]$ rsync -avvvv --progress --rsync-path="sudo rsync" /home/maltfield/syncToDev/dirOwnedByMaltfield/ maltfield@195.201.233.133:32415/root/

cmd=<NULL> machine=195.201.233.133 user=maltfield path=32415/root/

cmd[0]=ssh cmd[1]=-l cmd[2]=maltfield cmd[3]=195.201.233.133 cmd[4]=sudo rsync cmd[5]=--server cmd[6]=-vvvvlogDtpre.iLsf cmd[7]=. cmd[8]=32415/root/

opening connection using: ssh -l maltfield 195.201.233.133 "sudo rsync" --server -vvvvlogDtpre.iLsf . 32415/root/

note: iconv_open("UTF-8", "UTF-8") succeeded.

packet_write_wait: Connection to 138.201.84.243 port 32415: Broken pipe

user@ose:~$

- I think this is compounded by ssh agent forwarding issues with stale env vars after reconnecting to a screen session; I fixed that with grabssh and fixssh

https://samrowe.com/wordpress/ssh-agent-and-gnu-screen/
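- A minimal sketch of the grabssh/fixssh idea: save the ssh-related env vars when logging in, then source them inside the stale screen window. The variable list is an assumption modelled on the linked article, and values containing double quotes are not handled.

```shell
#!/bin/sh
# grabssh: save the current ssh-related environment to a file as export lines.
# fixssh (run in a stale screen window) is then just: . <that file>
# Argument: path of the file to write.
grabssh() {
  : > "$1"
  for var in SSH_CLIENT SSH_TTY SSH_AUTH_SOCK SSH_CONNECTION DISPLAY; do
    # indirect lookup of the variable named in $var (empty if unset)
    eval "val=\${${var}-}"
    printf 'export %s="%s"\n' "${var}" "${val}" >> "$1"
  done
}

# Example:
#   grabssh "$HOME/.fixssh"     # right after ssh-ing in, before `screen -r`
#   . "$HOME/.fixssh"           # inside the old screen window
```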

[maltfield@opensourceecology syncToDev]$ rsync -e 'ssh -p 32415' -av --progress dirOwnedByMaltfield/ maltfield@195.201.233.113: sending incremental file list ./ testFileOwnedByMaltfield 6 100% 0.00kB/s 0:00:00 (xfer#1, to-check=0/2) sent 134 bytes received 34 bytes 112.00 bytes/sec total size is 6 speedup is 0.04 [maltfield@opensourceecology syncToDev]$

- adding sudo made it fail; so how can I make the sudo reach back to the $SUDO_USER's env vars to connect to the ssh-agent forwarded by my machine?

[maltfield@opensourceecology syncToDev]$ sudo rsync -e 'ssh -p 32415' -av --progress /home/maltfield/dirOwnedByMaltfield/ maltfield@195.201.233.113: [sudo] password for maltfield: The authenticity of host '[195.201.233.113]:32415 ([195.201.233.113]:32415)' can't be established. ECDSA key fingerprint is SHA256:U99nmyy5WJZMQ6qQL7vofldQJcpztHzCEzO6OuHuLd4. ECDSA key fingerprint is MD5:3c:37:06:50:4d:48:0c:f4:c1:fe:98:d8:99:fa:7a:14. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '[195.201.233.113]:32415' (ECDSA) to the list of known hosts. Permission denied (publickey). rsync: connection unexpectedly closed (0 bytes received so far) [sender] rsync error: unexplained error (code 255) at io.c(605) [sender=3.0.9] [maltfield@opensourceecology syncToDev]$

- ok, apparently I somehow fucked up the permissions on '/home/maltfield/'. When I changed it back from 0775 to 0700 it worked. Note that here I also added the '-E' arg to `sudo` to make it keep the env vars needed to get my forwarded ssh key

[maltfield@opensourceecology syncToDev]$ sudo rsync -e 'ssh -p 32415' -av --progress /home/maltfield/dirOwnedByMaltfield/ maltfield@195.201.233.113: [sudo] password for maltfield: The authenticity of host '[195.201.233.113]:32415 ([195.201.233.113]:32415)' can't be established. ECDSA key fingerprint is SHA256:U99nmyy5WJZMQ6qQL7vofldQJcpztHzCEzO6OuHuLd4. ECDSA key fingerprint is MD5:3c:37:06:50:4d:48:0c:f4:c1:fe:98:d8:99:fa:7a:14. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '[195.201.233.113]:32415' (ECDSA) to the list of known hosts. Permission denied (publickey). rsync: connection unexpectedly closed (0 bytes received so far) [sender] rsync error: unexplained error (code 255) at io.c(605) [sender=3.0.9] [maltfield@opensourceecology syncToDev]$

- trying with sudo on the destination too, but this fails because I can't enter the password

[maltfield@opensourceecology syncToDev]$ sudo -E rsync -e 'ssh -p 32415' --rsync-path="sudo rsync" -av --progress dirOwnedByMaltfield/ maltfield@195.201.233.113:syncToDev/ sudo: no tty present and no askpass program specified rsync: connection unexpectedly closed (0 bytes received so far) [sender] rsync error: error in rsync protocol data stream (code 12) at io.c(605) [sender=3.0.9] [maltfield@opensourceecology syncToDev]$

- I confirmed that I can get sudo to work over plain ssh using the '-t' option

[maltfield@opensourceecology syncToDev]$ ssh -t -p 32415 maltfield@195.201.233.113 sudo whoami [sudo] password for maltfield: root Connection to 195.201.233.113 closed. [maltfield@opensourceecology syncToDev]$

- but it won't let me use this option with rsync

[maltfield@opensourceecology syncToDev]$ sudo -E rsync -e 'ssh -t -p 32415' --rsync-path="sudo rsync" -av --progress dirOwnedByMaltfield/ maltfield@195.201.233.113:syncToDev/ Pseudo-terminal will not be allocated because stdin is not a terminal. sudo: no tty present and no askpass program specified rsync: connection unexpectedly closed (0 bytes received so far) [sender] rsync error: error in rsync protocol data stream (code 12) at io.c(605) [sender=3.0.9] [maltfield@opensourceecology syncToDev]$

- I think the solution is just to give a whitelist of users NOPASSWD sudo access. This list would be special users whose private keys are on lockdown. They are encrypted with a damn good passphrase, never stored on servers, etc.
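- A minimal sketch of a narrower variant of that idea: a sudoers rule that allows one user to run only rsync as root without a password. The function name and the drop-in file name are hypothetical. Note that rsync as root can still write any file, so this is not much weaker than full NOPASSWD access.

```shell
#!/bin/sh
# Print a sudoers rule letting one user run one command as root without a
# password.
# Arguments: username, absolute path of the command.
nopasswd_rule() {
  printf '%s ALL=(root) NOPASSWD: %s\n' "$1" "$2"
}

# Example (as root; the drop-in file name is made up for this sketch):
#   nopasswd_rule maltfield /usr/bin/rsync > /etc/sudoers.d/rsync-nopasswd
#   chmod 0440 /etc/sudoers.d/rsync-nopasswd
#   visudo -cf /etc/sudoers.d/rsync-nopasswd   # syntax check
```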

Sun Aug 18, 2019

- I found this shitty Help Desk article on BackBlaze B2's non-payment procedure to determine at what point they delete all our precious backup data if we accidentally don't pay again. Answer: After 1.5 months. In this case, we discovered the issue after 1.25 months; that was close! https://help.backblaze.com/hc/en-us/articles/219361957-B2-Non-payment-procedures

- but the above article says that all the dates are subject to change, so who the fuck knows *shrug*

- I recommended to Marcin that he set up email forwards and filters from our backblaze b2 google account so that he can be notified sooner in the 1-month grace period. Personally, I can't fucking login to that account anymore due to google "security features" even though, yeah, I'm the G Suite Admin *facepalm*

...

- I had some emails with Chris about the wiki archival process, which is also an important component of backups and OSE's mission in general

- same as I did back in 2018-05, I created a new snapshot for him since he lost the old version https://wiki.opensourceecology.org/wiki/Maltfield_Log/2018_Q2#Sat_May_26.2C_2018

# DECLARE VARS

snapshotDestDir='/var/tmp/snapshotOfWikiForChris.20190818'

wikiDbName='osewiki_db'

wikiDbUser='osewiki_user'

wikiDbPass='CHANGEME'

stamp=`date +%Y%m%d_%T`

pushd "${snapshotDestDir}"

time nice mysqldump --single-transaction -u"${wikiDbUser}" -p"${wikiDbPass}" --databases "${wikiDbName}" | gzip -c > "${wikiDbName}.${stamp}.sql.gz"

time nice tar -czvf "${snapshotDestDir}/wiki.opensourceecology.org.vhost.${stamp}.tar.gz" /var/www/html/wiki.opensourceecology.org/*

Sat Aug 17, 2019

- I mentioned to Marcin that we may lose all our precious backup data if our B2 account becomes unpaid (& unnoticed) for some time again, so it may be a good idea to be super-cautious and keep a once-yearly backup at FeF. There's the most recent 2 days of backups live on the server, but they're owned by root (so getting Marcin access would be nontrivial)

[b2user@opensourceecology ~]$ ls -lah /home/b2user/sync total 17G drwxr-xr-x 2 root root 4.0K Aug 16 07:45 . drwx------ 7 b2user b2user 4.0K Aug 16 11:06 .. -rw-r--r-- 1 b2user root 17G Aug 16 07:45 daily_hetzner2_20190816_072001.tar.gpg [b2user@opensourceecology ~]$ ls -lah /home/b2user/sync.old total 17G drwxr-xr-x 2 root root 4.0K Aug 15 07:46 . drwx------ 7 b2user b2user 4.0K Aug 16 11:06 .. -rw-r--r-- 1 b2user root 17G Aug 15 07:46 daily_hetzner2_20190815_072001.tar.gpg [b2user@opensourceecology ~]$

- I asked if Marcin has ~20G somewhere he can store a yearly backup at FeF. Downloading from B2 isn't free (it costs $0.01/G), but at 17G that's under $0.20, so I asked Marcin to try to download one of our server's backups from the B2 WUI for yearly archival on-site at FeF https://wiki.opensourceecology.org/wiki/Backblaze#Download_from_WUI

...

- I decided to update the local rules that silence alerts, changing their level from 0 to 2 to ensure that those events at least get logged

- added another rule = "High amount of POST requests in a small period of time (likely bot)" to the list of overwrites to level 2 (to stop sending email alerts)

- I noticed that I haven't been receiving (real-time) file integrity monitoring alerts, which is pretty critical. A quick check shows that the syscheck db *is* storing info on these files, for example this obi apache config file

[root@opensourceecology ossec]# bin/syscheck_control -i 000 -f /etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf Integrity checking changes for local system 'opensourceecology.org - 127.0.0.1': Detailed information for entries matching: '/etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf' 2017 Nov 24 17:25:33,0 - /etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf File added to the database. Integrity checking values: Size: 1817 Perm: rw-r--r-- Uid: 0 Gid: 0 Md5: a6cddb9c598ddcd7bf08108e7ca53381 Sha1: 5110018b79f0cd7ae12a2ceb14e357b9c0e2804a 2017 Dec 01 19:39:23,0 - /etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf File changed. - 1st time modified. Integrity checking values: Size: >1824 Perm: rw-r--r-- Uid: 0 Gid: 0 Md5: >647f8e256bd3cd4930b7b7bf54967527 Sha1: >c29ad2e25d9481f7b782f1a9ea1d04a15029ab37 2017 Dec 07 16:30:48,2 - /etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf File changed. - 2nd time modified. Integrity checking values: Size: >1831 Perm: rw-r--r-- Uid: 0 Gid: 0 Md5: >61126df96f2249b917becce25566eb85 Sha1: >9426ac50df19dfccd478a7c65b52525472de1349 2017 Dec 07 16:35:59,3 - /etc/httpd/conf.d/00-www.openbuildinginstitute.org.conf File changed. - 3rd time modified. Integrity checking values: Size: >1838 Perm: rw-r--r-- Uid: 0 Gid: 0 Md5: >cdd2f08b506885a44e4d181d503cca19 Sha1: >f6069ce29ac13259f450d79eaff265971bbf6829 [root@opensourceecology ossec]#

- I think this may be because it auto-ignores files after they've changed 3 times. A fix is to change auto_ignore to "no". I also set "alert_new_files" to "yes" https://github.com/ossec/ossec-hids/issues/779

...

<syscheck>

<!-- Frequency that syscheck is executed - default to every 22 hours -->

<frequency>79200</frequency>

<!-- Directories to check (perform all possible verifications) -->

<directories report_changes="yes" realtime="yes" check_all="yes">/etc,/usr/bin,/usr/sbin</directories>

<directories report_changes="yes" realtime="yes" check_all="yes">/bin,/sbin,/boot</directories>

<directories report_changes="yes" realtime="yes" check_all="yes">/var/ossec/etc</directories>

<alert_new_files>yes</alert_new_files>

<auto_ignore>no</auto_ignore>

...

- as soon as I restarted ossec after adding these options, I got a ton of alerts on integrity changes to files like, for example, /etc/shadow! We def always need an email alert sent when /etc/shadow changes..

OSSEC HIDS Notification. 2019 Aug 17 06:03:39 Received From: opensourceecology->syscheck Rule: 550 fired (level 7) -> "Integrity checksum changed." Portion of the log(s): Integrity checksum changed for: '/etc/passwd' ... OSSEC HIDS Notification. 2019 Aug 17 06:01:31 Received From: opensourceecology->syscheck Rule: 550 fired (level 7) -> "Integrity checksum changed." Portion of the log(s): Integrity checksum changed for: '/etc/shadow'

Fri Aug 16, 2019

- added an ossec local rule to prevent email alerts from being triggered on modsec rejecting queries as they're too many and hide more important alerts

- added an ossec local rule to prevent email alerts from being triggered on 500 errors as they're too many and hide more important alerts

Thr Aug 15, 2019

- confirmed that ose backups are working again. we're missing the first-of-the-month, but the past few days look good

[root@opensourceecology ~]# sudo su - b2user Last login: Sat Aug 3 05:57:40 UTC 2019 on pts/0 [b2user@opensourceecology ~]$ ~/virtualenv/bin/b2 ls ose-server-backups daily_hetzner2_20190813_072001.tar.gpg daily_hetzner2_20190814_072001.tar.gpg monthly_hetzner2_20181001_091809.tar.gpg monthly_hetzner2_20181101_091810.tar.gpg monthly_hetzner2_20181201_091759.tar.gpg monthly_hetzner2_20190201_072001.tar.gpg monthly_hetzner2_20190301_072001.tar.gpg monthly_hetzner2_20190401_072001.tar.gpg monthly_hetzner2_20190501_072001.tar.gpg monthly_hetzner2_20190601_072001.tar.gpg monthly_hetzner2_20190701_072001.tar.gpg weekly_hetzner2_20190812_072001.tar.gpg yearly_hetzner2_20190101_111520.tar.gpg [b2user@opensourceecology ~]$

- I also documented these commands on the wiki for future, easy reference https://wiki.opensourceecology.org/wiki/Backblaze

- re-ran backup report

- fixed error in backup report

- re-ran backup report, looks good

[root@opensourceecology backups]# ./backupReport.sh
INFO: email body below
ATTENTION: BACKUPS MISSING!
WARNING: First of this month's backup (20190801) is missing!
See below for the contents of the backblaze b2 bucket = ose-server-backups
daily_hetzner2_20190813_072001.tar.gpg
daily_hetzner2_20190814_072001.tar.gpg
monthly_hetzner2_20181001_091809.tar.gpg
monthly_hetzner2_20181101_091810.tar.gpg
monthly_hetzner2_20181201_091759.tar.gpg
monthly_hetzner2_20190201_072001.tar.gpg
monthly_hetzner2_20190301_072001.tar.gpg
monthly_hetzner2_20190401_072001.tar.gpg
monthly_hetzner2_20190501_072001.tar.gpg
monthly_hetzner2_20190601_072001.tar.gpg
monthly_hetzner2_20190701_072001.tar.gpg
weekly_hetzner2_20190812_072001.tar.gpg
yearly_hetzner2_20190101_111520.tar.gpg
--- Note: This report was generated on 20190815_084159 UTC by script '/root/backups/backupReport.sh'
This script was triggered by '/etc/cron.d/backup_to_backblaze'
For more information about OSE backups, please see the relevant documentation pages on the wiki:
* https://wiki.opensourceecology.org/wiki/Backblaze
* https://wiki.opensourceecology.org/wiki/OSE_Server#Backups
[root@opensourceecology backups]#
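The bucket listing above uses file names of the form `<kind>_hetzner2_<YYYYMMDD>_<HHMMSS>.tar.gpg`. As a rough illustration of how a report could test that listing for a given backup, here is a minimal sketch. It is not the actual contents of backupReport.sh: the `has_backup` helper, the choice of checks, and the wording of the warning are all assumptions made for the example, and the listing is an abridged copy of the one in this log.

```shell
#!/bin/sh
# Hypothetical helper (not taken from backupReport.sh): reads a bucket
# listing on stdin, one file name per line, and succeeds if it contains a
# backup of the given kind ($1) taken on the given date ($2, YYYYMMDD).
has_backup() {
  grep -Eq "^${1}_hetzner2_${2}_[0-9]{6}\.tar\.gpg\$"
}

# Abridged sample listing copied from this log. On the server it would
# presumably come from: ~/virtualenv/bin/b2 ls ose-server-backups
listing='daily_hetzner2_20190813_072001.tar.gpg
daily_hetzner2_20190814_072001.tar.gpg
monthly_hetzner2_20190701_072001.tar.gpg
weekly_hetzner2_20190812_072001.tar.gpg'

# Each entry is "<kind> <date>"; these two checks are illustrative only.
report=''
for check in 'monthly 20190801' 'daily 20190814'; do
  kind=${check% *}
  day=${check#* }
  if ! printf '%s\n' "$listing" | has_backup "$kind" "$day"; then
    report="${report}WARNING: ${kind} backup for ${day} is missing!
"
  fi
done
printf '%s' "$report"
```

With the sample listing, only the monthly check for 20190801 produces a warning, which matches the one gap the real report flagged.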

- confirmed that our accrued bill of $2.57 was paid with Marcin's updates. backups are stable again!

- I emailed Chris asking about the status of the wiki archival process -> archive.org

- I did some fixing to the ossec email alerts

Sat Aug 03, 2019

- we just got an email from the server stating that there were errors with the backups

ATTENTION: BACKUPS MISSING!
WARNING: First of this month's backup (20190801) is missing!
WARNING: First of last month's backup (20190701) is missing!
WARNING: Yesterday's backup (20190802) is missing!
WARNING: The day before yesterday's backup (20190801) is missing!
See below for the contents of the backblaze b2 bucket = ose-server-backups

- note that there were no contents under the "see below for the contents of the backblaze b2 bucket = ose-server-backups"

- this error was generated by the cron job /etc/cron.d/backup_to_backblaze and the script /root/backups/backupReport.sh. This is the first time I've seen it return a critical failure like this.

- the fact that the output is totally empty and it states that we're missing all the backups, even though this is the first time we've received this, suggests it's a false-positive

- I logged into the server, changed to the 'b2user' and ran the command to get a listing of the contents of the bucket, and--sure enough--I got an error

[b2user@opensourceecology ~]$ ~/virtualenv/bin/b2 ls ose-server-backups
ERROR: Unknown error: 403 account_trouble Account trouble. Please log into your b2 account at www.backblaze.com.
[b2user@opensourceecology ~]$

- Per the error message, I logged into the b2 website. As soon as I authenticated, I saw this pop-up

B2 Access Denied Your access to B2 has been suspended because your account has not been in good standing and your grace period has now ended. Please review your account and update your payment method at payment history, or contact tech support for assistance. B2 API Error Error Detail: Account trouble. Please log into your b2 account at www.backblaze.com. B2 API errors happen for a variety of reasons including failures to connect to the B2 servers, unexpectedly high B2 server load and general networking problems. Please see our documentation for more information about specific errors returned for each API call. You should also investigate our easy-to-use command line tool here: https://www.backblaze.com/b2/docs/quick_command_line.html

- I'm sure they sent us alerts to our account email (backblaze at opensourcecology dot org), but I can't fucking check because gmail demands 2fa via sms that isn't tied to the account. ugh.

- I made some improvements to the backupReport.sh script.

- it now redirects STDERR to STDOUT, so any errors are captured & sent with the email where the backup files usually appear

- it now has a footer that includes the timestamp of when the script was executed

- it now lists the path of the script itself, to help future admins debug issues

- it now lists the path of the cron that executes the script, to help future admins debug issues

- it now prints links to two relevant documentation pages on the wiki, to help future admins debug issues

- The new email looks like this

ATTENTION: BACKUPS MISSING!
ERROR: Unknown error: 403 account_trouble Account trouble. Please log into your b2 account at www.backblaze.com.
WARNING: First of this month's backup (20190801) is missing!
WARNING: First of last month's backup (20190701) is missing!
WARNING: Yesterday's backup (20190802) is missing!
WARNING: The day before yesterday's backup (20190801) is missing!
See below for the contents of the backblaze b2 bucket = ose-server-backups
ERROR: Unknown error: 403 account_trouble Account trouble. Please log into your b2 account at www.backblaze.com.
--- Note: This report was generated on 20190803_071847 UTC by script '/root/backups/backupReport.sh'
This script was triggered by '/etc/cron.d/backup_to_backblaze'
For more information about OSE backups, please see the relevant documentation pages on the wiki:
* https://wiki.opensourceecology.org/wiki/Backblaze
* https://wiki.opensourceecology.org/wiki/OSE_Server#Backups
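The improvements listed above (STDERR folded into STDOUT, plus a footer naming the timestamp, script and cron file) can be sketched roughly as follows. This is an illustration of the technique only, not the real backupReport.sh. The `list_bucket` function is a stand-in I made up so the sketch runs without b2 credentials; it imitates the failure seen in this log by writing to STDERR and returning non-zero.

```shell
#!/bin/sh
# Stand-in for the real listing command (assumption for this sketch): it
# mimics the 403 failure by printing to STDERR and failing.
list_bucket() {
  echo "ERROR: Unknown error: 403 account_trouble" >&2
  return 1
}

build_report() {
  echo "See below for the contents of the backblaze b2 bucket = ose-server-backups"
  # 2>&1 is the important part: without it a failed listing leaves this
  # section of the email empty, which is what made the first alert confusing.
  list_bucket 2>&1 || true
  # Footer: when and by what the report was generated.
  echo "--- Note: This report was generated on $(date -u +%Y%m%d_%H%M%S) UTC by script '$0'"
  echo "This script was triggered by '/etc/cron.d/backup_to_backblaze'"
}

body=$(build_report)
printf '%s\n' "$body"
```

Capturing the whole report in a variable first keeps the error text and the footer in one body, so whatever mails the report sends them together.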

Thr Aug 01, 2019

- Discussion with Tom on DR & bus factor contingency planning

- Marcin asked if our server could handle thousands of concurrent editors on the wiki for the upcoming cordless drill microfactory contest

- hetzner2 is basically idle. I'm not sure where its limits are, but we're nowhere near them. With varnish in place, writes are much more costly than concurrent reads. I explained to Marcin that scaling hetzner2 would mean splitting its roles onto separate servers (1+ DB servers, 1+ memcache (db cache) servers, 1+ apache backend servers, 1+ nginx ssl terminator servers, 1+ haproxy load balancing servers, 1+ mail servers, 1+ varnish frontend caching servers, etc)

- I went to check munin, but the graphs were bare! Looks like our server rebooted, and munin wasn't enabled to start at system boot. I fixed that.

[root@opensourceecology init.d]# systemctl enable munin-node
Created symlink from /etc/systemd/system/multi-user.target.wants/munin-node.service to /usr/lib/systemd/system/munin-node.service.
[root@opensourceecology init.d]# systemctl status munin-node
● munin-node.service - Munin Node Server.
   Loaded: loaded (/usr/lib/systemd/system/munin-node.service; enabled; vendor preset: disabled)
   Active: inactive (dead)
   Docs: man:munin-node
[root@opensourceecology init.d]# systemctl start munin-node
[root@opensourceecology init.d]# systemctl status munin-node
● munin-node.service - Munin Node Server.
   Loaded: loaded (/usr/lib/systemd/system/munin-node.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2019-08-01 10:17:09 UTC; 2s ago
   Docs: man:munin-node
   Process: 20015 ExecStart=/usr/sbin/munin-node (code=exited, status=0/SUCCESS)
   Main PID: 20016 (munin-node)
   CGroup: /system.slice/munin-node.service
   └─20016 /usr/bin/perl -wT /usr/sbin/munin-node
Aug 01 10:17:09 opensourceecology.org systemd[1]: Starting Munin Node Server....
Aug 01 10:17:09 opensourceecology.org systemd[1]: Started Munin Node Server..
[root@opensourceecology init.d]#
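To catch this kind of gap before the next reboot, a quick check along these lines could list any service that is not enabled at boot. It is only a sketch: the service list (`munin-node`, `httpd`, `varnish`) is my assumption of what matters on this box, and the `SYSTEMCTL` variable exists purely so the function can be exercised on a machine without systemd.

```shell
#!/bin/sh
# SYSTEMCTL can be overridden for testing; by default the real systemctl is used.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

# Print each service given as an argument that `systemctl is-enabled`
# does not report as enabled (non-zero exit status).
not_enabled() {
  for svc in "$@"; do
    if ! "$SYSTEMCTL" is-enabled "$svc" >/dev/null 2>&1; then
      echo "$svc"
    fi
  done
}

# Only run the demo where systemctl actually exists.
# The service names below are assumptions; adjust them to the real unit names.
if command -v "$SYSTEMCTL" >/dev/null 2>&1; then
  not_enabled munin-node httpd varnish
fi
```

Any name it prints can then be fixed with `systemctl enable <name>`, as was done for munin-node above.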

- yearly graphs are available showing the data cutting off sometime in June

- Marcin said Discourse is no replacement for Askbot, so we should go with both.

- Marcin approved my request for $100/yr for a dev server in the hetzner cloud. I'll provision a CX11 w/ 50G block storage when I get back from my upcoming vacation

Wed Jul 31, 2019

- Discussion with Tom on DR & bus factor contingency planning

- Wiki changes

Tue Jul 30, 2019

1. Stack Exchange & Askbot research
2. I told Marcin that I think Discourse is the best option, but the dependencies may break our prod server, and I asked for a budget for a dev server
3. the dev server wouldn't need to be very powerful, but it does need to have the same setup & disk as prod.
4. I checked the current disk on our prod server, and it has 145G used
   a. 34G are in /home/b2user = redundant backup data.
   b. Wow, there's also 72G in /tmp/systemd-private-2311ab4052754ae68f4a114aefa85295-httpd.service-LqLH0q/tmp/
      a. so this appears to be caused by the "PrivateTmp" feature of systemd, which exists because many apps like httpd will create files in the 777'd /tmp dir. At OSE, I hardened php so that it writes temp files *not* in this dir, anyway. I found several guides on how to disable PrivateTmp, but preventing apache from writing to a 777 dir doesn't sound so bad. https://gryzli.info/2015/06/21/centos-7-missing-phpapache-temporary-files-in-tmp-systemd-private-temp/
      b. better question: how do I just clean up this shit? I tried `systemd-tmpfiles --clean` & `systemd-tmpfiles --remove` to no avail

[root@opensourceecology tmp]# systemd-tmpfiles --clean
[root@opensourceecology tmp]# du -sh /tmp
72G /tmp
[root@opensourceecology tmp]# systemd-tmpfiles --remove
[root@opensourceecology tmp]# du -sh /tmp
72G /tmp
[root@opensourceecology tmp]#

6. I also confirmed that the cleanup above *should* be running every day anyway, via the systemd-tmpfiles-clean timer https://unix.stackexchange.com/questions/489940/linux-files-folders-cleanup-under-tmp

[root@opensourceecology tmp]# systemctl list-timers
NEXT  LEFT  LAST  PASSED  UNIT  ACTIVATES
n/a  n/a  Sat 2019-06-22 03:11:54 UTC  1 months 7 days ago  systemd-readahead-done.timer  systemd-readahead-done
Wed 2019-07-31 03:29:18 UTC  21h left  Tue 2019-07-30 03:29:18 UTC  2h 25min ago  systemd-tmpfiles-clean.timer  systemd-tmpfiles-clean
2 timers listed.
Pass --all to see loaded but inactive timers, too.
[root@opensourceecology tmp]#

8. to make matters worse, it does appear that we have everything on one partition

[root@opensourceecology tmp]# cat /etc/fstab
proc /proc proc defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
tmpfs /dev/shm tmpfs defaults 0 0
sysfs /sys sysfs defaults 0 0
/dev/md/0 none swap sw 0 0
/dev/md/1 /boot ext3 defaults 0 0
/dev/md/2 / ext4 defaults 0 0
[root@opensourceecology tmp]# mount
[root@opensourceecology tmp]# mount
sysfs on /sys type sysfs (rw,relatime)
proc on /proc type proc (rw,relatime)
devtmpfs on /dev type devtmpfs (rw,nosuid,size=32792068k,nr_inodes=8198017,mode=755)
securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)
tmpfs on /dev/shm type tmpfs (rw,relatime)
devpts on /dev/pts type devpts (rw,relatime,gid=5,mode=620,ptmxmode=000)
tmpfs on /run type tmpfs (rw,nosuid,nodev,mode=755)
tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)
cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)
pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)
cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)
cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpuacct,cpu)
cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)
cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_prio,net_cls)
cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)
cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)
cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)
cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)
cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)
cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)
configfs on /sys/kernel/config type configfs (rw,relatime)
/dev/md2 on / type ext4 (rw,relatime,data=ordered)
systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=10157)
debugfs on /sys/kernel/debug type debugfs (rw,relatime)
mqueue on /dev/mqueue type mqueue (rw,relatime)
hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime)
/dev/md1 on /boot type ext3 (rw,relatime,stripe=4,data=ordered)
tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=6563484k,mode=700)
tmpfs on /run/user/1005 type tmpfs (rw,nosuid,nodev,relatime,size=6563484k,mode=700,uid=1005,gid=1005)
binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,relatime)
[root@opensourceecology tmp]#

10. It appears there's just a ton of cachegrind files here: 444,670 files to be exact (all <1M)

[root@opensourceecology tmp]# ls -lah | grep -vi cachegrind
total 72G
drwxrwxrwt 2 root root 22M Jul 30 06:02 .
drwx------ 3 root root 4.0K Jun 22 03:11 ..
-rw-r--r-- 1 apache apache 5 Jun 22 03:12 dos-127.0.0.1
-rw-r--r-- 1 apache apache 112M Jul 30 06:02 xdebug.log
[root@opensourceecology tmp]# ls -lah | grep "M"
drwxrwxrwt 2 root root 22M Jul 30 06:02 .
-rw-r--r-- 1 apache apache 112M Jul 30 06:02 xdebug.log
[root@opensourceecology tmp]# ls -lah | grep "G"
total 72G
[root@opensourceecology tmp]# ls -lah | grep 'cachegrind.out' | wc -l
444670
[root@opensourceecology tmp]# pwd
/tmp/systemd-private-2311ab4052754ae68f4a114aefa85295-httpd.service-LqLH0q/tmp
[root@opensourceecology tmp]# date
Tue Jul 30 06:04:26 UTC 2019
[root@opensourceecology tmp]#

13. These files should be deleted after 30 days, and that appears to be the case https://bugzilla.redhat.com/show_bug.cgi?id=1183684#c4
14. A quick search for xdebug shows that I enabled it for phplist; that's probably what's generating these cachegrind files. I commented out the lines enabling xdebug in the phplist apache vhost config file and gave httpd a restart. That cleared the tmp files. Now the disk usage is down to 73G used and 11M in /tmp
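For future reference, if a restart of httpd had not cleared the directory, counting and pruning the profiler output with `find` would avoid building a 444,670-entry `ls` listing. The following is a sketch under assumptions: the helper names are made up, the 30-day threshold is just an example value, and `-delete` is a GNU/BSD find extension rather than POSIX. The demo runs against a throwaway directory, not the real PrivateTmp path.

```shell
#!/bin/sh
# Count cachegrind output files under directory $1.
count_cachegrind() {
  find "$1" -type f -name 'cachegrind.out*' | wc -l | tr -d ' '
}

# Delete cachegrind output files under directory $1 that were last
# modified more than $2 days ago. Other files are left untouched.
prune_cachegrind() {
  find "$1" -type f -name 'cachegrind.out*' -mtime +"$2" -delete
}

# Demo in a scratch directory so nothing real is removed.
demo=$(mktemp -d)
touch "$demo/cachegrind.out.1" "$demo/cachegrind.out.2" "$demo/xdebug.log"
count_cachegrind "$demo"   # prints 2
```

On the server the first argument would be the `/tmp/systemd-private-…-httpd.service-…/tmp` directory shown above, e.g. `prune_cachegrind "$dir" 30`, run as root.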