OSE Server

As of 2024, Open Source Ecology has exactly one production server, and this article is specifically about this production server.

For information about OSE's development server, see OSE Development Server.

For information about OSE's staging server, see OSE Staging Server.

For information about the provisioning of OSE's current prod server in 2024, see Hetzner3.

Introduction

The OSE Server is a critical piece of the OSE Development Stack. Together, the OSE Software Stack and the OSE Server Stack are the 2 critical components of OSE's development infrastructure.

Uptime & Status Checks

If you think one of the OSE websites or services may be offline, you can verify their status at the following site:

* http://status.opensourceecology.org/

Note that this URL is just a convenient CNAME to uptime.statuscake.com, which is configured to redirect our CNAME to our Public Reporting Dashboard here:

* https://uptime.statuscake.com/?TestID=itmHX7Pfj2

It may be a good idea to bookmark the above URL in case our site goes down, since DNS issues could also break the CNAME redirect from status.opensourceecology.org.

Note that Statuscake also tracks uptime over months, and can send monthly uptime reports, as well as immediate email alerts when the site(s) go down. If you'd like to receive such alerts, contact the OSE System Administrator.

Adding Statuscake Checks

To modify our statuscake checks, log in to the statuscake website using the credentials stored in keepass.

If you want the test to be public (appearing on http://status.opensourceecology.org), you should add it by editing the Public Reporting Dashboard.

OSE Server Management

Assessment of Server Options

In 2011, OSE used a shared hosting plan (on a dedicated host, but without root access) from hetzner for 74.79 EUR/mo (AMD Athlon 64 X2 5600+ Processor, 4 GB RAM, 2x 400 GB Harddisks, 1 Gbit/s Connection). We called this server Hetzner1.

In 2016, OSE purchased a dedicated hosting plan from Hetzner for 39.00 EUR/mo (CentOS 7 dedicated bare-metal server with 4 CPU cores, 64G RAM, and 2x 250G SSDs in a software RAID1). This was a much cheaper *and* more powerful server than hetzner1. We called this server Hetzner2.

In 2018, we consolidated all our sites onto the under-utilized hetzner2 & canceled hetzner1.

In 2024, CentOS 7 no longer received updates, and OSE purchased a discounted dedicated server at auction for 37.72 EUR/mo (Debian 12 dedicated bare-metal server with 4 CPU cores, 64G RAM, and 2x 512G NVMe SSDs in a software RAID1). We call this server Hetzner3.

OSE Server and Server Requirements

As of 2024, the server (hetzner3) is fairly overprovisioned.

The 4-core system's load averages about 0.3 and spikes to about 2-3 several times per week. Therefore, we could probably run this site on 2 cores, but 4 cores gives us some breathing room.

This idle CPU is largely due to running a large varnish cache in memory. The system has 62G of usable RAM. I set varnish to use 40G, but that's more than is needed. We could probably run on a system with 10G RAM and large enough disks for swap (varnish w/ spill-over to swap is still probably faster than rendering the page over-and-over on the apache backend with mediawiki or plugin-bogged-down wordpress installs). For reference, mysqld uses ~0.9G, httpd uses ~5.5G, nginx uses ~0.2G, and system-journal uses 0.1G.

On hetzner2, the disks were about 50% full, but we've filled them many times when creating & restoring backups, migrating around the wiki, creating staging sites, etc. 512G feels about right. I don't think we should go smaller. For reference, /var/www is 32G, /var/log is 0.8G, /var/tmp is 0.6G, /var/lib/mysql is 6.7G, /var/ossec is 5.3G, and /home/b2user is 41G.

In conclusion, we require 2-4 cores, 10-20 G RAM, and ~512G disk.

For more info, see the analysis on hetzner2 that was done before it was replaced.

Looking Forward

Hint: Note that in 2022, we can get 2x the hard drive for the same price - 1TB instead of 500GB - Hetzner 2022 Dedicated Server

First, about Hetzner. Within my (Michael Altfield's) first few months at OSE (in mid 2017), our site went down for what was probably longer than 1 day (1)(2). We got no notification from Hetzner, no explanation why, and no notice when it came back. I emailed them asking for this information, and they weren't helpful. So the availability & customer support of Hetzner is awful, but we've been paying 40 EUR per month for a dedicated server with 64G of RAM since 2016. And the datacenter is powered by renewable energy. That's hard to beat. At this time, I think Hetzner is our best option.

That said, after being a customer with Hetzner for 5 years (from 2011), we bought a new plan (in 2016). The 2016 plan cost the same as our 2011 plan, but the RAM on our system, for example, jumped from 4G to 64G! Therefore, it would be wise to change plans on Hetzner every few years so that we get a significant upgrade on resources for the same fee.

Hetzner Cloud

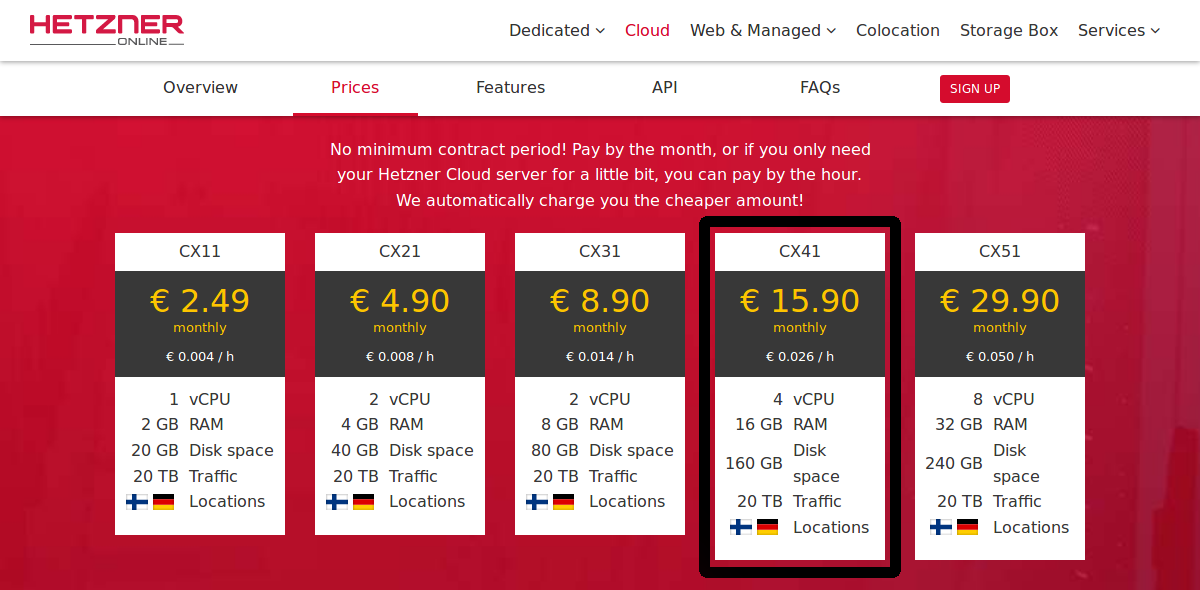

Based on the calculations above, their cloud platform seems tempting.

- CX51 - For 30 EUR/month, we can get a Hetzner cloud machine with 8 vCPU, 32G RAM, 240G disk space, & 20T traffic. If we migrated to that now, we'd save 120 EUR/year and still be overprovisioned.

- CX41 - Or for 16 EUR/month, we can get a 4 vCPU, 16G RAM, 160G disk, & 20T traffic node. That provisioning sounds about right with just enough headroom to be safe. Disk may be a concern, but we can mount up to 10T for an additional fee, though it's not clear how well-optimized & cost-effective that would be compared to an actual local disk on a dedicated server.
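The savings figures follow from simple arithmetic. Here is a minimal sketch, assuming the roughly 40 EUR/month dedicated-server price quoted earlier as the baseline; the function name `annual_savings` is made up for illustration:

```shell
# annual_savings CURRENT_EUR_PER_MONTH NEW_EUR_PER_MONTH
# Prints the yearly saving in whole EUR (integer arithmetic only).
annual_savings() {
  echo $(( ($1 - $2) * 12 ))
}

annual_savings 40 30   # CX51 -> 120
annual_savings 40 16   # CX41 -> 288
```

These numbers ignore any extra fee for mounted volumes, which would reduce the CX41 saving.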

Dedicated Servers

Moving to the cloud may not be the best option from a maintenance perspective. Dividing things up into microservices certainly has advantages, most of which are realized when you are serving millions of customers on a daily cycle, so you can spin up capacity during the day and down at night. At OSE, we wouldn't realize many of these benefits, and therefore it may not be worth it.

Just for an example, our current web server configuration has https terminated by nginx. Traffic to varnish and to the apache backend doesn't have to be encrypted because it's all occurring on the same machine over the loopback interface. Once we start moving traffic between many distinct cloud instances, all that traffic would have to be encrypted again, which some items in the stack (varnish) don't even have the capability to do with their free version (but a hack could be built with stunnel). This is just one example of the changes that would be necessary and the complexity that would be introduced by moving to the cloud.

My (Michael Altfield) advice: don't migrate to the cloud until OSE has at least 1 full-time IT staff to manage that complexity. Otherwise you're setting yourself up for failure.

A simpler solution would be to just stick with Dedicated Servers. As shown above, our current server as of 2019-12 is super idle. The only thing that's reaching capacity is our default RAID1 250G SSD disks. But we can easily just add up to two additional disks up to 12T in space to our dedicated server and move everything from /var/ onto them if we hit this limit.

* https://wiki.hetzner.de/index.php/Root_Server_Hardware/en#Drives

And as of 2019-12, we can "upgrade" to a Dedicated server for the same price that we're currently paying and get a better processor, same RAM, and 8x the disk space, albeit HDD instead of SSD (AX41[1])

* https://www.hetzner.com/dedicated-rootserver

* https://www.hetzner.com/sb

Provisioning

OSE's current server was provisioned in 2024 with ansible. You can find the ansible playbook on our GitHub:

* https://github.com/OpenSourceEcology/ansible

While Ansible works great for quickly setting up a base install after installing the Operating System (eg Debian), it's highly likely that, by the time you read this, config rot has led to a large drift between our Ansible config and the server's actual config.

At the time of writing, OSE is a small non-profit with 0 full-time sysadmins. Most OSE work is done by unpaid volunteers. A consequence of these facts is that there's a high rate of churn, with volunteers frequently coming and going. In this environment, it's almost certain that some volunteers will make changes directly to the server without bothering to make the changes to Ansible.

Provisioning tools like Ansible work best in large environments with many nodes -- where nodes are constantly being destroyed & launched with the provisioning tool.

Therefore, you would be wise to be cautious about using Ansible on an existing server -- you might end-up overwriting functional configs with now-broken configs. Always, always, always make backups. Do a dry-run first. Be ready for everything to horribly break, leaving you with a mountain of manual-restores & config-merging to do.
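A dry run can be sketched with Ansible's check mode. This is only an illustration: the helper name `ansible_dry_run`, the `ANSIBLE_PLAYBOOK_BIN` override, and the playbook and inventory file names are assumptions, not part of OSE's repository.

```shell
# Hypothetical helper: preview what a playbook would change without applying it.
# ANSIBLE_PLAYBOOK_BIN is an assumed override (handy for testing);
# it defaults to the real ansible-playbook command.
ansible_dry_run() {
  playbook="$1"    # path to the playbook (file name is an assumption)
  inventory="$2"   # path to the inventory that lists the target host
  # --check reports changes without making them; --diff shows file-level differences
  "${ANSIBLE_PLAYBOOK_BIN:-ansible-playbook}" -i "$inventory" "$playbook" --check --diff
}
```

For example, `ansible_dry_run provision.yml hosts.ini` (both file names hypothetical). Note that some tasks, such as raw shell commands, are skipped in check mode, so a clean dry run is not a guarantee that a real run is safe.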

If our server experiences a catastrophic failure requiring a rebuild, you can use ansible to speed-up provisioning of a replacement server -- but be prepared to merge files into our ansible config from the server backups. This is time-consuming (taking maybe a few days of work), but the data in the backups is the most trustworthy.

If we get to the point where we actually have >1 full-time sysadmin, we should investigate setting-up autoscaling stateless servers behind a load balancer. And we can then intentionally destroy & rebuild those servers at least a few times per week to prevent provisioning config rot/drift. That's the only way to have a trustworthy provisioning tool.

In the meantime, rebuilding our server after catastrophic failure means:

- Downloading the most recent full backup of our server (Hopefully nightlies are available)

- Installing a fresh server with the OS matching the previous server's OS (eg Debian), perhaps using a newer version

- Using Ansible to do a base-provisioning of the OS

- Installing any additional packages needed on the new server

- Copying the config files from the backups to the new server

- Copying & restoring the db contents to the new server

- Copying & restoring the web roots to the new server

- Testing, fixing, rebooting, etc. until the server can reboot & work as expected

Here are some package hints that you'll want to ensure are installed (probably in this order). Be sure to grep the backups for their config files, and restore the configs. But, again, this doc itself is going to rot; the source-of-truth is the backups.

- sshd

- iptables

- ip6tables

- Wazuh

- our backup scripts

- crond

- mariadb

- certbot (let's encrypt)

- nginx

- varnish - allows 90% of requests to be cached

- php-fpm

- apcu

- apache

- logrotated - prevents disks from getting filled up.

- awstats - hit statistics

- munin - for graphs: load over time, etc.

Important files to restore include

- /root/backups/backup.settings

- /root/backups/*.key

- /home/b2user/.config/rclone/rclone.conf

Don't forget to test & verify backups are working on your new server!

SSH

Our server has ssh access. If you require access to ssh, contact the OSE System Administrator with subject "ssh access request," and include the following information in the body of the email:

- An explanation as to why you need ssh access

- What you need access to

- Provide a link to a portfolio of prior experience working with linux over command line that demonstrates your experience & competency using the command line safely

- Provide a few references for previous work in which you had experience working with linux over command line

Add new users

The following steps will add a new user to the OSE Server.

First, create the new user. Generate & set a temporary, 100-character, random, alpha-numeric password for the user.

useradd <new_username>

passwd <new_username>
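One way to generate the temporary password is to filter random bytes down to alphanumeric characters. This is a minimal sketch; the function name `gen_password` is made up for illustration.

```shell
# Print a random alphanumeric password; length defaults to 100 characters.
gen_password() {
  length="${1:-100}"
  # Read random bytes, keep only A-Z, a-z and 0-9, and stop after $length characters
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
  echo
}
```

Run `gen_password` and paste the result at the `passwd` prompt.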

Only if it's necessary, send this password to the user through a confidential/encrypted medium (ie: the Wire app). They would need it if they want to reset their password. Note that they will not be able to authenticate with their password over ssh, and this is intentional. In fact, it is unlikely they will need their password at all, unless perhaps they will require sudo access. For this reason, it's best to set this password "just in case," not save it, and not send it to the user--it's more likely to confuse them. If they need their password for some reason in the future, you can reset it to a new random password in the future as the root user, and send it to them over an encrypted medium.

If the user needs ssh access, add them to the 'sshaccess' group.

gpasswd -a <new_username> sshaccess

Have the user generate a strong rsa keypair using the following command. Make sure they have it encrypted with a strong passphrase--to ensure they have 2FA. Then have them send you their new public key. The following commands should be run on the new user's computer, not the server:

ssh-keygen -t rsa -b 4096 -o -a 100

cat /home/<username>/.ssh/id_rsa.pub

The output from the `cat` command above is their public key. Have them send this to you. They can use an insecure medium such as email, as there is no reason to keep the public key confidential. They should never, ever send their private key (/home/<username>/.ssh/id_rsa) to anyone. Moreover, the private key should not be copied to any other computer, except in an encrypted backup. Note this means that the user should not copy their private key to OSE servers--that's what ssh agents are for.

Now, add the ssh public key provided by the user to their authorized_keys file on the OSE Server, and set the permissions:

cd /home/<new_username>

mkdir /home/<new_username>/.ssh

vim /home/<new_username>/.ssh/authorized_keys

chown -R <new_username>:<new_username> /home/<new_username>/.ssh

chmod 700 /home/<new_username>/.ssh

chmod 644 /home/<new_username>/.ssh/authorized_keys
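The same steps can be done without an interactive editor. The following is a sketch only: `install_pubkey` is a hypothetical helper, and the permissions mirror the commands above.

```shell
# install_pubkey HOME_DIR PUBKEY [OWNER]
# Appends PUBKEY to HOME_DIR/.ssh/authorized_keys and sets the permissions.
# If OWNER is given (requires root), ownership of .ssh is set as well.
install_pubkey() {
  home_dir="$1"
  pubkey="$2"
  owner="${3:-}"
  mkdir -p "$home_dir/.ssh"
  printf '%s\n' "$pubkey" >> "$home_dir/.ssh/authorized_keys"
  chmod 700 "$home_dir/.ssh"
  chmod 644 "$home_dir/.ssh/authorized_keys"
  if [ -n "$owner" ]; then
    chown -R "$owner:$owner" "$home_dir/.ssh"
  fi
}
```

As root, this might be called as `install_pubkey /home/<new_username> "<public key line>" <new_username>`.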

If the user needs sudo permissions, edit the sudoers file. This should only be done in very, very, very rare cases for users who have >5 years of experience working as a Linux Systems Administrator. Users with sudo access must be able to demonstrate a very high level of trust, experience, and competence working on the command line in a linux environment.

Backups

Hint: Backup milestone reached on Feb 16, 2019. Now the daily, weekly, monthly, and yearly backups appear to be accumulating (and self-deleting) as desired in our Backblaze B2 'ose-server-backups' bucket.

How to obtain & decrypt a backup from Backblaze B2

* https://wiki.opensourceecology.org/wiki/Backblaze

Our bill last month was $0.78, and it's estimating that the upcoming monthly bill will be $0.86. Surely, this will continue to rise (we should expect to store 500-1000G on B2; currently we just have 16 backups totaling to 193G), but we should be spending far less on B2 than the >$100/year that was estimated for Amazon Glacier, considering their minimum-archive-lifetime fine print.

I think this is the most important thing that I have achieved at OSE. I still want to add some logic to the backup script that will email us when a nightly backup fails for some reason, but our backup solution (and therefore all of OSE Server's data) has never been as safe & stable as it is today.

We actively back up our server's data on a daily basis.

Logging In

We use a shared Keepass file that lives on the OSE server for server-related logins. The password for Backblaze for server backups is on this shared Keepass file.

Important Files & Directories

The following files/directories are related to the daily backup process:

- /root/backups/backup.sh This is the script that performs the backups

- /root/backups/backupReport.sh This is the script that performs sanity checks on the remote backups and sends emails with the results

- /root/backups/sync/ This is where backup files are stored before they're rsync'd to the storage server. '/root/backups/sync*' is explicitly excluded from backups itself to prevent a recursive nightmare.

- /root/backups/sync.old/ This is where the files from the previous backup are stored; they're deleted by the backup script at the beginning of a new backup, and replaced by the files from 'sync'

- /root/backups/backup.settings This holds important variables for the backup script. Note that this file should be on heavy lockdown, as it contains critical credentials (passwords).

- /etc/cron.d/backup_to_backblaze This file tells the cron server to execute the backup script at 07:20 UTC, which is roughly midnight in North America--a time of low traffic for the OSE Server

- /var/log/backups/backup.log The backup script logs to this file
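The rotation between 'sync' and 'sync.old' described above can be sketched as follows. This is an illustration of the idea, not the contents of the actual backup.sh; the function name `rotate_staging` is hypothetical.

```shell
# rotate_staging BACKUP_ROOT
# Drops the previous backup's staging files, keeps the latest run as sync.old,
# and leaves an empty sync/ directory for the new backup.
rotate_staging() {
  backup_root="$1"
  rm -rf "$backup_root/sync.old"
  if [ -d "$backup_root/sync" ]; then
    mv "$backup_root/sync" "$backup_root/sync.old"
  fi
  mkdir -p "$backup_root/sync"
}
```

On the server this would correspond to `rotate_staging /root/backups`. Keeping one previous copy roughly doubles the disk space the staging area can use, which is worth remembering when sizing disks.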

What's Backed-Up

Here is what is being backed-up:

- mysqldump of all databases - including phpList?

- all files in /etc/* - these files include many os-level config files

- all files in /home/* (except the '/home/b2user/sync*' dirs) - these files include our users' home directories

- all files in /var/log/* - these files include log files

- all files in /root/* (except the 'backups/sync*' dirs) - these files include the root user's home directory

- all files in /var/www/* - these files include our web server files. The picture & media file storage location is application-specific. For wordpress, they're in wp-content/<year>/<month>/<file>. For mediawiki, they're in images/<dir>/<dir>/. For phplist, they're in uploadimages/. Etc.
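Excluding the staging directories from an archive, as the list above describes, can be done with tar's `--exclude` option. This is a minimal sketch assuming GNU tar; `archive_dir` and the archive file name in the usage note are hypothetical, and the real backup script may work differently (for example, it also encrypts the archives).

```shell
# archive_dir SRC DEST EXCLUDE_PATTERN
# Creates a gzip-compressed tarball of SRC at DEST, skipping paths that match
# EXCLUDE_PATTERN (relative to SRC, e.g. './backups/sync*').
archive_dir() {
  src="$1"
  dest="$2"
  exclude="$3"
  # --exclude must appear before the paths it applies to
  tar -czf "$dest" --exclude="$exclude" -C "$src" .
}
```

For the root user's home directory this might look like `archive_dir /root /root/backups/sync/root.tar.gz './backups/sync*'`. Because the destination itself lives under the excluded path, the archive does not try to include itself.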

Backup Server

OSE uses Backblaze B2 to store our encrypted backup archives on the cloud. For documentation on how to restore from a backup stored in Backblaze B2, see Backblaze#Restore_from_backups

Note that prior to 2019, OSE stored some backups to Amazon Glacier, but the billing fine-print of Glacier (minimum data retention) made Glacier unreasonably expensive for our daily backups.

Also note that, as a nonprofit, we're eligible for "unlimited" storage account with dreamhost. But, in fact, this isn't actually unlimited. Indeed, storing backups on Dreamhost is a violation of their policy, and OSE has been contacted by Dreamhost for violating this policy by storing backups on our account in the past.

Maintenance Mode

If you're doing maintenance on the OSE Server, use this simple script to temporarily put all of the server's websites in "maintenance mode"

root@hetzner3 ~ # website_maintenance.sh on

INFO: Enabling Maintenance Mode

Syntax OK

INFO: Maintenance Mode is now on

root@hetzner3 ~ #

When finished, simply run it again with 'off'

root@hetzner3 ~ # website_maintenance.sh off

INFO: Disabling Maintenance Mode

Syntax OK

INFO: Maintenance Mode is now off

root@hetzner3 ~ #

This script simply overrides the apache config file (by swapping the symlinks /etc/apache2/apache_prod.conf and /etc/apache2/apache_main.conf) so that it does *not* include all the vhosts in /etc/apache2/sites-enabled/ and instead enables just one vhost, /etc/apache2/sites-available/000-maintenance.conf, which serves a simple document root at /var/www/html/SITE_DOWN/htdocs/.
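The general technique of switching a config by repointing a symlink can be sketched as below. This is not the actual website_maintenance.sh: the function name `switch_config` and the file names in the usage note are hypothetical.

```shell
# switch_config LINK TARGET
# Repoints the symlink LINK at TARGET, refusing to do so if TARGET is missing.
switch_config() {
  link="$1"
  target="$2"
  if [ ! -e "$target" ]; then
    echo "ERROR: $target does not exist" >&2
    return 1
  fi
  # -s symbolic, -f replace an existing link, -n do not follow LINK if it points to a directory
  ln -sfn "$target" "$link"
}
```

For example, `switch_config /path/to/active.conf /path/to/maintenance.conf` (hypothetical paths). The `Syntax OK` line in the output above is what Apache's config test (`apachectl configtest`) prints, so the real script presumably validates the config before reloading Apache.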

To change the message displayed on all the websites when OSE Server is in "Maintenance Mode," simply edit the following file

/var/www/html/SITE_DOWN/htdocs/index.html

https

In 2017 & 2018, Michael Altfield migrated OSE sites to use https with Let's Encrypt certificates.

Nginx's https config was hardened using Mozilla's ssl-config-generator and the Qualys ssllabs.com SSL Server Test.

For more information on our https configuration, see Web server configuration#Nginx

Keepass

Whenever possible, we should utilize per-user credentials for logins so there is a user-specific audit trail and we have user-specific authorization-revocation abilities. However, where this is not possible, we should store usernames & passwords that our OSE Server infrastructure depends on in a secure & shared location. At OSE, we store such passwords in an encrypted keepass database that lives on the server.

passwords.kdbx file

The passwords.kdbx file is encrypted; if an attacker obtains this file, they will not be able to access any useful information. That said, we keep it in a central location on the OSE Server behind lock & key for a few reasons:

- The OSE Server already has nightly backups, so keeping the passwords.kdbx on the server simplifies maintenance by reusing existing backup procedures for the keepass file

- By keeping the file in a central location & updating it with sshfs, we can prevent forks & merges of per-person keepass files, which would complicate maintenance. Note that writes to this file are extremely rare, so multi-user access to the same file is greatly simplified.

- The keepass file is available on a need-to-have basis to those with ssh authorization that have been added to the 'keepass' group.

The passwords.kdbx file should be owned by the user 'root' and the group 'keepass'. It should have the file permissions of 660 (such that it can be read & written by 'root' and users in the 'keepass' group, but not accessible in any way from anyone else).

The passwords.kdbx file should exist in a directory '/etc/keepass', which is owned by the user 'root' and the group 'keepass'. This directory should have permissions 770 (such that it can be read, written, & executed by 'root' and users in the 'keepass' group, but not accessible in any way from anyone else).
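The ownership and permissions described above can be verified with a small check. This is a sketch assuming GNU coreutils `stat`; the function name `check_perms` is made up for illustration.

```shell
# check_perms PATH EXPECTED_MODE [EXPECTED_OWNER:GROUP]
# Prints OK or MISMATCH and returns non-zero on any mismatch.
check_perms() {
  path="$1"
  want_mode="$2"
  want_owner="${3:-}"
  have_mode="$(stat -c '%a' "$path")"
  have_owner="$(stat -c '%U:%G' "$path")"
  status=0
  if [ "$have_mode" != "$want_mode" ]; then
    echo "MISMATCH: $path has mode $have_mode, expected $want_mode"
    status=1
  fi
  if [ -n "$want_owner" ] && [ "$have_owner" != "$want_owner" ]; then
    echo "MISMATCH: $path is owned by $have_owner, expected $want_owner"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "OK: $path ($have_mode $have_owner)"
  fi
  return "$status"
}
```

On the server, the two checks would be `check_perms /etc/keepass 770 root:keepass` and `check_perms /etc/keepass/passwords.kdbx 660 root:keepass`.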

Users should not store a copy of the passwords.kdbx file on their local machines. This file should only exist on the OSE Server (and therefore also in backups).

Unlocking passwords.kdbx

In order to unlock the passwords.kdbx file, you need

- Keepass software on your personal computer capable of reading Keepass 2.x DB files

- sshfs installed on your personal computer

- ssh access to the OSE Server with a user account added to the 'keepass' group

- the keepass db password

- the keepass db key file

Note that the "Transform rounds" has been tuned to '87654321', which makes the unlock process take ~5 seconds. This also significantly decreases the effectiveness of brute-forcing the keys if an attacker obtains the passwords.kdbx file.

KeePassX

OSE Devs are recommended to use a linux personal computer. In this case, we recommend using the KeePassX client, which can be installed using the following command:

sudo apt-get install keepassx

sshfs

OSE Devs are recommended to use a linux personal computer. In this case, sshfs can be installed using the following command:

sudo apt-get install sshfs

You can now create a local directory on your personal computer where you can mount directories on the OSE Server locally on your personal computer's filesystem. We'll also store your personal keepass file & the ose passwords key file in '$HOME/keepass', so let's lock down the permissions as well:

mkdir -p $HOME/keepass/mnt/ose

chown -R `whoami`:`whoami` $HOME/keepass

find $HOME/keepass/ -type d -exec chmod 700 {} \;

find $HOME/keepass/ -type f -exec chmod 600 {} \;

ssh access

If you're working on a task that requires access to the passwords.kdbx file, you'll need to present the case to & request authorization from the OSE System Administrator asking for ssh access with a user that's been added to the 'keepass' group. Send an email to the OSE System Administrator explaining

- Why you require access to the OSE passwords.kdbx file and

- Why you can be trusted with all these credentials.

The System Administrator with root access can execute the following command on the OSE Server to add a user to the 'keepass' group:

gpasswd -a <username> keepass

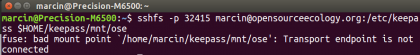

Once you have an ssh user in the 'keepass' group on the OSE Server, you can mount the passwords.kdbx file to your personal computer's filesystem with the following command:

sshfs -p 32415 <username>@opensourceecology.org:/etc/keepass $HOME/keepass/mnt/ose

keepass db password

OSE Devs are recommended to use a linux personal computer & store their personal OSE-related usernames & passwords in a personal password manager, such as KeePassX.

If you don't already have one, open KeePassX and create your own personal keepass db file. Save it to '$HOME/keepass/keepass.kdbx'. Be sure to use a long, secure passphrase.

After the OSE System Administrator grants you access to the OSE shared keepass file, they will establish a secure channel with you to send you the keepass db password, which is a long, randomly generated string. When you receive this password, you should store it in your personal keepass db.

This password, along with the key file, is a key to unlocking the encrypted passwords.kdbx file. You should use extreme caution to ensure that this string is kept secret & secure. Never give it to anyone through an unencrypted channel, write it down, or save it to an unencrypted file.

keepass db key file

After the OSE System Administrator grants you access to the OSE shared keepass file, they will establish a secure channel with you to send you the keepass db key file, which is a randomly generated 4096 byte file.

This key file is the most important key to unlocking the encrypted passwords.kdbx file. You should use extreme caution to ensure that this file is kept secret & secure. Never give this key file to anyone through an unencrypted channel, save it on an unencrypted storage medium, or keep it on the same disk as the passwords.kdbx file.

This key file should never be stored or backed-up in the same location as the passwords.kdbx file. It would be a good idea to store it on an external USB drive kept in a safe, rather than keeping it stored on your computer.

Unmounting

Note that a filesystem mounted on your local desktop applies whatever actions you take locally to the source files on the server. Thus, DO NOT TRASH the mounted keepass file on your computer, as this action will trash the file on the server. Unmount the file system from your local computer first:

umount $HOME/keepass/mnt/ose
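Before deleting anything under the mount directory, it can help to confirm whether the sshfs mount is still active. This is a minimal sketch: `safe_unmount` is a hypothetical helper, and it assumes the `mountpoint` (util-linux) and `fusermount` (FUSE) commands are installed.

```shell
# safe_unmount DIR
# Unmounts DIR if it is currently a mount point; otherwise just says so.
safe_unmount() {
  mnt="$1"
  if mountpoint -q "$mnt"; then
    # fusermount -u unmounts a FUSE filesystem (such as sshfs) without root
    fusermount -u "$mnt" && echo "unmounted $mnt"
  else
    echo "$mnt is not mounted"
  fi
}
```

For example, `safe_unmount "$HOME/keepass/mnt/ose"`.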

Shutting down your computer also serves to unmount the file system.

Errors

An error that keeps coming up is, as at 5:30 CST USA time on Aug 6, 2019:

Solution: run sudo pkill -f gnome-keyring-daemon

Another possible solution: did you create the directory to which it needs to be mounted? Specifically, run the mkdir, chown, find, and find commands from the sshfs section above.

TODO

Current Tasks

- Discourse POC

- Email alerts with munin (or nagios)

- Ransomware-proof backups (append-only, offsite cold-storage) Maltfield_Log/2019_Q4#Mon_Dec_02.2C_2019

- Design & document webapp upgrade procedure using staging server

- Fix awstats cron to include $year in static output dir (prevent overwrite)

- Optimize load speeds for osemain (www.opensourceecology.org) (eliminate unused content, minify, lazy load, varnish, modpagespeed, cdn, etc)

- AskBot POC

- Janus POC

- Jitsi Videobridge POC

- LibreOffice Online (CODE) POC

- Upgrade/migrate hetzner dedicated server to new plan of same price

Tasks completed in 2018/2019

- Move offsite backup storage destination from Dreamhost to Backblaze B2

- Email alerts if nightly backups to backblaze fail

- Monthly backups status report email

- Phplist

- Provision OSE Development Server in Hetzner Cloud

- Put Dev server behind OpenVPN intranet

- 2FA for VPN

- Document guide for authorizing new users to VPN (audience: OSE sysadmin)

- Document guide for users to gain VPN access (audience: OSE devs)

- Provision OSE Staging Server as lxc container on dev server

- Sync prod server services/files/data from prod to staging

- Automation script for syncing from prod to staging

Deprecate Hetzner1 (2017/18)

When I first joined OSE, the primary goal was to migrate all services off of Hetzner 1 onto Hetzner 2 and to terminate our Hetzner 1 plan entirely. This project had many, many dependencies (we didn't even have a functioning backup solution!). It started in 2017 Q2 and finished in 2018 Q4.

- Backups

- Harden SSH

- Document how to add ssh users to Hetzner 2

- Statuscake

- Awstats

- OSSEC

- Harden Apache

- Harden PHP

- Harden Mysql

- iptables

- Let's Encrypt for OBI

- Organize & Harden Wordpress for OBI

- Qualys SSL labs validation && tweaking

- Varnish Cache

- Disable Cloudflare

- Fine-tune Wiki config

- Munin

- Keepass solution + documentation

- Migrate forum to hetzner2

- Migrate oswh to hetzner2

- Migrate fef to hetzner2

- Migrate wiki to hetzner2

- Migrate osemain to hetzner2

- Deprecate forum

- Harden oswh

- Harden fef

- Harden osemain

- Harden wiki

- Backup data on hetzner1 to long-term storage (aws glacier)

- Block hetzner1 traffic to all services (though easily revertible)

- End Hetzner1 contract

- Encrypted Backups

Links

- Wordpress

- Mediawiki

- OSE Website

- GVCS Server Requirements

- Internet, Phone, Domains, Server

- Dedicated Server Options

- OSE_Online_Presence

- OSE_Wiki

- OSE_Site_Backup

- Website

- Web_server_configuration

- Difference Between a Dedicated and Managed Server

- HDD vs SSD Storage

- DDOS Attacks are an issue according to Y Combinator News - [2]

- Latency on Hetzner in different parts of the world - [3]

Changes

As of 2018-07, we have no ticket tracking or change control process. For now, everything is on the wiki as there are higher priorities. Hence, here are some articles used to track server-related changes:

- CHG-2018-07-06 hetzner1 deprecation - change to deprecate our hetzner1 server and contract by Michael Altfield

See Also

- OSE Development Server

- OSE Staging Server

- Hetzner3

- Backblaze

- Website

- Web server configuration

- Wordpress

- Vanilla Forums

- Mediawiki

- Munin

- Awstats

- Wazuh

- Google Workspace

FAQ

- How does Awstats compare to Google Analytics?