Maltfield Log/2018 Q2

My work log from the year 2018 Quarter 2. I intentionally made this verbose to make future admins' work easier when troubleshooting. The more keywords, error messages, etc. that are listed in this log, the more helpful it will be for the future OSE Sysadmin.

See Also

Sat Jun 30, 2018

- dug through my old emails to find the extension that Marcin & Michel wanted to add to our wiki; it's a 3d modeler that allows viewers to manipulate our stl files in real-time on the site using WebGL https://www.mediawiki.org/wiki/Extension:3DAlloy

- previously we couldn't install it because our version of mediawiki was too old. It's a lower priority for me right now (next to documentation, a scalable video conferencing solution, backups to s3, & deprecation of hetzner1), but it

- I updated our mediawiki article, adding this to the "proposed extensions" list https://wiki.opensourceecology.org/wiki/Mediawiki#Proposed

- found a more robust way to resize images when the images are a mixed batch of orientations (landscape vs portrait) & different sizes https://stackoverflow.com/questions/6384729/only-shrink-larger-images-using-imagemagick-to-a-ratio

# most robust; handles portrait & landscape images of various dimensions

# 786433 = 1024*768+1

find . -maxdepth 1 -iname '*.jpg' -exec convert {} -resize '786433@>' {} \;
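A minimal sketch of the arithmetic behind the '786433@>' geometry, assuming my reading of ImageMagick's flags is right: '@' treats the number as a pixel-area limit and '>' means "only shrink images larger than this". The function name would_shrink is made up for illustration; it only compares areas and does not call convert.

```shell
# area limit from the command above: one pixel more than 1024x768,
# so an image of exactly 1024x768 is left untouched
limit=$(( 1024 * 768 + 1 ))

# would_shrink WIDTH HEIGHT -> prints "yes" if the pixel area exceeds the limit
# (hypothetical helper; orientation does not matter because only the area is compared)
would_shrink() {
  if [ $(( $1 * $2 )) -gt "$limit" ]; then echo yes; else echo no; fi
}

would_shrink 1024 768    # landscape, just under the limit
would_shrink 768 1024    # portrait, same area
would_shrink 4000 3000   # large landscape
would_shrink 3000 4000   # large portrait
```

Because the check is on area rather than on width or height, landscape and portrait images of the same size are treated identically, which is why this form handles a mixed batch.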

- I updated our documentation on this https://wiki.opensourceecology.org/wiki/Batch_Resize

- uploaded my two photos from the D3D Extruder build (Modified Prusa i3 MK2) https://wiki.opensourceecology.org/wiki/D3D_Extruder#2018-06_Notes

Tue Jun 26, 2018

- Marcin pointed out that many of the extremely large images on the wiki display an error when mediawiki attempts to generate a thumbnail for them = "Error creating thumbnail: File with dimensions greater than 12.5 MP" https://wiki.opensourceecology.org/wiki/High_Resolution_GVCS_Media

- for example https://wiki.opensourceecology.org/wiki/File:1day.jpg

- according to mediawiki, this issue can be resolved by setting the $wgMaxImageArea variable to a higher value https://www.mediawiki.org/wiki/Manual:Errors_and_symptoms#Error_creating_thumbnail:_File_with_dimensions_greater_than_12.5_MP

- the $wgMaxImageArea value is capped to prevent mediawiki from using too much RAM. Because we have way, way more memory than we need on our machine, there appears to be no risk in increasing this value. Moreover, it should only need to be done once, as mediawiki generates these thumbnails & saves them as physical files in the 'images/thumb/' directory structure, for example https://wiki.opensourceecology.org/images/thumb/e/e6/Bhp1.jpg/300px-Bhp1.jpg

- there was a note in the above wiki article mentioning that increasing the $wgMaxImageArea value may necessitate increasing the $wgMaxShellMemory value as well. But since we don't let php call to a shell (and therefore we use gd instead of imagemagick), this is not relevant to us anymore.

- ^ that said, I was digging in the LocalSettings.php file and found that this $wgMaxShellMemory value had been set (by a previous admin), mentioning this blog article in the preceding comment https://blog.breadncup.com/2009/12/01/thumbnail-image-memory-allocation-error-in-mediawiki/

- ^ that blog article mentions that one of the benefits of imagemagick is that it stores the image file separately. If that means that gd does *not* store the image file separately, then that's not very good.

- after spending some time poking around at the thumbnail-related wiki articles, I found nothing suggesting that gd does not create temporary files after generating the thumbnails https://www.mediawiki.org/wiki/Manual:Configuration_settings#Thumbnail_settings

- the best test of this would be to see if the example file above which is erroring out will show a thumbnail file after we fix the error. If we can then browse to a physical file on the disk for that thumbnail, then we know that gd does generate thumbnails one-time (or at least as a file cache until expiry) https://wiki.opensourceecology.org/wiki/File:1day.jpg

- I set the $wgMaxImageArea variable to '1.25e7' = 12.5 million pixels, or roughly 3535×3535 (5000×5000 would be 2.5e7 = 25 million pixels) https://www.mediawiki.org/wiki/Manual:$wgMaxImageArea

- note that this is actually supposed to be the default value, but it fixed the error *shrug*
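A quick sketch of what the 1.25e7 value works out to, in case a future admin wants to check whether a given image would trip the limit. It assumes mediawiki simply compares width × height against $wgMaxImageArea; the helper name exceeds_limit is made up for illustration.

```shell
# $wgMaxImageArea as set above, expanded to a plain integer
max_area=$(awk 'BEGIN { printf "%d", 1.25e7 }')

# side length of the largest square image that still fits under the limit
max_square_side=$(awk -v a="$max_area" 'BEGIN { printf "%d", sqrt(a) }')

# exceeds_limit WIDTH HEIGHT -> prints "yes" if the pixel count is over the limit
# (hypothetical helper; assumes a plain width*height comparison)
exceeds_limit() {
  if [ $(( $1 * $2 )) -gt "$max_area" ]; then echo yes; else echo no; fi
}

echo "limit: $max_area pixels, largest square: ${max_square_side}x${max_square_side}"
exceeds_limit 3500 3500
exceeds_limit 5000 5000
```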

- after the change, the File:1day.jpg link refreshed to have thumbnails in-place of the errors. One of the thumbnails' image file was https://wiki.opensourceecology.org/images/thumb/f/fc/1day.jpg/120px-1day.jpg

- I confirmed that this was an actual file on the disk, and that it was just one of 12 thumbnails

[root@hetzner2 wiki.opensourceecology.org]# ls -lah htdocs/images/thumb/f/fc/1day.jpg/
total 1.1M
drwxrwx--- 2 apache apache 4.0K May 28 2015 .
drwxrwx--- 32 apache apache 4.0K Feb 4 14:56 ..
-rw-rw---- 1 apache apache 149K May 28 2015 1000px-1day.jpg
-rw-rw---- 1 apache apache 200K May 27 2015 1200px-1day.jpg
-rw-rw---- 1 apache apache 4.0K Jan 9 2013 120px-1day.jpg
-rw-rw---- 1 apache apache 324K May 27 2015 1599px-1day.jpg
-rw-rw---- 1 apache apache 7.9K Jan 9 2013 180px-1day.jpg
-rw-rw---- 1 apache apache 19K May 27 2015 300px-1day.jpg
-rw-rw---- 1 apache apache 21K May 27 2015 320px-1day.jpg
-rw-rw---- 1 apache apache 38K May 27 2015 450px-1day.jpg
-rw-rw---- 1 apache apache 45K Dec 27 2013 500px-1day.jpg
-rw-rw---- 1 apache apache 61K May 27 2015 600px-1day.jpg
-rw-rw---- 1 apache apache 92K May 28 2015 750px-1day.jpg
-rw-rw---- 1 apache apache 100K Jan 9 2013 800px-1day.jpg
[root@hetzner2 wiki.opensourceecology.org]#

- indeed, subsequent refreshes of this page loaded fast (the first was very, very slow)

- ...

- uploaded photos of my Jellybox build https://wiki.opensourceecology.org/wiki/Jellybox_1.3_Build_2018-05#Notes_on_my_Experience

- finished this & sent notice to Marcin

- next item on the documentation front is the d3d extruder photos. Then all the other misc photos.

Mon Jun 25, 2018

- so google finished processing our sitemap, and sent us an email on Saturday to notify us that it was done & had some errors

- 15 pages were "Submitted URL has crawl issue" (status = error)

- 2 pages were "Submitted URL blocked by robots.txt" (status = error)

- 2 pages were "Indexed, though blocked by robots.txt" (status = warning)

- 30299 pages were "Discovered - currently not indexed" (status = excluded)

- 1151 pages were "Crawled - currently not indexed" (status = excluded)

- 48 pages were "Excluded by ‘noindex’ tag" (status = excluded)

- 48 pages were "Blocked by robots.txt" (status = excluded)

- 32 pages were "Soft 404" (status = excluded)

- 11 pages were "Crawl anomaly" (status = excluded)

- 7 pages were "Submitted URL not selected as canonical" (status = excluded)

- 887 pages were "Submitted and indexed" (status = valid)

- 11 pages were "Indexed, not submitted in sitemap" (status = valid)

- so out of the 32,360 pages that we submitted to Google from the mediawiki-generated sitemap, it looks like only 887 got indexed

- there were only 2 error types covering 17 pages

- 15 pages were "Submitted URL has crawl issue" (status = error):

- https://wiki.opensourceecology.org/wiki/Agricultural_Robot

- https://wiki.opensourceecology.org/wiki/Practical_Post-Scarcity_Video

- https://wiki.opensourceecology.org/wiki/25%25_Discounts

- https://wiki.opensourceecology.org/wiki/User:Marcin

- https://wiki.opensourceecology.org/wiki/Michael_Log

- https://wiki.opensourceecology.org/wiki/Marcin_Biography/es

- https://wiki.opensourceecology.org/wiki/Tractor_Construction_Set_2017

- https://wiki.opensourceecology.org/wiki/CEB_Press_Fabrication_videos

- https://wiki.opensourceecology.org/wiki/Development_Team_Log

- https://wiki.opensourceecology.org/wiki/Tractor_User_Manual

- https://wiki.opensourceecology.org/wiki/Marcin_Biography

- https://wiki.opensourceecology.org/wiki/CNC_Machine

- https://wiki.opensourceecology.org/wiki/OSEmail

- https://wiki.opensourceecology.org/wiki/Donate

- https://wiki.opensourceecology.org/wiki/Hello

- 2 pages were "Submitted URL blocked by robots.txt" (status = error)


- for the second group, yes, our robots.txt blocks crawlers from accessing our Template pages. I'm surprised that the sitemap mediawiki generated included these and that, if it did include them, it only had 2 templates. In any case, this is not an issue

user@ose:~$ curl https://wiki.opensourceecology.org/robots.txt
User-agent: *
Disallow: /index.php?
Disallow: /index.php/Help
Disallow: /index.php/Special:
Disallow: /index.php/Template
Disallow: /wiki/Help
Disallow: /wiki/Special:
Disallow: /wiki/Template
Crawl-delay: 15
user@ose:~$
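A rough sketch for checking which paths the rules above cover. It does plain prefix matching against the Disallow lines copied from the robots.txt output; real crawlers implement more than this (Allow rules, wildcards, per-agent groups), so treat it as an approximation. The function name is_blocked is made up for illustration.

```shell
# is_blocked PATH -> prints "blocked" if PATH starts with any Disallow prefix
# from our robots.txt, otherwise "allowed" (hypothetical helper, prefix match only)
is_blocked() {
  for prefix in '/index.php?' '/index.php/Help' '/index.php/Special:' \
                '/index.php/Template' '/wiki/Help' '/wiki/Special:' '/wiki/Template'; do
    case "$1" in
      # the quoted "$prefix" is matched literally, so '?' is not a wildcard here
      "$prefix"*) echo blocked; return 0 ;;
    esac
  done
  echo allowed
}

is_blocked '/wiki/Template:OrigLang'
is_blocked '/wiki/Agricultural_Robot'
is_blocked '/index.php?title=Main_Page&action=info'
```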

- regarding the 15 pages with "Submitted URL has crawl issue" (status = error):

- https://wiki.opensourceecology.org/wiki/Agricultural_Robot

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Practical_Post-Scarcity_Video

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/25%25_Discounts

- I attempted a "Fetch as Google," and I got an error. Indeed, I reproduced a 403.


user@ose:~$ curl -i "https://wiki.opensourceecology.org/wiki/25%25_Discounts"
HTTP/1.1 403 Forbidden
Server: nginx
Date: Mon, 25 Jun 2018 17:47:45 GMT
Content-Type: text/html; charset=iso-8859-1
Content-Length: 220
Connection: keep-alive
X-Varnish: 10297078 10201494
Age: 916
Via: 1.1 varnish-v4

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>403 Forbidden</title>
</head><body>
<h1>Forbidden</h1>
<p>You don't have permission to access /wiki/25%_Discounts on this server.</p>
</body></html>
user@ose:~$

- I confirmed that nginx saw the request, and it is listed as a 403

[root@hetzner2 httpd]# tail -f /var/log/nginx/wiki.opensourceecology.org/access.log | grep -i '_Discounts'
65.49.163.89 - - [25/Jun/2018:17:53:56 +0000] "GET /wiki/25%25_Discounts HTTP/1.1" 403 220 "-" "curl/7.52.1" "-"

- digging deeper, I saw that varnish was also processing the request, and (doh!) it's registering a hit for a 403. It shouldn't be caching 403 errors!

[root@hetzner2 httpd]# varnishlog -q "ReqHeader eq 'X-Forwarded-For: 65.49.163.89'"
* << Request >> 10297385
- Begin req 10297384 rxreq
- Timestamp Start: 1529949449.294763 0.000000 0.000000
- Timestamp Req: 1529949449.294763 0.000000 0.000000
- ReqStart 127.0.0.1 48760
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader User-Agent: curl/7.52.1
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- Hit 10201494
- VCL_call HIT
- VCL_return deliver
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
- RespHeader Date: Mon, 25 Jun 2018 17:32:29 GMT
- RespHeader Server: Apache
- RespHeader Content-Length: 220
- RespHeader Content-Type: text/html; charset=iso-8859-1
- RespHeader X-Varnish: 10297385 10201494
- RespHeader Age: 1499
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529949449.294801 0.000038 0.000038
- Debug "RES_MODE 2"
- RespHeader Connection: close
- Timestamp Resp: 1529949449.294821 0.000058 0.000020
- Debug "XXX REF 2"
- ReqAcct 234 0 234 226 220 446
- End

- I'm using the default config from mediawiki's guide, but I guess they don't use mod_security https://www.mediawiki.org/wiki/Manual:Varnish_caching#Configuring_Varnish_4.

- first, I need to reproduce the issue: clear the varnish cache, attempt to hit it, & get a 200; then clear the varnish cache again, attempt to hit it in some malicious way, get a 403, attempt to hit it in a non-malicious way, & get a 403 again

# I ran this on the server to clear the varnish cache just for the relevant page
[root@hetzner2 sites-enabled]# varnishadm 'ban req.url ~ "_Discounts"'
[root@hetzner2 sites-enabled]#
# then I attempted to hit the server in an unmalicious way
user@ose:~$ curl -i "https://wiki.opensourceecology.org/wiki/25%25_Discounts"
HTTP/1.1 403 Forbidden
Server: nginx
Date: Mon, 25 Jun 2018 18:12:55 GMT
Content-Type: text/html; charset=iso-8859-1
Content-Length: 220
Connection: keep-alive
X-Varnish: 10190775
Age: 0
Via: 1.1 varnish-v4

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>403 Forbidden</title>
</head><body>
<h1>Forbidden</h1>
<p>You don't have permission to access /wiki/25%_Discounts on this server.</p>
</body></html>
user@ose:~$

- In the above step, I still got the 403! Digging into varnish shows that it *did* miss the cache, then the backend responded again with a 403.

[root@hetzner2 httpd]# varnishlog -q "ReqHeader eq 'X-Forwarded-For: 65.49.163.89'"
* << Request >> 10190775
- Begin req 10190774 rxreq
- Timestamp Start: 1529950375.885007 0.000000 0.000000
- Timestamp Req: 1529950375.885007 0.000000 0.000000
- ReqStart 127.0.0.1 51724
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader User-Agent: curl/7.52.1
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- ExpBan 10201494 banned lookup
- Debug "XXXX MISS"
- VCL_call MISS
- VCL_return fetch
- Link bereq 10190776 fetch
- Timestamp Fetch: 1529950375.885816 0.000809 0.000809
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
- RespHeader Date: Mon, 25 Jun 2018 18:12:55 GMT
- RespHeader Server: Apache
- RespHeader Content-Length: 220
- RespHeader Content-Type: text/html; charset=iso-8859-1
- RespHeader X-Varnish: 10190775
- RespHeader Age: 0
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529950375.885848 0.000840 0.000031
- Debug "RES_MODE 2"
- RespHeader Connection: close
- Timestamp Resp: 1529950375.885870 0.000863 0.000023
- Debug "XXX REF 2"
- ReqAcct 234 0 234 214 220 434
- End

- repeating this step (clearing varnish, attempt to curl unmaliciously) & tailing the apache logs showed the error

[root@hetzner2 httpd]# tail -f wiki.opensourceecology.org/error_log wiki.opensourceecology.org/access_log | grep -i '_Discount'
[Mon Jun 25 18:21:11.239838 2018] [:error] [pid 2751] [client 127.0.0.1] ModSecurity: Access denied with code 403 (phase 2). Invalid URL Encoding: Non-hexadecimal digits used at REQUEST_URI. [file "/etc/httpd/modsecurity.d/activated_rules/modsecurity_crs_20_protocol_violations.conf"] [line "461"] [id "950107"] [rev "2"] [msg "URL Encoding Abuse Attack Attempt"] [severity "WARNING"] [ver "OWASP_CRS/2.2.9"] [maturity "6"] [accuracy "8"] [tag "OWASP_CRS/PROTOCOL_VIOLATION/EVASION"] [hostname "wiki.opensourceecology.org"] [uri "/wiki/25%_Discounts"] [unique_id "WzEyl9G0FuNKXv-7ydMIPAAAAAs"]
127.0.0.1 - - [25/Jun/2018:18:21:11 +0000] "GET /wiki/25%25_Discounts HTTP/1.1" 403 220 "-" "curl/7.52.1"

- I whitelisted this rule id = 950107, protocol violation. Then I tried again, and it worked!

# ran on the server
[root@hetzner2 sites-enabled]# varnishadm 'ban req.url ~ "_Discounts"'
[root@hetzner2 sites-enabled]#
# ran on the client
user@ose:~$ curl -I "https://wiki.opensourceecology.org/wiki/25%25_Discounts"
HTTP/1.1 200 OK
Server: nginx
Date: Mon, 25 Jun 2018 19:26:50 GMT
Content-Type: text/html; charset=UTF-8
Content-Length: 0
Connection: keep-alive
X-Content-Type-Options: nosniff
Content-language: en
X-UA-Compatible: IE=Edge
Link: </images/ose-logo.png?be82f>;rel=preload;as=image
Vary: Accept-Encoding,Cookie
Cache-Control: s-maxage=18000, must-revalidate, max-age=0
Last-Modified: Mon, 25 Jun 2018 14:26:50 GMT
X-XSS-Protection: 1; mode=block
X-Varnish: 10084665
Age: 0
Via: 1.1 varnish-v4
Strict-Transport-Security: max-age=15552001
Public-Key-Pins: pin-sha256="UbSbHFsFhuCrSv9GNsqnGv4CbaVh5UV5/zzgjLgHh9c="; pin-sha256="YLh1dUR9y6Kja30RrAn7JKnbQG/uEtLMkBgFF2Fuihg="; pin-sha256="C5+lpZ7tcVwmwQIMcRtPbsQtWLABXhQzejna0wHFr8M="; pin-sha256="Vjs8r4z+80wjNcr1YKepWQboSIRi63WsWXhIMN+eWys="; pin-sha256="lCppFqbkrlJ3EcVFAkeip0+44VaoJUymbnOaEUk7tEU="; pin-sha256="K87oWBWM9UZfyddvDfoxL+8lpNyoUB2ptGtn0fv6G2Q="; pin-sha256="Y9mvm0exBk1JoQ57f9Vm28jKo5lFm/woKcVxrYxu80o="; pin-sha256="EGn6R6CqT4z3ERscrqNl7q7RCzJmDe9uBhS/rnCHU="; pin-sha256="NIdnza073SiyuN1TUa7DDGjOxc1p0nbfOCfbxPWAZGQ="; pin-sha256="fNZ8JI9p2D/C+bsB3LH3rWejY9BGBDeW0JhMOiMfa7A="; pin-sha256="oyD01TTXvpfBro3QSZc1vIlcMjrdLTiL/M9mLCPX+Zo="; pin-sha256="0cRTd+vc1hjNFlHcLgLCHXUeWqn80bNDH/bs9qMTSPo="; pin-sha256="MDhNnV1cmaPdDDONbiVionUHH2QIf2aHJwq/lshMWfA="; pin-sha256="OIZP7FgTBf7hUpWHIA7OaPVO2WrsGzTl9vdOHLPZmJU="; max-age=3600; includeSubDomains; report-uri="http:opensourceecology.org/hpkp-report"
user@ose:~$
# ran on the server
[root@hetzner2 httpd]# tail -f wiki.opensourceecology.org/error_log wiki.opensourceecology.org/access_log | grep -i '_Discount'
127.0.0.1 - - [25/Jun/2018:19:26:50 +0000] "HEAD /wiki/25%25_Discounts HTTP/1.0" 200 - "-" "curl/7.52.1"
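A small sketch of why this page title trips rule 950107, based on my reading of the ModSecurity log line rather than on the rule's source. The request carries '%25' (an encoded percent sign), but the log reports the uri as '/wiki/25%_Discounts', i.e. after one round of decoding, and there '%_D' is not a valid hex escape. The function name check_encoding is made up for illustration.

```shell
# check_encoding STRING -> prints "invalid" if any '%' is not followed by
# two hexadecimal digits, otherwise "valid" (hypothetical helper)
check_encoding() {
  if printf '%s\n' "$1" | grep -Eq '%([0-9A-Fa-f]?$|[^0-9A-Fa-f]|[0-9A-Fa-f][^0-9A-Fa-f])'; then
    echo invalid
  else
    echo valid
  fi
}

check_encoding '/wiki/25%25_Discounts'   # the URL as sent by curl
check_encoding '/wiki/25%_Discounts'     # the uri as reported in the error_log
```

Any page whose title contains a literal percent sign would presumably hit the same rule, which is why whitelisting the rule id was chosen over renaming this one page.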

- the easiest way to simulate a malicious request is to set the user agent to empty. This worked fine.

user@ose:~$ curl "https://wiki.opensourceecology.org/wiki/25%25_Discounts" -A ''
<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>403 Forbidden</title>
</head><body>
<h1>Forbidden</h1>
<p>You don't have permission to access /wiki/25%_Discounts on this server.</p>
</body></html>
user@ose:~$

- I was able to reproduce the issue, proving that varnish was caching the 403 error

# first I clear the varnish cache on the server
[root@hetzner2 sites-enabled]# varnishadm 'ban req.url ~ "_Discounts"'
[root@hetzner2 sites-enabled]#
# then I hit the server 'maliciously' using an empty user agent from the client
user@ose:~$ curl "https://wiki.opensourceecology.org/wiki/25%25_Discounts" -A ''
<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>403 Forbidden</title>
</head><body>
<h1>Forbidden</h1>
<p>You don't have permission to access /wiki/25%_Discounts on this server.</p>
</body></html>
user@ose:~$
# then I observe the server's varnish logs show that it was a MISS, and it got a 403 from the apache backend
[root@hetzner2 httpd]# varnishlog -q "ReqHeader eq 'X-Forwarded-For: 65.49.163.89'"
* << Request >> 10084940
- Begin req 10084939 rxreq
- Timestamp Start: 1529956554.678479 0.000000 0.000000
- Timestamp Req: 1529956554.678479 0.000000 0.000000
- ReqStart 127.0.0.1 48158
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- ExpBan 10359649 banned lookup
- Debug "XXXX MISS"
- VCL_call MISS
- VCL_return fetch
- Link bereq 10084941 fetch
- Timestamp Fetch: 1529956554.679141 0.000662 0.000662
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
- RespHeader Date: Mon, 25 Jun 2018 19:55:54 GMT
- RespHeader Server: Apache
- RespHeader Content-Length: 220
- RespHeader Content-Type: text/html; charset=iso-8859-1
- RespHeader X-Varnish: 10084940
- RespHeader Age: 0
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529956554.679173 0.000694 0.000032
- Debug "RES_MODE 2"
- RespHeader Connection: close
- Timestamp Resp: 1529956554.679207 0.000727 0.000034
- Debug "XXX REF 2"
- ReqAcct 209 0 209 214 220 434
- End
# then I hit the server again, this time unmaliciously (without the empty useragent)
user@ose:~$ curl "https://wiki.opensourceecology.org/wiki/25%25_Discounts"
<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>403 Forbidden</title>
</head><body>
<h1>Forbidden</h1>
<p>You don't have permission to access /wiki/25%_Discounts on this server.</p>
</body></html>
user@ose:~$
# then I observe the logs, showing that the 403 the user got back was indeed cached
[root@hetzner2 httpd]# varnishlog -q "ReqHeader eq 'X-Forwarded-For: 65.49.163.89'"
* << Request >> 10084945
- Begin req 10084944 rxreq
- Timestamp Start: 1529956570.226469 0.000000 0.000000
- Timestamp Req: 1529956570.226469 0.000000 0.000000
- ReqStart 127.0.0.1 48168
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader User-Agent: curl/7.52.1
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- Hit 10084941
- VCL_call HIT
- VCL_return deliver
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
- RespHeader Date: Mon, 25 Jun 2018 19:55:54 GMT
- RespHeader Server: Apache
- RespHeader Content-Length: 220
- RespHeader Content-Type: text/html; charset=iso-8859-1
- RespHeader X-Varnish: 10084945 10084941
- RespHeader Age: 16
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529956570.226507 0.000039 0.000039
- Debug "RES_MODE 2"
- RespHeader Connection: close
- Timestamp Resp: 1529956570.226526 0.000058 0.000019
- Debug "XXX REF 2"
- ReqAcct 234 0 234 224 220 444
- End
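The reproduction above comes down to two fields per varnishlog record: whether the lookup was a HIT or a MISS, and which RespStatus was delivered. A hedged sketch for pulling just those two out of a record follows; the function name summarize_record is made up, and it assumes one log tag per line as varnishlog normally prints them.

```shell
# summarize_record: read one varnishlog record on stdin and print
# "<HIT|MISS> <status>" (hypothetical helper; assumes one tag per line)
summarize_record() {
  awk '
    $2 == "VCL_call" && ($3 == "HIT" || $3 == "MISS") { lookup = $3 }
    $2 == "RespStatus" { status = $3 }
    END { print lookup, status }
  '
}

# excerpt of the second record above (the non-malicious request)
summarize_record <<'EOF'
- VCL_call HASH
- VCL_return lookup
- Hit 10084941
- VCL_call HIT
- VCL_return deliver
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
EOF
```

A "HIT 403" for a request with a normal user agent is the symptom of the cached error; after a working fix the same request should summarize as "MISS 200" and then "HIT 200".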

- now that I've proven it to be reproducible with a defined process, I'll attempt to fix the wiki's varnish config and see if it now does *not* cache the 403, as desired. The fix includes copying a similar conditional statement from the wordpress configs into the vcl_backend_response subroutine of /etc/varnish/sites-enabled/wiki.opensourceecology.org. Here's the entire new subroutine:

sub vcl_backend_response {
    if ( beresp.backend.name == "wiki_opensourceecology_org" ){
        # set minimum timeouts to auto-discard stored objects
        set beresp.grace = 120s;
        if (beresp.ttl < 48h) {
            set beresp.ttl = 48h;
        }
        # Avoid caching error responses
        if ( beresp.status != 200 && beresp.status != 203 && beresp.status != 300 && beresp.status != 301 && beresp.status != 302 && beresp.status != 304 && beresp.status != 307 && beresp.status != 410 && beresp.status != 404 ) {
            set beresp.uncacheable = true;
            return (deliver);
        }
        if (!beresp.ttl > 0s) {
            set beresp.uncacheable = true;
            return (deliver);
        }
        if (beresp.http.Set-Cookie) {
            set beresp.uncacheable = true;
            return (deliver);
        }
        # if (beresp.http.Cache-Control ~ "(private|no-cache|no-store)") {
        #     set beresp.uncacheable = true;
        #     return (deliver);
        # }
        if (beresp.http.Authorization && !beresp.http.Cache-Control ~ "public") {
            set beresp.uncacheable = true;
            return (deliver);
        }
        return (deliver);
    }
}

- after making the change above, I tested the config's syntax & reloaded the varnish config if there were no errors

[root@hetzner2 sites-enabled]# varnishd -Cf /etc/varnish/default.vcl && service varnish reload
...
Redirecting to /bin/systemctl reload varnish.service
[root@hetzner2 sites-enabled]#

- I repeated the test above, but on the second (non-malicious) request varnish returned a hit-for-pass. The ideal sequence would be a 403 for the malicious request, followed by a miss, followed by a hit, followed by further hits. So I changed the subroutine's logic a bit, choosing not to use the 'uncacheable' declaration that I borrowed from the nearby lines of the mediawiki-provided config for this subroutine.

sub vcl_backend_response {
    if ( beresp.backend.name == "wiki_opensourceecology_org" ){
        # set minimum timeouts to auto-discard stored objects
        set beresp.grace = 120s;
        if (beresp.ttl < 48h) {
            set beresp.ttl = 48h;
        }
        # Avoid caching error responses
        if ( beresp.status != 200 && beresp.status != 203 && beresp.status != 300 && beresp.status != 301 && beresp.status != 302 && beresp.status != 304 && beresp.status != 307 && beresp.status != 410 && beresp.status != 404 ) {
            set beresp.ttl = 0s;
            set beresp.grace = 15s;
            return (deliver);
        }
        if (!beresp.ttl > 0s) {
            set beresp.uncacheable = true;
            return (deliver);
        }
        if (beresp.http.Set-Cookie) {
            set beresp.uncacheable = true;
            return (deliver);
        }
        # if (beresp.http.Cache-Control ~ "(private|no-cache|no-store)") {
        #     set beresp.uncacheable = true;
        #     return (deliver);
        # }
        if (beresp.http.Authorization && !beresp.http.Cache-Control ~ "public") {
            set beresp.uncacheable = true;
            return (deliver);
        }
        return (deliver);
    }
}
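To make the status condition in the subroutine above easier to eyeball, here is a small sketch that mirrors only that one check: which backend status codes still go into the cache and which get a 0s ttl. The list is copied from the VCL condition; the function name cache_decision is made up, and the Set-Cookie and Authorization checks are deliberately left out.

```shell
# status codes the VCL condition above lets through to the cache
cacheable_statuses="200 203 300 301 302 304 307 410 404"

# cache_decision STATUS -> prints "cache" or "no-cache"
# (hypothetical helper; models the status check only, not cookies or auth)
cache_decision() {
  for code in $cacheable_statuses; do
    if [ "$1" = "$code" ]; then
      echo cache
      return 0
    fi
  done
  echo no-cache
}

cache_decision 200   # normal page
cache_decision 403   # mod_security denial
cache_decision 404   # missing page, still cached
cache_decision 500   # backend error
```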

- this produced the desired results: 403 (when malicious) then 200 in subsequent non-malicious queries

* << Request >> 10044374
- Begin req 10044373 rxreq
- Timestamp Start: 1529958240.758676 0.000000 0.000000
- Timestamp Req: 1529958240.758676 0.000000 0.000000
- ReqStart 127.0.0.1 54452
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- ExpBan 10043967 banned lookup
- Debug "XXXX MISS"
- VCL_call MISS
- VCL_return fetch
- Link bereq 10044375 fetch
- Timestamp Fetch: 1529958240.759438 0.000762 0.000762
- RespProtocol HTTP/1.1
- RespStatus 403
- RespReason Forbidden
- RespHeader Date: Mon, 25 Jun 2018 20:24:00 GMT
- RespHeader Server: Apache
- RespHeader Content-Length: 220
- RespHeader Content-Type: text/html; charset=iso-8859-1
- RespHeader X-Varnish: 10044374
- RespHeader Age: 0
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529958240.759455 0.000779 0.000018
- Debug "RES_MODE 2"
- RespHeader Connection: close
- Timestamp Resp: 1529958240.759482 0.000806 0.000027
- Debug "XXX REF 2"
- ReqAcct 209 0 209 214 220 434
- End
* << Request >> 10044377
- Begin req 10044376 rxreq
- Timestamp Start: 1529958244.980716 0.000000 0.000000
- Timestamp Req: 1529958244.980716 0.000000 0.000000
- ReqStart 127.0.0.1 54456
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader User-Agent: curl/7.52.1
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- Debug "XXXX MISS"
- VCL_call MISS
- VCL_return fetch
- Link bereq 10044378 fetch
- Timestamp Fetch: 1529958245.159527 0.178811 0.178811
- RespProtocol HTTP/1.1
- RespStatus 200
- RespReason OK
- RespHeader Date: Mon, 25 Jun 2018 20:24:04 GMT
- RespHeader Server: Apache
- RespHeader X-Content-Type-Options: nosniff
- RespHeader Content-language: en
- RespHeader X-UA-Compatible: IE=Edge
- RespHeader Link: </images/ose-logo.png?be82f>;rel=preload;as=image
- RespHeader Vary: Accept-Encoding,Cookie
- RespHeader Cache-Control: s-maxage=18000, must-revalidate, max-age=0
- RespHeader Last-Modified: Mon, 25 Jun 2018 15:24:05 GMT
- RespHeader X-XSS-Protection: 1; mode=block
- RespHeader Content-Type: text/html; charset=UTF-8
- RespHeader X-Varnish: 10044377
- RespHeader Age: 0
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529958245.159577 0.178861 0.000050
- Debug "RES_MODE 4"
- RespHeader Connection: close
- RespHeader Accept-Ranges: bytes
- Timestamp Resp: 1529958245.174460 0.193744 0.014883
- Debug "XXX REF 2"
- ReqAcct 234 0 234 509 14354 14863
- End
* << Request >> 10044380
- Begin req 10044379 rxreq
- Timestamp Start: 1529958250.800502 0.000000 0.000000
- Timestamp Req: 1529958250.800502 0.000000 0.000000
- ReqStart 127.0.0.1 54462
- ReqMethod GET
- ReqURL /wiki/25%25_Discounts
- ReqProtocol HTTP/1.0
- ReqHeader X-Real-IP: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-Port: 443
- ReqHeader Host: wiki.opensourceecology.org
- ReqHeader Connection: close
- ReqHeader User-Agent: curl/7.52.1
- ReqHeader Accept: */*
- ReqUnset X-Forwarded-For: 65.49.163.89
- ReqHeader X-Forwarded-For: 65.49.163.89, 127.0.0.1
- VCL_call RECV
- ReqUnset X-Forwarded-For: 65.49.163.89, 127.0.0.1
- ReqHeader X-Forwarded-For: 127.0.0.1
- VCL_return hash
- VCL_call HASH
- VCL_return lookup
- Hit 10044378
- VCL_call HIT
- VCL_return deliver
- RespProtocol HTTP/1.1
- RespStatus 200
- RespReason OK
- RespHeader Date: Mon, 25 Jun 2018 20:24:04 GMT
- RespHeader Server: Apache
- RespHeader X-Content-Type-Options: nosniff
- RespHeader Content-language: en
- RespHeader X-UA-Compatible: IE=Edge
- RespHeader Link: </images/ose-logo.png?be82f>;rel=preload;as=image
- RespHeader Vary: Accept-Encoding,Cookie
- RespHeader Cache-Control: s-maxage=18000, must-revalidate, max-age=0
- RespHeader Last-Modified: Mon, 25 Jun 2018 15:24:05 GMT
- RespHeader X-XSS-Protection: 1; mode=block
- RespHeader Content-Type: text/html; charset=UTF-8
- RespHeader X-Varnish: 10044380 10044378
- RespHeader Age: 6
- RespHeader Via: 1.1 varnish-v4
- VCL_call DELIVER
- VCL_return deliver
- Timestamp Process: 1529958250.800542 0.000040 0.000040
- RespHeader Content-Length: 14354
- Debug "RES_MODE 2"
- RespHeader Connection: close
- RespHeader Accept-Ranges: bytes
- Timestamp Resp: 1529958250.800574 0.000072 0.000032
- Debug "XXX REF 2"
- ReqAcct 234 0 234 541 14354 14895
- End

- fixed! I checked it out in the "Fetch as Google" and confirmed it worked there too, so I clicked the 'Request indexing' button

- https://wiki.opensourceecology.org/wiki/User:Marcin

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Michael_Log

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Marcin_Biography/es

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Tractor_Construction_Set_2017

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/CEB_Press_Fabrication_videos

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Development_Team_Log

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Tractor_User_Manual

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/Marcin_Biography

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index

- https://wiki.opensourceecology.org/wiki/CNC_Machine

- I attempted a "Fetch as Google," and I got no issues. I clicked the button to add it to the index, but I got an error = "An error occured. Please try again later."

- https://wiki.opensourceecology.org/wiki/OSEmail

- I attempted a "Fetch as Google," and I got no issues.

- https://wiki.opensourceecology.org/wiki/Donate

- I attempted a "Fetch as Google," and I got no issues.

- https://wiki.opensourceecology.org/wiki/Hello

- I attempted a "Fetch as Google," and I got no issues.

- 2 pages were "Indexed, though blocked by robots.txt" (status = warning)

- https://wiki.opensourceecology.org/index.php?title=Civilization_Starter_Kit_DVD_v0.01/eo&action=edit&redlink=1

- https://wiki.opensourceecology.org/index.php?title=Civilization_Starter_Kit_DVD_v0.01/th&action=edit&redlink=1

- I have no idea why google ignored our robots.txt telling it not to index these, but I guess that's out of our hands..

- 30299 pages were "Discovered - currently not indexed" (status = excluded)

- 48 of these pages were "Excluded by 'noindex' tag", as they should be. here's a few examples

- 48 of these pages (same as above, not a typo) were "Blocked by robots.txt", as they should be. here's a few examples:

- 32 of these pages were "Soft 404". I have no idea what that means. Here's a few examples:

- 11 of these pages were "Crawl anomaly". I have no idea what this means. Here's a few examples:

- https://wiki.opensourceecology.org/load.php?debug=false&lang=en&modules=mediawiki.legacy.commonPrint,shared%7Cmediawiki.sectionAnchor%7Cmediawiki.skinning.interface%7Cskins.vector.styles&only=styles&skin=vector

- https://wiki.opensourceecology.org/index.php?title=Template:OrigLang

- https://wiki.opensourceecology.org/index.php?title=Main_Page&action=info

- 7 of these pages were "Submitted URL not selected as canonical"

- https://wiki.opensourceecology.org/wiki/Earth_Sheltered_Greenhouse

- https://wiki.opensourceecology.org/wiki/Civilization_Starter_Kit_v0.01

- https://wiki.opensourceecology.org/wiki/Walipini

- https://wiki.opensourceecology.org/wiki/Solar_Microhut

- https://wiki.opensourceecology.org/wiki/Lathe

- https://wiki.opensourceecology.org/wiki/Category:Open_source_ecology_community

- https://wiki.opensourceecology.org/wiki/Tractor

- note that all 7 of the above pages redirect to another page, so it looks like Google made the right choice here.

- ~30,300 pages are listed as "Discovered - currently not indexed"

- this page defines what this means as "The page was found by Google, but not crawled yet. Typically, Google tried to crawl the URL but the site was overloaded; therefore Google had to reschedule the crawl. This is why the last crawl date is empty on the report." https://support.google.com/webmasters/answer/7440203#discovered__unclear_status

- so I guess we just wait for these pages to get crawled

- 1,151 pages were "Crawled - currently not indexed"

- this page defines what this means as "The page was crawled by Google, but not indexed. It may or may not be indexed in the future; no need to resubmit this URL for crawling."

- I translate that to: "your page sucks, and isn't worth storing"

- 100% of these URLs end with a date "6/19/18" or "6/18/18" and don't actually exist in our wiki. So that's fine too. For example: https://wiki.opensourceecology.org/wiki/Hydronic_Heat_System,6/19/18

- hopefully the 30,300 pages listed as "Discovered - currently not indexed" will trickle into the index at a rate of at least roughly 1,000 per week over the next few weeks

- I emailed Marcin my findings & extrapolations from the above research

- I now see 43 pages of search results from google about our wiki https://www.google.com/search?q=site:wiki.opensourceecology.org
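As a rough sanity check on the backlog above, here is a minimal sketch of how long ~30,300 pages would take to be indexed at a steady 1,000 pages per week. The rate is only the hoped-for figure from this log, not anything Google promises, and the function name is made up for illustration.

```python
import math

# Figures taken from the log entry above.
discovered_not_indexed = 30_300   # pages "Discovered - currently not indexed"
assumed_pages_per_week = 1_000    # hoped-for rate; an assumption, not a Google guarantee


def weeks_to_index(pages: int, pages_per_week: int) -> int:
    """Return the number of whole weeks needed to index `pages` at a steady rate."""
    return math.ceil(pages / pages_per_week)


weeks = weeks_to_index(discovered_not_indexed, assumed_pages_per_week)
print(f"{weeks} weeks at {assumed_pages_per_week} pages/week")
```

At that rate the backlog would take about 31 weeks to clear, so it is worth re-checking the report monthly rather than weekly.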

- ...

- I successfully created a certificate for jangouts.opensourceecology.org using `certbot certonly` on our ec2 instance

[root@ip-172-31-28-115 yum.repos.d]# certbot certonly

...

Input the webroot for jangouts.opensourceecology.org: (Enter 'c' to cancel): /var/www/html/jangouts.opensourceecology.org/htdocs

Waiting for verification...

Resetting dropped connection: acme-v01.api.letsencrypt.org

Cleaning up challenges

IMPORTANT NOTES:

 - Congratulations! Your certificate and chain have been saved at: /etc/letsencrypt/live/jangouts.opensourceecology.org/fullchain.pem Your key file has been saved at: /etc/letsencrypt/live/jangouts.opensourceecology.org/privkey.pem Your cert will expire on 2018-09-23. To obtain a new or tweaked version of this certificate in the future, simply run certbot again. To non-interactively renew *all* of your certificates, run "certbot renew"

 - Your account credentials have been saved in your Certbot configuration directory at /etc/letsencrypt. You should make a secure backup of this folder now. This configuration directory will also contain certificates and private keys obtained by Certbot so making regular backups of this folder is ideal.

 - If you like Certbot, please consider supporting our work by: Donating to ISRG / Let's Encrypt: https://letsencrypt.org/donate Donating to EFF: https://eff.org/donate-le

[root@ip-172-31-28-115 yum.repos.d]#

- I updated the nginx config with these certs & reloaded it

- I confirmed that the site is now working without needing crazy exceptions for the janus gateway API's port and the frontend 443 port.

- I sent Marcin an email with links pointing to the relevant janus gateway demos and jangouts, asking him to test it. Once his tests are done, I'll tear down this centos-based node and rebuild it using debian so we can do a POC of jitsi. We can then launch an ose jitsi running on ec2 only for a few days out of the year--when we have workshops or extreme builds & need it to support many consume-only viewers, which webrtc won't scale to without some self-hosted logic (like scraping & republishing a private jitsi meet into a public stream using jitsi's Jibri) https://github.com/jitsi/jibri

- we can also automate this build process using ansible. The Freedom of the Press Foundation has already built an ansible role for this https://github.com/freedomofpress/ansible-role-jitsi-meet

Tue Jun 19, 2018

- since I stopped 403ing all the useragents with 'bot' in their name yesterday (ie: googlebot), I noticed that we're finally indexed again on Google.com! This now yields 13 pages of results (yesterday it was an entirely empty set) https://www.google.com/search?q=site:wiki.opensourceecology.org

- I logged into the Google Webmaster Tools. There's still a lot of pages that say "no data," and an infobox said some data may be a week old. I should just wait a couple weeks.

- It did finish processing our sitemap, and noted 32,360 pages were submitted :)

- there's a '-' in the index field, which appears that it should hold a date. My guess: they haven't finished indexing our sitemap yet.

- finally, the "robots.txt Tester" page shows our robots page, but the fucking page says that our line "Crawl-delay: 15" is *ignored* by Googlebot!
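For reference, here is a minimal sketch of how a crawler that does honor the directive would read it, using Python's standard `urllib.robotparser`. Only the `Crawl-delay: 15` line comes from this log; the rest of the robots.txt content and the bot name `SomePoliteBot` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; only "Crawl-delay: 15" is taken from the log.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 15
Disallow: /index.php
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A well-behaved crawler would wait this many seconds between requests.
delay = parser.crawl_delay("SomePoliteBot")

# The Disallow rule (hypothetical here) is evaluated independently of the delay.
edit_allowed = parser.can_fetch(
    "SomePoliteBot",
    "https://wiki.opensourceecology.org/index.php?title=Main_Page&action=edit",
)
article_allowed = parser.can_fetch(
    "SomePoliteBot", "https://wiki.opensourceecology.org/wiki/Main_Page"
)

print(delay, edit_allowed, article_allowed)
```

The directive parses fine, so the tester's message is about Googlebot choosing to ignore it, not about a syntax error on our side.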

- I did some more research on sitemaps & seo. It appears that google may actually not index all your pages, as it will generally stop indexing on 3 or 4 levels deep, especially if the level above it doesn't have a very good rank. Sitemaps may help that.

- then I found this link that suggested (with sources) that it's actually a myth, so I'll abstain from cronifying this https://northcutt.com/wr/[[3]]oogle-ranking-factors/

- I spent some time reading through this. Most of my enlightenment came from the "things that harm your rank." For example, I know we have some old linkfarm articles to viagra and whatnot. We should clean that up or google won't be happy with us.

- so before the migration, I did find many articles google bombing links to pharmaceuticals, etc. I had found them with Recent Changes, but at the time we didn't have Nuke = Mass Delete. So I decided just to hold off until after the migration. Well, now that the migration is complete, I can't find the old spam articles! It would be nice if there was a better search feature that could pinpoint the spam. Google mostly provides advice on how to prevent spam. We got the prevention down, we just need to find the old stuff :\

- recent changes only goes back 30 days, and this was months ago *shrug*

- I blocked 1 user and their spammy article = Johngraham

- another option is to go through our external links, but this is an insurmountable task https://wiki.opensourceecology.org/index.php?title=Special:LinkSearch&limit=500&offset=1500&target=http%3A%2F%2F*

- also useful is this category: candidates for speedy deletion. Not sure how it's populated though https://wiki.opensourceecology.org/wiki/Category:Candidates_for_speedy_deletion

Mon Jun 18, 2018

- reset Germán's password (user = Cabeza_de_Pomelo) & sent it to them via email

- Marcin mentioned that he's getting much worse search results from google for our wiki post-migration

- This is odd; I'd expect changing to https to increase our ranking

- Indeed, simply doing a search for our site yields literally *no* results. It looks almost like they banned us? https://www.google.com/search?q=site%3Awiki.opensourceecology.org

- I registered for the "Google Webmaster Tools" & validated our site, which included adding a nonce html verification file to the docroot. Currently there is no data; hopefully we'll get some info from this in a few days or weeks. https://www.google.com/webmasters/tools/home

- I added catarina & marcin (by email address) as users to the Google Webmaster Tools with full permissions

- I confirmed that google has *not* made any "manual actions" against our site (ie: banning us due to spam or sth) https://www.google.com/webmasters/tools/manual-action

- I confirmed that we have "0" URLs indexed by google and that "0" were blocked by robots.txt, so this can't be a robots.txt issue either.. https://www.google.com/webmasters/tools/index-status

- one of the things that the Google Webmaster Tools asks for in many places is a sitemap. I did some research on many online tools for generating an xml sitemap to upload to google, and on local linux command line tools for generating a sitemap. Then, I discovered that mediawiki has a built-in sitemap generator :) https://www.mediawiki.org/wiki/Manual:GenerateSitemap.php

pushd /var/www/html/wiki.opensourceecology.org/htdocs

mkdir sitemap

pushd sitemap

time nice php /var/www/html/wiki.opensourceecology.org/htdocs/maintenance/generateSitemap.php --fspath=/var/www/html/wiki.opensourceecology.org/htdocs/sitemap/ --urlpath=https://wiki.opensourceecology.org/sitemap/ --server=https://wiki.opensourceecology.org

- the generation took less than 3 seconds. that beats hours for wget!

- the new sitemap was made public here https://wiki.opensourceecology.org/sitemap/sitemap-index-osewiki_db-wiki_.xml
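A sitemap index like the one above is just XML in the sitemaps.org namespace that lists child sitemap files. Here is a minimal sketch of reading one, in case a future admin wants to check what was generated. The sample XML and the `example-part-N` file names are hypothetical stand-ins, not the real output of generateSitemap.php.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sample index; the child file names are made up for illustration.
SAMPLE_INDEX = """\
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://wiki.opensourceecology.org/sitemap/example-part-0.xml.gz</loc>
    <lastmod>2018-06-18T00:00:00Z</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://wiki.opensourceecology.org/sitemap/example-part-1.xml.gz</loc>
  </sitemap>
</sitemapindex>
"""


def child_sitemaps(index_xml: str) -> list:
    """Return the <loc> URL of every child sitemap listed in a sitemap index."""
    root = ET.fromstring(index_xml)
    return [loc.text.strip() for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS)]


children = child_sitemaps(SAMPLE_INDEX)
for url in children:
    print(url)
```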

- I was able to hit this sitemap fine, but google complained that it got a 403. Interesting..

- there were no modsecurity alerts, but I think it was an nginx best-practice I picked-up from here https://www.tecmint.com/nginx-web-server-security-hardening-and-performance-tips/

- I literally had a line in the main nginx file that said this may need adjustment if it impacts seo

[root@hetzner2 httpd]# grep -C 2 -i seo /etc/nginx/nginx.conf

server_tokens off;

# block some bot's useragents (may need to remove some, if impacts SEO)

include /etc/nginx/blockuseragents.rules;

[root@hetzner2 httpd]#

- so I commented out the match for 'bot' in this list & reloaded nginx

[root@hetzner2 httpd]# cat /etc/nginx/blockuseragents.rules

map $http_user_agent $blockedagent {

default 0;

~*malicious 1;

# ~*bot 1;

~*backdoor 1;

~*crawler 1;

~*bandit 1;

}

[root@hetzner2 httpd]#

- that fixed it! It's now processing our sitemap..
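To illustrate why that one line mattered, here is a minimal sketch that mimics the nginx map with Python regexes. In an nginx map, `~*` means a case-insensitive regex match, so `~*bot` matches any user agent containing "bot", including Googlebot. The user-agent string below is Googlebot's commonly published one and is used only as an example; `is_blocked` is a made-up helper name.

```python
import re

# Patterns mirror the nginx map in blockuseragents.rules, with 'bot' re-enabled.
BLOCKED_PATTERNS = ["malicious", "bot", "backdoor", "crawler", "bandit"]


def is_blocked(user_agent: str, patterns=BLOCKED_PATTERNS) -> bool:
    """Return True if any pattern matches the user agent, ignoring case (like nginx ~*)."""
    return any(re.search(p, user_agent, re.IGNORECASE) for p in patterns)


# Example user agent (commonly published Googlebot string).
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# With the 'bot' pattern active, Googlebot gets flagged (and 403'd by nginx).
with_bot = is_blocked(GOOGLEBOT_UA)

# With the 'bot' pattern removed, Googlebot is let through.
without_bot = is_blocked(GOOGLEBOT_UA, [p for p in BLOCKED_PATTERNS if p != "bot"])

print(with_bot, without_bot)
```

Note that the remaining `crawler` pattern would still block any search engine whose user agent contains that word.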

- Marcin specifically asked if I had removed any SEO related extensions. I checked the list of deprecated extensions that I removed, and nothing looks like it was SEO-related https://wiki.opensourceecology.org/wiki/Maltfield_Log/2018_Q1#Mon_Jan_22.2C_2018

- I did find a google-related extension that I removed = 'google-coop' (it was listed as 'archived' and stated not to work with current MediaWiki versions). But this extension appears to be related to Google Custom Search and not SEO https://www.mediawiki.org/wiki/Extension:Google_Custom_Search_Engine

- It may be worthwhile to *add* an SEO extension, however. At least, it would be good to add some meta keywords to our pages.

- on first look, this appears to be the best seo extension. it's actively maintained, has keyword/description tag, facebook, and twitter support https://www.mediawiki.org/wiki/Extension:WikiSEO

- I spent some time documenting wikipedia's expenses. They have hundreds of servers (maybe > 1,000?) and claim to own $13 million in hardware, then spend another $2.1 million on bandwidth/rack space/etc.

- I spent some time documenting our mediawiki extensions list. Note that 3 of the extensions we use (OATHAuth, Replace Text, and Renameuser) are all included in the current stable version of mediawiki v1.31--which of course came out the month after our major migration/upgrade https://wiki.opensourceecology.org/wiki/Mediawiki#Extensions

- I spent some time filling-in the documentation on how to update mediawiki

Fri Jun 15, 2018

- finished documenting my notes from attempting to build the OSE adapted D3D extruder from the prusa i3 mk2 https://wiki.opensourceecology.org/wiki/D3D_Extruder#2018-06_Notes

- went to install certbot on our cent7 ec2 instance running janus/jangouts, but I got dependency issues with 'python-zope-interface'. The fix was actually to enable an amazon repo, as this is apparently an issue in ec2 described here https://github.com/certbot/certbot/issues/3544

yum -y install yum-utils

yum-config-manager --enable rhui-REGION-rhel-server-extras rhui-REGION-rhel-server-optional

- note that 'REGION' in the command above is literal; do not substitute the name of the region you're in

- I combined two articles that documented batch image resizing https://wiki.opensourceecology.org/wiki/Batch_Resize

- this one is now a redirect https://wiki.opensourceecology.org/index.php?title=Batch_Image_Resize_in_Ubuntu&redirect=no

- I documented why we have a 1M cap on image uploads. I don't think we should change that limit until we commit to hiring a full-time sysadmin with a budget of >=$200k/year for IT expenses https://wiki.opensourceecology.org/wiki/Mediawiki#.24maxUploadSize

Thr Jun 14, 2018

- Tom said he could ssh in but couldn't sudo because he didn't remember his password. I reset his password & sent it to him via email, asking for confirmation again.

- Marcin mentioned that a new user registered on the wiki & noted that our Terms of Service are blank upon registering

- I fixed a link to openfarmtech to be wiki.opensourceecology.org here https://wiki.opensourceecology.org/wiki/Open_Source_Ecology:General_disclaimer

- this exists, though it's just a link https://wiki.opensourceecology.org/wiki/Open_Source_Ecology:Privacy_policy

- It appears that the issue is this page https://wiki.opensourceecology.org/wiki/Special:RequestAccount

- the above page links to a non-existent https://wiki.opensourceecology.org/wiki/Open_Source_Ecology:Terms_of_Service

- I read through the documentation of the ConfirmAccount extension https://www.mediawiki.org/wiki/Extension:ConfirmAccount

- We currently don't have many customizations set for this extension. Here's what we have in LocalSettings.php

require_once "$IP/extensions/ConfirmAccount/ConfirmAccount.php";

$wgFileStore['accountreqs'] = "$IP/images/ConfirmAccount";

$wgFileStore['accountcreds'] = "$IP/images/ConfirmAccount";

$wgConfirmAccountContact = 'marcin@opensourceecology.org';

- all other defaults and options are best referenced by the sample file at /var/www/html/wiki.opensourceecology.org/htdocs/extensions/ConfirmAccount/ConfirmAccount.config.php

- for example, I see that we *are* creating user pages from their bio

- we *are* creating a talk page for the user welcoming them to our wiki. This can be customized here https://wiki.opensourceecology.org/wiki/MediaWiki:Confirmaccount-welc

- the bio has a minwords = 50

- I sent Marcin an email with my findings

- finished editing the video of the d3d workshop & documenting the day's build

Wed Jun 06, 2018

- I finished documenting our trials in building a square for the d3d frame out of strips of metal bonded together with epoxy https://wiki.opensourceecology.org/wiki/D3D_frame_built_with_epoxy

- Emailed Chris about Persistence

- Tom responded that he has been using linux for 25 years and is a Red Hat Certified Engineer since 2001. I added him to the 'wheel' group, so he should now have sudo rights. I am just waiting for his confirmation (he may need to reset his password).

- I emailed Marcin about the risks of granting him root, mentioning that it's atypical for a CEO or Executive Director to have root access onto the machines.

- fired-up kdenlive in an attempt to mix a worthwhile video out of our stitched-together video of timelapse photos

- first, I wanted to find a worthwhile open source soundtrack. I wanted to use "Alien Orange Lab" by My Free Mickey at ccmixter.org http://ccmixter.org/files/myfreemickey/45683

- unfortunately, this track is licensed as NC (NonCommercial) under CC. We probably want something that permits commercial use, so we can use it in, for example, a video advertising our workshops

- I did some more digging & documented a few tracks that allow commercial use here https://wiki.opensourceecology.org/wiki/Open_Source_Soundtracks#CCMixter

- I think I'll use "Welcome in the intox" by Bluemillenium http://ccmixter.org/files/Bluemillenium/57202

Tue Jun 05, 2018

- Marcin sent me an email stating he was having issues logging into osemain due to 2FA issues. I logged in successfully & responded telling him to make sure the time on his phone was exact (up to the second) and retry.
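The clock advice above makes sense if the 2FA in question is time-based one-time passwords (TOTP, RFC 6238), which is an assumption on my part. Here is a minimal sketch of the algorithm showing that the code depends only on the shared secret and the current 30-second window, so a phone clock that is off by more than the window (plus whatever slack the server allows) produces a code the server rejects. The secret is the RFC's published test secret, not a real credential.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for the given time."""
    counter = unix_time // step                       # index of the 30-second window
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                           # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


SECRET = b"12345678901234567890"  # RFC 6238 test secret, not a real credential

same_window_a = totp(SECRET, 31)   # 31 // 30 == 1
same_window_b = totp(SECRET, 59)   # 59 // 30 == 1, same window, same code
next_window = totp(SECRET, 60)     # 60 // 30 == 2, one second later, different code

print(same_window_a, same_window_b, next_window)
```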

- Updated my log & hours

- Fixed some wiki links around the OSE Developer leaderboard to make it less buried

- Added a wiki article about the tree protectors https://wiki.opensourceecology.org/wiki/Tree_protector

- Meeting agenda notes

- Hazelnut tree protectors. 66 laid over 10 hours.

- built jellybox

- used jellybox to print components & assemble Prusa i3 mk2 extruder assembly

- d3d workshop + time-lapse documentation

- weekly meeting

- created stubs where I'll spend the rest of my week documenting my work the past 2 weeks

Mon Jun 04, 2018

- responded to Tom & Chris emails

- dumped photos off phone from the D3D build

Sat Jun 02, 2018

- today I helped Marcin build the D3D printer in a workshop at a homestead near Lawrence, Kansas. I made a few notes about what went wrong & how we can improve this machine for future iterations

- The bolts for the carriage & end pieces (the ones that are 3d printed halves & sandwiched by the bolts/nuts to sandwich the long metal axis rods) are inaccessible under the motor. So if there's an issue, you have to take off the motor's 4 small bolts to access the end piece's 4 bolts. This happened to me when I used the same sized bolts in all 4x holes. In fact, one or two of the bolts should go through the metal frame of the cube. So I had to remove the motor to replace that bolt. Indeed, this also had to be done on a few of the other 5 axes as well, slowing down the build. It would be great if we could alter this piece by moving the bolts further from the motor, including making the pieces larger, if necessary.

- One new addition to this design was the LCD screen, and a piece of plexiglass to which we zip-tied all our electronics, then mounted the plexiglass to the frame via magnets. The issue: When we arranged the electronics on the plexiglass, we were mostly concerned about wire location & lengths. We did not consider that the LCD module (which has an SD card slot on its left side) would need to be accessible. Instead, it was butted against the heated bed relay, making the SD card slot inaccessible. In future builds, we should ensure that this component has nothing to its left side, so that the SD card slot remains accessible.

- we used my phone + Open Camera to capture a time-lapse of it

Fri Jun 01, 2018

- spent another couple hours installing tree protectors for the hazelnut trees. This is my last day, and the total count of protectors I've installed is 66. I spent a total of ~10 hours putting these 66 protectors up.

- I fixed a modsecurity false-positive

- 950911 generic attack, http response splitting

- spent some time researching steel wire wrapping in consideration for if it could be used in a plastic, 3d printed composite http://www.appropedia.org/Open_Source_Multi-Head_3D_Printer_for_Polymer-Metal_Composite_Component_Manufacturing

- spent some time researching carbon fiber in consideration for if it could be used in a plastic, 3d printed composite

- spent some time researching bamboo fiber in consideration for if it could be used in a plastic, 3d printed composite

- further research is necessary on methods for mechanical retting of bamboo without chemicals

Thr May 31, 2018

- spent another couple hours installing tree protectors for the hazelnut trees after Marcin gave me more wire from the workshop yesterday. 'Current count for hazelnut trees protected is 54.'

- The field is quite large, but I estimate that I'm 25-40% through the keylines. That means, as of 2018-05 (or about 1 year after they were planted), there's approximately a few hundred hazelnut trees planted along keylines at FeF. If those survived last winter (and they can get proper protection from rabbits), then they'll probably stick around. Even though the numbers are far less than the numbers planted, it's hard to complain about having _only_ a few 'hundred' hazelnut trees!
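The estimate above can be made explicit with a small calculation. This is a sketch that assumes the surviving trees are spread evenly along the keylines, which nothing in this log confirms; the inputs are the 54 trees protected so far and the 25-40% coverage guess.

```python
# Inputs from the log entry above.
protected_so_far = 54
fraction_low, fraction_high = 0.25, 0.40   # guessed share of the keylines covered

# Assumption: trees are evenly distributed along the keylines.
# If 54 trees is 40% of the total, the total is smaller; if 25%, it is larger.
estimate_low = round(protected_so_far / fraction_high)
estimate_high = round(protected_so_far / fraction_low)

print(f"estimated surviving hazelnut trees: {estimate_low} to {estimate_high}")
```

That puts the estimate at roughly 135 to 216 trees.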

- I spent a couple hours in the shop attempting to build a square side (ie: for our D3D printer) out of cut metal strips joined with quick-set epoxy. Logs

- I finished assembling one of the extruders!

- some of these m3 bolts are 18 mm long, some are 25 mm, one is 20mm. It would be great if we could make them all 20mm.

- some of these bolts are supposed to just go straight into the plastic. That doesn't work well. The bolts don't go in & usually just fall out

- and the spots where we're supposed to insert a nut into a hole & push it to the back don't work so well. In contrast, the nut catches (where you slide the nut in sideways, rather than pushing it back into the hole) work *great*. If possible, we should use a nut catcher on all bolts. Or just have a washer/nut outside the structure entirely, if that's possible.

Wed May 30, 2018

- spent some time installing tree protectors for the hazelnut trees before running out of wire

- spent some time researching GitHub's file size limits. There's no hard limit on repo size, but they ask that repos be kept under 1G. Individual files are capped at 100M, which is great. They also have another service called "Git LFS" = Large File Storage. LFS is for files 100M-2G in size, and it does store versions. This is provided for free up to 1G (so the 2G limit isn't free!) https://blog.github.com/2015-04-08-announcing-git-large-file-storage-lfs/
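Given the 100M per-file cap noted above, here is a minimal sketch for spotting files in a working tree that would be too big to push without LFS. The helper name `oversized_files` is made up, and treating "100M" as 100 MiB is an assumption. The demo at the bottom uses throwaway files and a tiny limit so it runs anywhere.

```python
import tempfile
from pathlib import Path

FILE_LIMIT_BYTES = 100 * 1024 * 1024  # per-file cap from the log; MiB is an assumption


def oversized_files(root, limit=FILE_LIMIT_BYTES):
    """Return sorted (relative_path, size) pairs for files larger than `limit` bytes."""
    root = Path(root)
    hits = []
    for path in root.rglob("*"):
        # Skip git's own object store; only the working tree matters here.
        if ".git" in path.relative_to(root).parts:
            continue
        if path.is_file():
            size = path.stat().st_size
            if size > limit:
                hits.append((path.relative_to(root).as_posix(), size))
    return sorted(hits)


# Demo with tiny throwaway files and a tiny limit instead of real 100M files.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "small.stl").write_bytes(b"x" * 10)
    (Path(tmp) / "big.mp4").write_bytes(b"x" * 50)
    demo_hits = oversized_files(tmp, limit=20)

print(demo_hits)
```

On a real checkout, calling `oversized_files(".")` from the repo root would list the candidates for LFS.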

- The git page pointed me to a backup solution whose website had a useful comparison of costs for their competitors' services https://www.arqbackup.com/features/

- Right now I'm estimating that we're paying ~$100/year for a combination of s3 + glacier storage of ~1 TB. We pay-what-we-use, but the process of using it is so damn complicated (and slow for Glacier!) that if we could pay <$100 for 1T elsewhere, it's worth considering

- Microsoft OneDrive is listed as $7/mo for 1T

- Backblaze B2 is listed as $5/mo for 1T

- this is probably the cheapest option, and is worth noting for future reference

- Google Coldline is listed as $7/mo for 1T
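To compare the monthly prices listed above against our current yearly spend, here is a minimal sketch that annualizes them. It uses only the figures from this log (about $100/year now, about 1 TB stored) and ignores egress, request, and retrieval fees, which could change the picture.

```python
# Rough current spend from the log: S3 + Glacier for ~1 TB.
CURRENT_ANNUAL_USD = 100

# Listed prices per month for 1 TB, as quoted in the log.
MONTHLY_USD_PER_TB = {
    "Microsoft OneDrive": 7,
    "Backblaze B2": 5,
    "Google Coldline": 7,
}

# Annualize each price and compute the difference from current spend.
annual_usd = {name: monthly * 12 for name, monthly in MONTHLY_USD_PER_TB.items()}
savings_usd = {name: CURRENT_ANNUAL_USD - cost for name, cost in annual_usd.items()}
cheapest = min(annual_usd, key=annual_usd.get)

for name in annual_usd:
    print(f"{name}: ${annual_usd[name]}/year (saves ${savings_usd[name]}/year)")
print("cheapest:", cheapest)
```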

- spent some time documenting our server's resource usage now that I have the data to determine what we actually need following the wiki migration. The result: our server is heavily overprovisioned. We could get two hetzner cloud nodes (one for prod, one for dev), and still save 100 EUR/year. https://wiki.opensourceecology.org/wiki/OSE_Server#Looking_Forward

- spent some time curating our wiki IT info

- fixed mod_security false-positive

- 981320 SQLI

- imported Marcin's new gpg key into the ossec keyring

mkdir -p /var/tmp/gpg pushd /var/tmp/gpg # write multi-line to file for documentation copy & paste cat << EOF > /var/tmp/gpg/marcin.pubkey2.asc -----BEGIN PGP PUBLIC KEY BLOCK----- Version: GnuPG v1 mQINBFsDbMMBEACypyMZ/J9+M1DvNd+EGhIpRXEKH5WldOXlZtJAh1tGH5cvqBwR QDCCyVAA+WsiE0IQJByrpxPbj25ypPSMcyhJYmmDOa/0R/NdVuBgJNmWFSyfB/aU dKAC3brLMC8zUffieug0bVE6vI8QE/DUAGKU5AyNFOD3itFGgI7HtlaknU9ql7um VxrOM7VU/GmqZcg5hqno6r1mhiG9boitM10lSav+Hylv3Es01pLUvy/NlJEZ10lZ rQ8RHIQSTpxj9C9L32DjvcJ8BfIHzr6aY/xv5tbPDJuLgsPgn6EoUZkNQAyPMV8J 8MT26UmwlA0WvMkHJze+kgsXD5FUk7MuZM5ttEHKsngN5Sim1M+dBnUtg6QG4zpf KhyVOOpag1L3iyCwGMbRIX8cTk2Hk39Csf37QKDUrHMbDqAOcQzpr6YcbEO/PPXW u2VQDJfuiWrgQI7v+ac8uAlH66c6MmEqtsduxVmUYK1C7LlDmcswa4kOP/5WkpJ8 kFwicIM/qpZgewpjtD+ATADs0knA+D+MBQSoMI6FhCLLytz2JpIEtHJFDvDuV/7Q Yi+RDyFqNr+i7rkNe/xpb5lzrLutN7JEYeMn+LsPH6Ucd8mGJ7j88c0OZUidkOu5 KErG4xHqee87B+Et0/LfEABogDAPnqH027tCMXHu8g2Ih8kZnglEnNeP6wARAQAB tDBNYXJjaW4gSmFrdWJvd3NraSA8bWFyY2luQG9wZW5zb3VyY2VlY29sb2d5Lm9y Zz6JAj0EEwEIACcFAlsDbMMCGwMFCRLMAwAFCwkIBwIGFQgJCgsCBBYCAwECHgEC F4AACgkQ186EWruNpsEEGg//f195qc3hJcyon9Rq+tH7yp8hJN+Pcy3WBnj0Amvg fPYGR1W5qbCnd9NPdcAz8J1H1Hsbz9+zYDlhIp71iuTlNvtT821du9bLwqplN9UI YNkRAYm/kwd2qAYNPdVKW0lY9OhvyZA5XrjyQQxVtzQmuB0kTrzX1Br6ZWnMNavd X2yhfbxJY71HbETMw/VLBubbl8RwpZGzXqye23Il8SryicDk9oIXF6uExB4Ym7PJ 3+h4Sn9dvAQOEsjl57r/ZHNctb4VLqJfVo12ba2XxTx0TGrdYGbiONHu9P2u+Zwj +NlGmKq2+h2V4pdfl5buj90NtdV3GjJ6wBiBZ0sAO0tKIAp1PWP+Ayo9ep2G8H4A R8WMJZ6VaXw54C2gLlyzwrsZztqBWljfL8tHtCyOKjN1YJuucn2pzEz/ENTOC3cn SNzBTXSi/fJBaBgbueMtDE1j0VWjfcm+zIkMfcjUoN+w7gQGEQGc/myvDnEIevcy ITlejx2MnCj3cjkKrOUXct+3pJwWuxFFfWtOUF91cgAd+FrVw7kQSNfS7T4X7jVO frVpAXthQaSJIDas5ZqnBlkCdkF+4Oj8IbpV0RUHNIOy0XXJqb6Z3YVUjQdT+Dup 4wmz6dlNdNWfP0iyo6OOuphz+Tz9ZkPDfLXznR+tz62PB/oeHxE0S/zWDXTeyqWp RyWJAhwEEwEKAAYFAlsDgiEACgkQ/huESU5kDUH+1g//ZoS0E9R6pKfvVBTnuphW gmCuAgGXAxdMioCYYNqn+jGyy6XdDKcsVATJT0pwctMhkAxEajafzaoBC1/pellh vO3c7088/BMzRJYSTHeAANd2qctK00ZZZ149T41TedfGaYOEJSNWyjXAZeOM8dlb 
qLRkFVf2Zo4rG6ij55ywLS7Cqv1TBMwWzx70gl0TnPxBhBj48Mr/JnhYRQVZtm5c MiaTncwGJky2CCEXTJqYGT9wDe74w1GGZz5Png59rs6m/1mibdtQ1YbF9gX5pBoK afpPVRLSISNKyB8PUVNf/2Uqckl1JQ95rcsgTqArcLWeBV4fIm18SfKglYRg2I2u EP4Fz3oLHROQ6aTPzQgfRX7ZFI7w7lEwOSwQTgC0qjH+y+5a7/H/+wuXtfnuHBsu nJikH2MzmccRdUQGNtZLJ5HBVpglV3OAMWbknmGOSWdPPaeD68hhOJlfaq51HA8/ ewav9VDPADL/GBy9zSadWRYLCbmkPaksvYdP0exndeLr/GMNsO/jsI/BBgbtG5EM qc71SEJDjOe+T1/NuoPQiQwaHXgUNgB6/F33sByKPu56M2T+gctpQHg2dw6U7LAK biE8Q3pCoIzz+2/AZd/+vpdzZ71qahBiOMmrGTJfkqWDar8DP+bXHLYDZBYpExPg MB+w06S7CsNzrmhBiuysm++JAhwEEwEKAAYFAlsDgj4ACgkQqj7fcWDi2XvKphAA j/H/atXb2fyN/VJ3tPQ0qsmv3ctDpMnazCwRksTZHzFhZdyi6mu8zlE+iK9SGr5L PTc+jSK02JnuAQcnZHMNrov6wPPAaoRFDQ7Nv9LUmzVJPnxXuoFxF1akkr0cdxpZ 4nfcCIZS0i43RLWSKuFFz81Oy4Med8U9JXq/NxYw/a5D7PZ7flSSUDYrQwgOQtut lCebOPb/iu6A87HJ+bhtQb7G7G68HkFmlATnjA0AmeM/+PQ8AR6YH5mbgQeWmPTq XJdfBs5+AFyUw1zJPa5GPBa+96tqCjOrkxrwR/FCe1L2Q+BfkBRDDg2FA6/pekG4 kzAB++JH3Uai6PSgmifUDMsA++4oRGf7ALqoXnXwu4SOQ2vlrsPjAnV77us5JvdI Wc346uzvcJAyFOmBuQqRKOOsgYpEj1Q5HKkDuZNLM8e89o0dTOwcm4e8BR00GN6+ OyC6D8U8T72kFv3WvW5HqiP5mmGZDBNWLaXFjLJBSUrFVw9OJWuisSbX6JoISE4Y RFhzS/REKLn7LDvVvByI3wZF6GLbfKkdzZHoK0Fc4GFiVloDOC7iGiHV+cw2Ivwx yhsdRciuH5yRnbNhekaNNFddcmq2K6QPLgbDIBX43eFmArRk/mLwyMyvhVQT1NuL NqudMTihZeO10A4evHqHDmiYIi0cRf9OKct0S7bSwJm5Ag0EWwNswwEQAMcuLBNf /iTsBnvrI7cD2S24pVGMowaPDWMD1PEfwdL7dHDA4hTnrJexXHxGTFLiKgwhTdCr ZnBUNmL1CjoN2nO02MlFPcDNsPAa03KSF/IIpx1v/Y7yYN3eJX1nthQ3rPJnguEe L7mgBYtGeKBBdTWGzfHYDYI8IaUP6Bhfc6Yj+a5NVh+NsObhX0IMoa/lQNLDlfav tqdDgi7tMuf/Qyz1VvgpYYzXDq9KdipWssCHEDnIggdlJGemQyQMGuAil1TOC+S8 9D/IbOuo3Wa+YMIu7g6cX0jX8Lp0kBH6yNlmIXvvOzV8smOVwemTl8Lt/9hETJqx aXL9j3DCoYVA87MAGcBD3EMFjQKwVLIWe84B8i5G44yD2DCHBNL/Qeq09klI5T5M BAgYbNoKx130pf0jGD6dzdfDiMgclAuhz5VTkNh5RCu7rdVgHGQKm5f6sVXCuAfl /f3Wv66lyCIHbb+LAxnG07bPHLGgHtrS+xRp7d+y7ezaTSmzcOs8lb1C6D/tJXyV +64lgkTsLid3ljVsMMCRWdRyXYWMOPAt9krFIW6niYHokN5m5uB/l/Vad+PYJ8WA Agpord+A2vSLliogO1BiDX5lcZmlFPSDDAlr5373KGoBSoYIXq6xcqsvkg3F4RCW 
B5YEWgBiX9roXzZ7oMUUK7uhDixFMqAWmN+dABEBAAGJAiUEGAEIAA8FAlsDbMMC GwwFCRLMAwAACgkQ186EWruNpsEHSw//dXXtuO5V6M+OZ5ArMj1vFudU57PNT+35 5prq6IIDCeRiTanpjIR3GuOGtK3D+4r6Nk1lCoG0CwFPUu7k51gsdkB9DRrRYKX5 fXkl8UC+e8dKo9bMS3jyY9nC7Mv1DPc4gx7VoZeXsxlqz60tEG3HWehLGt03z47C 5I9VVLkTvxt73VH9BHcZaScyPfn3kOlbBSW6U/6ZnRJQ6pc6xPxMsqo0OznYgU9k YpkS6xwjqT7MYCw4DiW5kSIqNBRMl3suLUUvsJH4OOjilIt4Su+GxftrokmayRYr XRP0k/Tnf7nrjPl7znbCFxEEVSezaQE2rxQCiKXkmvYzaPjJXZmPgz49oih24Tgn Llk70qRoRXt2MkZG3TH/t755ORYl5BUeyhnPSzOD/1BiFJze7N+r5mGtJsdjBSyO LEdjVzsLRhKvheDkrsbguiV8wjaHdfpdPUdYHnWs/HZ7e9HyGoGxaYPRzYosqTu5 pxgIs4c3Toy7nYQjINd/IhLCYL7UBT+ybNMzh15u63UYun37x4mbdkkx7TzZpXex cnP2bJijq/TJD8PRJNY9GFd5fnluk6xpaFH1YAtQbe/YpTHP0xn45Hi91tsv7S7F Tl5+BGflBcIQOF80tOHetUrtH3cjp/dtKCE5ZU5Vt9pxlvQeO+azOH1jXQ35vs2t 7VMKgjAEf/c= =nvDm -----END PGP PUBLIC KEY BLOCK----- EOF gpg --homedir /var/ossec/.gnupg --delete-key marcin gpg --homedir /var/ossec/.gnupg --import /var/tmp/gpg/marcin.pubkey2.asc popd

- confirmed that the right key was there

[root@hetzner2 gpg]# gpg --homedir /var/ossec/.gnupg --list-keys

gpg: WARNING: unsafe ownership on homedir `/var/ossec/.gnupg'

/var/ossec/.gnupg/pubring.gpg

-----------------------------

pub 4096R/60E2D97B 2017-06-20 [expires: 2027-06-18]

uid Michael Altfield <michael@opensourceecology.org>

sub 4096R/9FAD6BEF 2017-06-20 [expires: 2027-06-18]

pub 4096R/4E640D41 2017-09-30 [expires: 2018-10-01]

uid Michael Altfield <michael@michaelaltfield.net>

uid Michael Altfield <vt6t5up@mail.ru>

sub 4096R/745DD5CF 2017-09-30 [expires: 2018-09-30]

pub 4096R/BB8DA6C1 2018-05-22 [expires: 2028-05-19]

uid Marcin Jakubowski <marcin@opensourceecology.org>

sub 4096R/36939DE8 2018-05-22 [expires: 2028-05-19]

- documented this on a new page named Ossec https://wiki.opensourceecology.org/wiki/Ossec

- I began building the Prusa i3 mk2 extruder assembly

- I have never done this before, but I do have the freecad file https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted.fcstd

- I uploaded the 3 3d printables that I exported from the above freecad file. I had previously exported these stl files so I could import them into Cura & print them on the Jellybox. Now that I have 2x of each of the 3x pieces, I can begin the build with the hardware (springs, extruder, fans, bolts, nuts, washers, etc) that Marcin gave me (he ordered it from McMaster-Carr)

- idler

https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted_idler.stl

- there appears to be some piece (shaft?) (labeled "5x16SH") in the body of the shaft bearing that I don't have

- I may have installed the fan backwards; it's hard to tell which way this thing will blow, but the cad shows it should blow in, towards the heatsink

- I also appear to need 2x more 3d printed pieces:

- the "fan nozzle" for the print fan

- the print fan

- the interface

- the motor

- the proximity sensor

- I also had some issues inserting a nut into the following holes

- NUT_THIN_M012 into the back of the body, which receives the SCHS_M3_25 from the cover

- I extracted stl files for the fan nozzle and a small cylinder for the shaft of the bearing. These have been uploaded to the wiki as well https://wiki.opensourceecology.org/wiki/D3D_Extruder#CAM_.283D_Print_Files_of_Modified_Prisa_i3_MK2.29

- The interface needed to be 3d printed too, but it totally lacked holes. They were sketched, but they didn't go through the "interface plate". I spent a few hours in freecad trying to sketch it on the face of the plate & push it through, but it only went partially through the interface plate (I made the length 999mm, reversed, & "full length"; nothing helped). Marcin took the file, copied the interface plate

Tue May 29, 2018

- did some hazelnut fence work in the morning

- fixed some mod_security false-positives while attempting to upload my log

- 950010 generic attack

- 973310 xss

- I presented my video conference research & wiki migration doings at today's meeting

- I began to 3d print the Prusa i3mk2 components

- I'm working on the JellyBox that I built last week

- I installed freecad on my Debian 9 system (I've changed distros since my dev exam)

- In freecad, I used spacebar to isolate the 3 distinct components (idler, body, & cover) & export to stl

- I downloaded the AppImage of Cura 3.3.1 (https://ultimaker.com/en/products/ultimaker-cura-software/platform/3), made it executable, & fired it up. It allowed me to simply choose the IMADE3D JellyBox config. The IMADE3D instructions for Cura linked to a dead link and an old version of Cura, and the website's resources only provide a dmg (I'm using Linux, not a Mac). My best guess is that it *used* to need those resources, but now the config is built into recent versions of Cura

- I opened the stl file that freecad spat out in Cura. I just rotated the object so the largest flat surface was facing down, then told Cura to slice it (clicked "Prepare"), and saved it as a g-code file onto the sd card

- I took the sd card, threw it into the sd card/lcd display on the Jellybox, browsed to it, and clicked "Print"

- the first layer didn't stick, so I made the z-index less negative (moved from approx -22 to -9). That helped in subsequent prints

- I noticed that the fans weren't spinning!

- Marcin & I spent some time troubleshooting the fans. I had the cables plugged into the wrong fans. One fan (on the heatsink for the extruder itself) should be plugged in all the time & spinning whenever the system is on. The other fan (blowing on the extruded filament to cool it) should be turned on-and-off by the controller

- after adjusting the z-height (I needed -0.70!), I finally got a good stick & printed a piece. I set 2 more to print at the same time, so the 3d printed extruder parts should be printed by morning

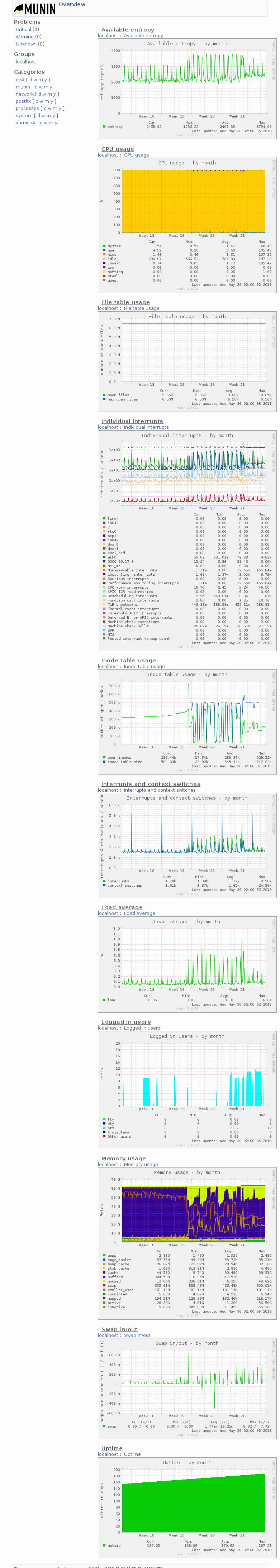

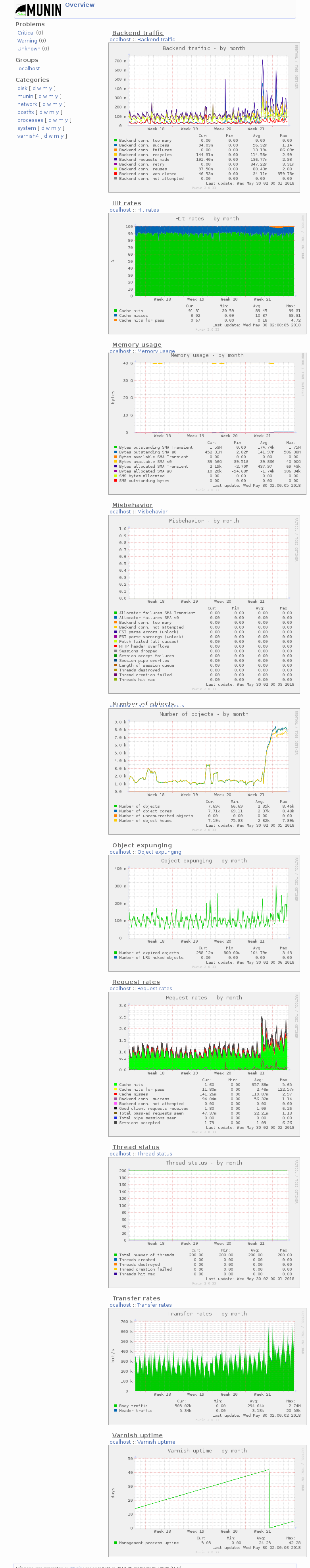

- I checked the server's munin graphs again. The monthly is showing a nice, obvious change from before/after the wiki migration. The current state is all our sites being served over https and cached by varnish on a single server = hetzner2

- Obviously, the network graphs show a great increase in traffic after the migration

- The system graphs show a _slight_ increase in CPU, load, & swap.

- The varnish graphs show an obvious increase in backend traffic

- The varnish graphs show a sliver of orange hit-for-pass appear. This should be our logged-in wiki users. The cache hits still stay wonderfully high, which is why the server's cpu/load is barely impacted by this great inflow of traffic.

- The varnish graphs show a definite (but small) uptick in RAM usage

- The varnish graphs show a great spike in the number of objects, and then we see it tapering off finally after ~ quadrupling.

Mon May 28, 2018

- further emails with Christian

- helped wrap some short welded wire "fencing" around small hazelnut trees to protect from rabbits in the field by the first microhouse at FeF

- discovered that my log article was so damn large that Mediawiki was refusing to serve it with a 413 error

Request Entity Too Large The requested resource /index.php does not allow request data with POST requests, or the amount of data provided in the request exceeds the capacity limit.

- so I segregated my log into subpages per quarter https://en.wikipedia.org/wiki/Wikipedia:Subpages

Sun May 27, 2018

- Helped Christian get his local wiki instance operational

Sat May 26, 2018

- Emailed with Christian about making an offline version of the wiki for browsing in kiwix like wikipedia & other popular wikis https://wiki.kiwix.org/wiki/Content

- we may have to install Parsoid and/or the VisualEditor extension https://www.howtoforge.com/tutorial/how-to-install-visualeditor-for-mediawiki-on-centos-7/

- but I asked Christian to first look into methods that do not require Parsoid, such as zimmer http://www.openzim.org/wiki/Build_your_ZIM_file#zimmer

- Christian hit some 403 forbidden messages when hitting the mediawiki api, so I attempted to whitelist his ip address from all mod_security rules

# disable mod_security with rules as needed
# (found by logs in: /var/log/httpd/modsec_audit.log)
<IfModule security2_module>
    SecRuleRemoveById 960015 960024 960904 960015 960017 970901 950109 981172 981231 981245 973338 973306 950901 981317 959072 981257 981243 958030 973300 973304 973335 973333 973316 200004 973347 981319 981240 973301 973344 960335 960020 950120 959073 981244 981248 981253 973334 973332 981242 981246 960915 200003 981173 981318 981260 950911 973302 973324 973317 981255 958057 958056 973327 950018 950001 958008 973329 950907 950910 950005 950006 959151 958976 950007 959070 950908 981250 981241 981252 981256 981249 981251 973336 958006 958049 958051 973305 973314 973331 973330 973348 981276 959071 973337 958018 958407 958039 973303 973315 973346 973321 960035

    # set the (sans file) POST size limit to 1M (default is 128K)
    SecRequestBodyNoFilesLimit 1000000

    # whitelist an entire IP that we use for scraping mediawiki to produce
    # kiwix-ready zim files for archival & offline viewing
    SecRule REQUEST_HEADERS:X-Forwarded-For "@Contains 176.56.237.113" phase:1,nolog,allow,pass,ctl:ruleEngine=off,id:1
</IfModule>
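The rule IDs that end up in SecRuleRemoveById come from the audit log, so a quick tally of which rules fire most often can help decide what to whitelist. This is only a minimal sketch: it assumes the audit log entries carry the usual `[id "NNNNNN"]` tag, and the function name `count_modsec_rule_ids` is made up for illustration (it is not part of mod_security).

```shell
# count_modsec_rule_ids [LOGFILE]
# hypothetical helper: prints "<count> <rule id>" lines, most frequent first
count_modsec_rule_ids() {
  # default to the audit log path mentioned in the config comment
  local logFile="${1:-/var/log/httpd/modsec_audit.log}"

  # pull out every [id "NNNNNN"] tag, keep only the digits, then tally
  grep -oE '\[id "[0-9]+"\]' "${logFile}" \
    | grep -oE '[0-9]+' \
    | sort | uniq -c | sort -rn
}
```

Running `count_modsec_rule_ids` as root right after reproducing a blocked request should put the offending rule IDs near the top of the list.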

- In testing, I accidentally banned myself. When validating, I saw that our server had banned 2 other IPs, which are crawlers. I went to their site, and found that they obey robots.txt's "Crawl-delay" option http://mj12bot.com/

[root@hetzner2 httpd]# iptables -L
Chain INPUT (policy ACCEPT)
target     prot opt source                              destination
DROP       all  --  crawl1.bl.semrush.com               anywhere
DROP       all  --  crawl-vfyrb9.mj12bot.com            anywhere
DROP       all  --  184-157-49-133.dyn.centurytel.net   anywhere
ACCEPT     all  --  anywhere                            anywhere
ACCEPT     all  --  anywhere                            anywhere    state RELATED,ESTABLISHED
ACCEPT     icmp --  anywhere                            anywhere
ACCEPT     tcp  --  anywhere                            anywhere    state NEW tcp dpt:http
ACCEPT     tcp  --  anywhere                            anywhere    state NEW tcp dpt:https
ACCEPT     tcp  --  anywhere                            anywhere    state NEW tcp dpt:pharos
ACCEPT     tcp  --  anywhere                            anywhere    state NEW tcp dpt:krb524
ACCEPT     tcp  --  anywhere                            anywhere    state NEW tcp dpt:32415
LOG        all  --  anywhere                            anywhere    limit: avg 5/min burst 5 LOG level debug prefix "iptables IN denied: "
DROP       all  --  anywhere                            anywhere

Chain FORWARD (policy ACCEPT)
target     prot opt source                              destination
DROP       all  --  crawl1.bl.semrush.com               anywhere
DROP       all  --  crawl-vfyrb9.mj12bot.com            anywhere
DROP       all  --  184-157-49-133.dyn.centurytel.net   anywhere

Chain OUTPUT (policy ACCEPT)
target     prot opt source                              destination
ACCEPT     all  --  anywhere                            anywhere    state RELATED,ESTABLISHED
ACCEPT     all  --  localhost.localdomain               localhost.localdomain
ACCEPT     udp  --  anywhere                            ns1-coloc.hetzner.de    udp dpt:domain
ACCEPT     udp  --  anywhere                            ns2-coloc.hetzner.net   udp dpt:domain
ACCEPT     udp  --  anywhere                            ns3-coloc.hetzner.com   udp dpt:domain
LOG        all  --  anywhere                            anywhere    limit: avg 5/min burst 5 LOG level debug prefix "iptables OUT denied: "
DROP       tcp  --  anywhere                            anywhere    owner UID match apache
DROP       tcp  --  anywhere                            anywhere    owner UID match mysql
DROP       tcp  --  anywhere                            anywhere    owner UID match varnish
DROP       tcp  --  anywhere                            anywhere    owner UID match hitch
DROP       tcp  --  anywhere                            anywhere    owner UID match nginx
[root@hetzner2 httpd]#
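To spot banned hosts without reading the whole listing, the DROP rules that name a specific source can be filtered out of the `iptables -L` output. A rough sketch, assuming the default column layout shown above (target, prot, opt, source, destination); `list_banned_sources` is a hypothetical helper name, not an existing command.

```shell
# list_banned_sources
# hypothetical helper: reads `iptables -L` output on stdin and prints the
# source column of every DROP rule that targets a specific host
list_banned_sources() {
  # column 4 is the source; "anywhere" (or 0.0.0.0/0 when using -n) marks
  # the catch-all DROP rules, which are not bans of a single host
  awk '$1 == "DROP" && $4 != "anywhere" && $4 != "0.0.0.0/0" { print $4 }'
}
```

Usage would be something like `iptables -L INPUT | list_banned_sources`, run as root.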

- so I created a robots.txt file per https://www.mediawiki.org/wiki/Manual:Robots.txt

cat << EOF > /var/www/html/wiki.opensourceecology.org/htdocs/robots.txt
User-agent: *
Disallow: /index.php?
Disallow: /index.php/Help
Disallow: /index.php/Special:
Disallow: /index.php/Template
Disallow: /wiki/Help
Disallow: /wiki/Special:
Disallow: /wiki/Template
Crawl-delay: 15
EOF
chown not-apache:apache /var/www/html/wiki.opensourceecology.org/htdocs/robots.txt
chmod 0040 /var/www/html/wiki.opensourceecology.org/htdocs/robots.txt

- I reset the password for 'Zeitgeist_C.Real_-' & sent them an email

- did some back-of-the-envelope calculations for crypto currency mining with our wiki as piratebay does

- coinhive says 30 hashes/sec is reasonable. https://coinhive.com/info/faq

- yesterday we got 31,453 hits. Much of that is probably spiders. Unfortunately, awstats doesn't give unique visitors per day, only per month. But we've basically been online for only one day, and the monthly says 2,422 unique visitors this month. So let's say we get 2,000 visitors per day. 81.4% of our traffic is <=30s long. Average visit is 208s (probably some people, i.e. all our devs, leave it open in the background for a very long time). Let's be conservative & say that 100-81.4=18.6% (rounded down to 18%) of our daily users = 2000 * 0.18 = 360 users are on the site for 30 seconds each. That's 360 * 30 = 10800 seconds of mining per day

- Coinhive pays out 0.000064 XMR per 1 million hashes. We'll be generating 10800 seconds/day * 30 hashes/s = 324,000 hashes per day. 324,000 * 30 days = 9,720,000 hashes per month. 9720000/1000000 = 9.72 * 0.000064 xmr = 0.00062208 xmr / month.

- At today's exchange rate, that's $0.10 per month. Fuck.

- ^ that said, my calculations are extremely conservative. If we actually have 2,422 unique visitors per day spending an average of 208 seconds on the site, then it's 2422 * 208 = 503776 seconds per day. 503776 * 30 hashes/s = 15113280 hashes per day. 15113280 * 30 days = 453398400 hashes per month. 453398400/1000000 = 453.3984 * 0.000064 xmr = 0.029017498 xmr / month.

- At today's exchange rate, that's $4.85/month.

- So if we cryptomine on our wiki users, we're looking at somewhere between $0.10 and $5 per month profit. Meh.
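The two estimates above can be reproduced with a few lines of shell. This is just a sketch of the arithmetic in this entry: the function name `estimate_xmr_per_month` and its argument order are made up for illustration, while the 30 hashes/s rate, the 0.000064 XMR per 1 million hashes payout, and the 30-day month are the figures used above.

```shell
# estimate_xmr_per_month VISITORS_PER_DAY SECONDS_PER_VISIT
# hypothetical helper: prints the estimated XMR earned per month
estimate_xmr_per_month() {
  awk -v visitors="$1" -v seconds="$2" 'BEGIN {
    hashesPerSec  = 30         # per-visitor hash rate quoted from the coinhive faq
    xmrPerMillion = 0.000064   # payout per 1 million hashes
    daysPerMonth  = 30

    hashes = visitors * seconds * hashesPerSec * daysPerMonth
    printf "%.8f\n", hashes / 1000000 * xmrPerMillion
  }'
}

estimate_xmr_per_month 360 30     # conservative case
estimate_xmr_per_month 2422 208   # optimistic case
```

The first call should print 0.00062208 and the second 0.02901750, matching the two monthly figures worked out by hand (the second rounded to 8 decimal places).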

- fixed mod_security false-positive

- whitelisted 981247 = SQLI

- I created a snapshot of the wiki for Christian to build a local copy for zim-ifying it for kiwix

# DECLARE VARS

snapshotDestDir='/var/tmp/snapshotOfWikiForChris.20180526'

wikiDbName='osewiki_db'

wikiDbUser='osewiki_user'

wikiDbPass='CHANGEME'

stamp=`date +%Y%m%d_%T`

mkdir -p "${snapshotDestDir}"

pushd "${snapshotDestDir}"

time nice mysqldump -u"${wikiDbUser}" -p"${wikiDbPass}" --databases "${wikiDbName}" > "${wikiDbName}.${stamp}.sql"

time nice mysqldump --single-transaction -u"${wikiDbUser}" -p"${wikiDbPass}" --databases "${wikiDbName}" | gzip -c > "${wikiDbName}.${stamp}.sql.gz"

time nice tar -czvf "${snapshotDestDir}/wiki.opensourceecology.org.vhost.${stamp}.tar.gz" /var/www/html/wiki.opensourceecology.org/*
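Before handing a snapshot like this to someone else, it may be worth checking that the compressed files are intact. A minimal sketch, assuming every artifact in the snapshot dir ends in `.gz` as in the commands above; `verify_snapshot` is a hypothetical helper name, and it only tests the gzip streams (it does not restore or inspect the dump itself).

```shell
# verify_snapshot DIR
# hypothetical helper: runs `gzip -t` on every *.gz file in DIR and
# returns non-zero if any of them fails the integrity test
verify_snapshot() {
  local dir="$1" failed=0 f

  for f in "${dir}"/*.gz; do
    [ -e "${f}" ] || continue   # the glob matched nothing

    if gzip -t "${f}" 2> /dev/null; then
      echo "OK: ${f}"
    else
      echo "CORRUPT: ${f}"
      failed=1
    fi
  done

  return "${failed}"
}
```

For the snapshot above, this would be called as `verify_snapshot "${snapshotDestDir}"`.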

- I drove to town to pick up some plexiglass and epoxy for the 3d printer

- I worked on Marcin's computer for a bit, updated the OSE Marlin github to include compartmentalized Configuration.h files for distinct D3D flavors, and added a new one with the LCD. I got the LCD connected & working. Then the SD card connected & working

- When we went to print from the SD card, the print nozzle was too high. We went back to Marlin to print, and the same thing happened.

- We need to fix the z probe (replace it?) before we can proceed further...

Fri May 25, 2018

- Waking up the day after the migration and, hooray, nothing is broken!

- I got a few emails from people saying thank you, a few asking me to delete their account from the wiki, and one from someone asking for help resetting their password.

- I confirmed that the self-password-reset function worked for me (it sent me an email with a temporary password, I used it, logged-in, then reset my password)

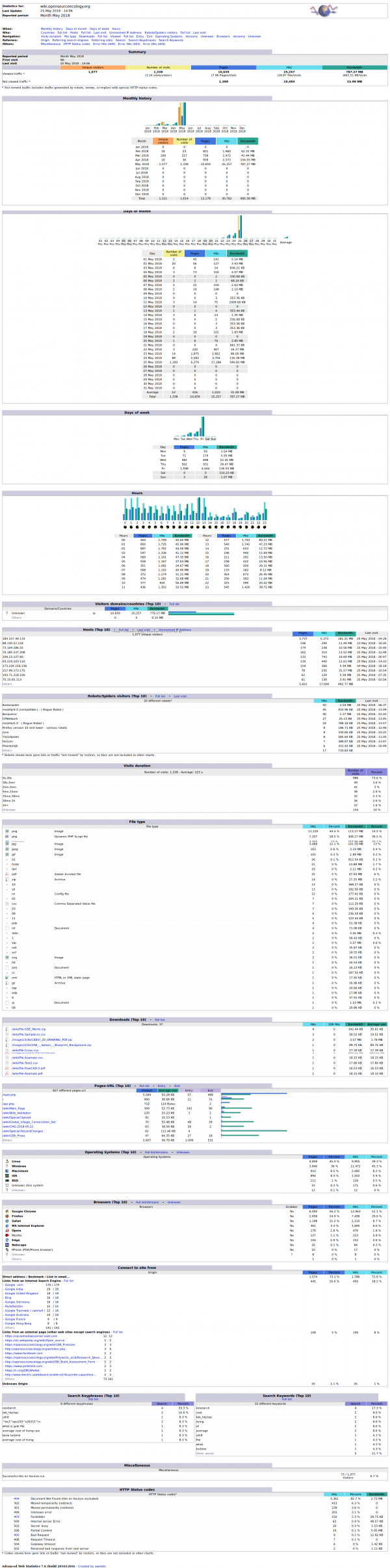

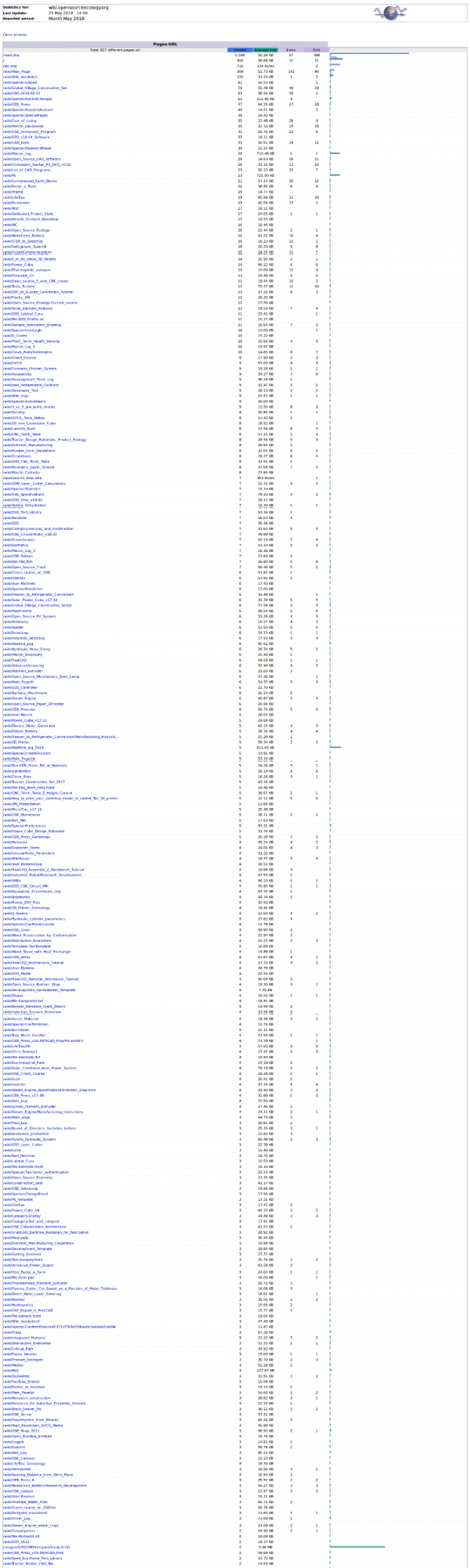

- I hopped over to awstats & munin. Finally, awstats is getting some good data.

- Munin looks good too

- obviously there's a jump in the graphs after the wiki (our most popular site) began sending more traffic to hetzner2. Most notable is the number of connections

- CPU & Load aren't much of a change. But if we didn't have varnish, that certainly wouldn't be the case.

- there are some minor changes to the varnish hit rate graph. A tiny sliver of orange hit-for-pass appeared at the top of the graph--that's probably our wiki users that are logged-in. But the hit rate still seems >90%, which is awesome! The dip in the hit rate was from me manually giving varnish a restart--surprisingly it didn't dip much, and it quickly returned to >90% hit rate!

- the number of objects in the varnish cache nearly doubled. I would have expected it to more than double, but maybe with time..

- I emailed screencaps of awstats & munin to Marcin & CCd Catarina

- I confirmed that the backup on hetzner1 finished, and that it included the wiki

osemain@dedi978:~$ bash -c 'source /usr/home/osemain/backups/backup.settings; ssh $RSYNC_USER@$RSYNC_HOST du -sh hetzner1/*'
12G   hetzner1/20180501-052002
259M  hetzner1/20180502-052001
0     hetzner1/20180520-052001
12G   hetzner1/20180521-052001
12G   hetzner1/20180522-052001
12G   hetzner1/20180523-052001
12G   hetzner1/20180524-052001
481M  hetzner1/20180524-141649
12G   hetzner1/20180524-233533
12G   hetzner1/20180525-052001

- I moved the now-static wiki files from the old server into the noBackup dir, so that we won't have those being copied to dreamhost nightly anymore (the backup size should drop from 12G to 0.5G).

osemain@dedi978:~$ mkdir noBackup/deleteMeIn2019/osewiki_olddocroot
osemain@dedi978:~$ mv public_html/w noBackup/deleteMeIn2019/osewiki_olddocroot/
osemain@dedi978:~$