|

|


| | # [[User:Maltfield]] |

| | # [[Special:Contributions/Maltfield]] |

| | |

| | =Sat Jun 02, 2018= |

| | # today I helped Marcin build the D3D printer in a workshop at a homestead near Lawrence, Kansas. I made a few notes about what went wrong & how we can improve this machine for future iterations |

| | ## The bolts for the carriage & end pieces (the 3d printed halves that are clamped around the long metal axis rods by bolts/nuts) are inaccessible under the motor. So if there's an issue, you have to take off the motor's 4 small bolts to access the end piece's 4 bolts. This happened to me when I used the same sized bolts in all 4x holes. In fact, one or two of the bolts should go through the metal frame of the cube. So I had to remove the motor to replace that bolt. Indeed, this also had to be done on a few of the other 5 axes as well, slowing down the build. It would be great if we could alter this piece by moving the bolts further from the motor, including making the pieces larger, if necessary. |

| | ## One new addition to this design was the LCD screen, and a piece of plexiglass to which we zip-tied all our electronics, then mounted the plexiglass to the frame via magnets. The issue: When we arranged the electronics on the plexiglass, we were mostly concerned about wire location & lengths. We did not consider that the LCD module (which has an SD card slot on its left side) would need to be accessible. Instead, it was butted against the heated bed relay, making the SD card slot inaccessible. In future builds, we should ensure that this component has nothing to its left side, so that the SD card slot remains accessible. |

| | # we used my phone + Open Camera to capture a time-lapse of the build |

| | |

| | =Fri Jun 01, 2018= |

| | # spent another couple hours installing tree protectors for the hazelnut trees. This is my last day, and the total count of protectors I've installed is 66. I spent a total of about 10 hours putting these 66 protectors up. |

| | # I fixed a modsecurity false-positive |

| | ## 950911 generic attack, http response splitting |

| | # spent some time researching steel wire wrapping to see whether it could be used in a plastic, 3d printed composite http://www.appropedia.org/Open_Source_Multi-Head_3D_Printer_for_Polymer-Metal_Composite_Component_Manufacturing |

| | # spent some time researching carbon fiber to see whether it could be used in a plastic, 3d printed composite |

| | # spent some time researching bamboo fiber to see whether it could be used in a plastic, 3d printed composite |

| | ## further research is necessary on methods for mechanical retting of bamboo without chemicals |

| | |

| | =Thu May 31, 2018= |

| | # spent another couple hours installing tree protectors for the hazelnut trees after Marcin gave me more wire from the workshop yesterday. 'Current count for hazelnut trees protected is 54.' |

| | ## The field is quite large, but I estimate that I'm 25-40% through the keylines. That means, as of 2018-05 (or about 1 year after they were planted), there are approximately a few hundred hazelnut trees planted along keylines at FeF. If those survived last winter (and they can get proper protection from rabbits), then they'll probably stick around. Even though the numbers are far less than the numbers planted, it's hard to complain about having _only_ a few 'hundred' hazelnut trees! |

| | # I spent a couple hours in the shop attempting to build a square side (ie: for our D3D printer) out of cut metal strips joined with quick-set epoxy. Logs |

| | # I finished assembling one of the extruders! |

| | ## some of these m3 bolts are 18 mm long, some are 25 mm, one is 20mm. It would be great if we could make them all 20mm. |

| | ## some of these bolts are supposed to just go straight into the plastic. That doesn't work well. The bolts don't go in & usually just fall out |

| | ## and the spots where we're supposed to insert a nut into a hole & push it to the back don't work so well. In contrast, the nut catchers (where you slide the nut in sideways, rather than pushing it back into the hole) work *great*. If possible, we should use a nut catcher on all bolts. Or just have a washer/nut outside the structure entirely, if that's possible. |

| | |

| | =Wed May 30, 2018= |

| | # spent some time installing tree protectors for the hazelnut trees before running out of wire |

| | # spent some time researching GitHub file size limits. There is no hard limit on repo size, but they ask that we keep the repo under 1G. File sizes are capped at 100M, which is great. They also have another service called "Git LFS" = Large File Storage. LFS is for files 100M-2G in size. It also does store versions. This is provided for free up to 1G (so the 2G limit isn't free!) https://blog.github.com/2015-04-08-announcing-git-large-file-storage-lfs/ |
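A quick way to spot files that would hit the 100M per-file cap before pushing is sketched below. This is only a sketch: the function name `list_oversized` and its optional size argument are my own invention, and it only scans the working tree, not history that has already been committed.

```shell
# list_oversized DIR [SIZE]
# Print every regular file under DIR that is larger than SIZE (default 100M),
# skipping the .git directory itself. SIZE uses find's -size suffixes (k, M, G).
# Hypothetical helper for illustration; not part of git or GitHub tooling.
list_oversized() {
  find "${1:-.}" -name .git -prune -o -type f -size "+${2:-100M}" -print
}
```

Running `list_oversized . 100M` from the top of a checkout lists the candidates for Git LFS (or for leaving out of the repo entirely).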

| | # The GitHub page pointed me to a backup solution whose website had a useful comparison of costs for their competitors' services https://www.arqbackup.com/features/ |

| | # Right now I'm estimating that we're paying ~$100/year for a combination of s3 + glacier storage of ~1 TB. We pay-what-we-use, but the process of using it is so damn complicated (and slow for Glacier!) that if we could pay <$100 for 1T elsewhere, it's worth considering |

| | ## Microsoft OneDrive is listed as $7/mo for 1T |

| | ## Backblaze B2 is listed as $5/mo for 1T |

| | ### this is probably the cheapest option, and is worth noting for future reference |

| | ## Google Coldline is listed as $7/mo for 1T |
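To put the monthly list prices above next to our ~$100/year estimate, here is a rough back-of-the-envelope sketch. It assumes the listed price is the whole cost for 1T (it ignores any transfer or retrieval fees, which I have not checked), and the helper name `annual_cost` is made up for this note.

```shell
# annual_cost MONTHLY: convert a monthly price in whole dollars to a yearly price.
annual_cost() {
  echo $(( $1 * 12 ))
}

current=100   # our estimated ~$100/year for s3 + glacier

# name:monthly-price pairs, taken from the list above
for entry in "Microsoft OneDrive:7" "Backblaze B2:5" "Google Coldline:7"; do
  name="${entry%%:*}"
  monthly="${entry##*:}"
  yearly="$(annual_cost "$monthly")"
  echo "$name: \$$yearly/year (saves \$$(( current - yearly ))/year vs. current)"
done
```

With the prices listed above this works out to $84/year for OneDrive and Coldline and $60/year for Backblaze B2, so all three come in under our current estimate.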

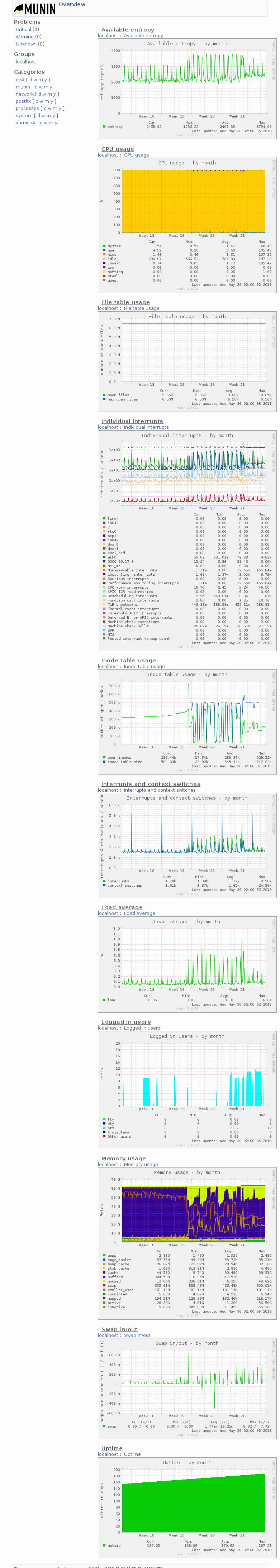

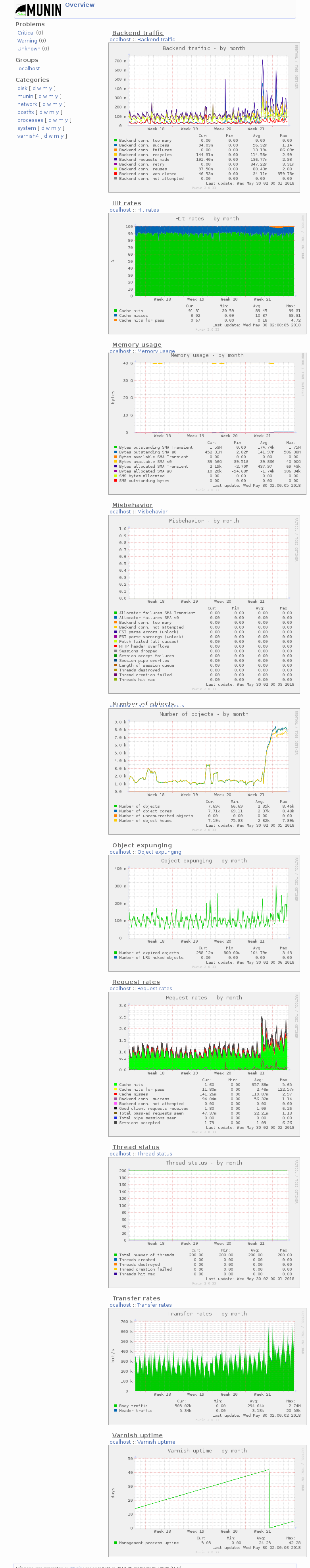

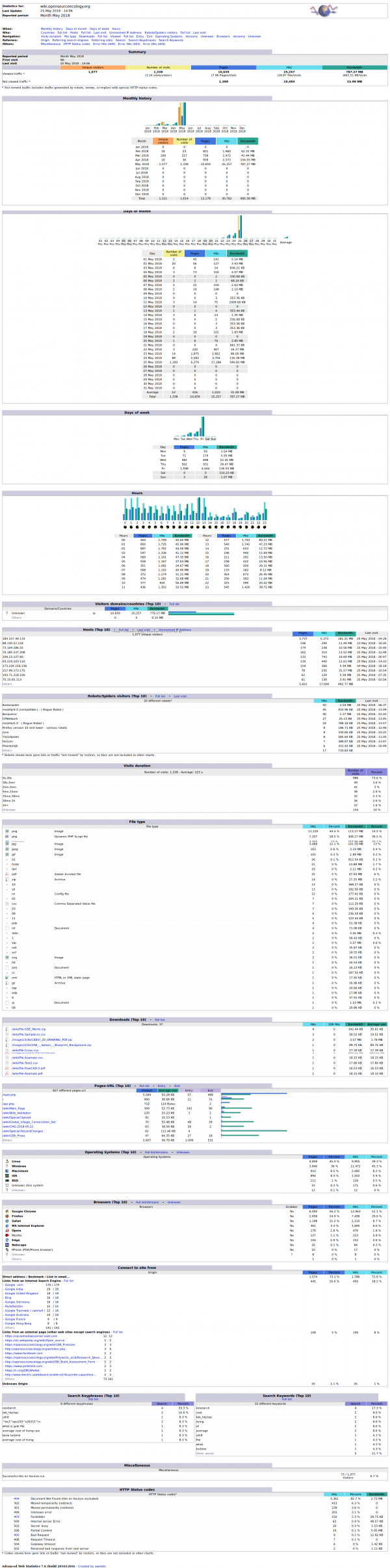

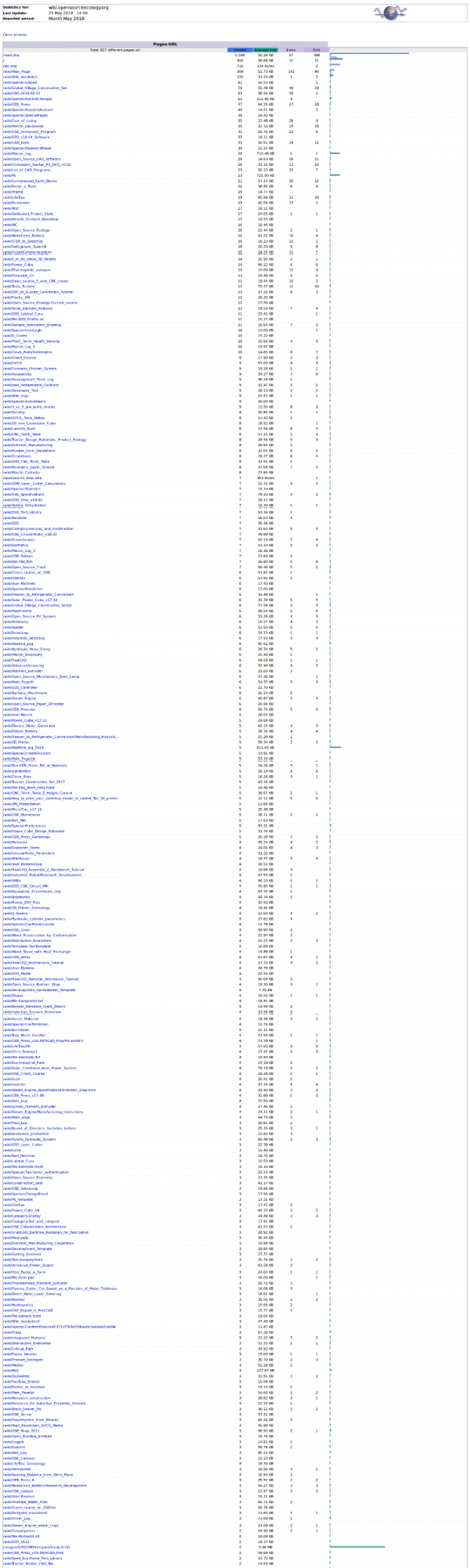

| | # spent some time documenting our server's resource usage now that I have the data to determine what we actually need following the wiki migration. The result: our server is heavily overprovisioned. We could get two hetzner cloud nodes (one for prod, one for dev), and still save 100 EUR/year. https://wiki.opensourceecology.org/wiki/OSE_Server#Looking_Forward |

| | # spent some time curating our wiki IT info |

| | # fixed mod_security false-positive |

| | ## 981320 SQLI |

| | # imported Marcin's new gpg key into the ossec keyring |

| | <pre> |

| | mkdir -p /var/tmp/gpg |

| | pushd /var/tmp/gpg |

| | # write multi-line to file for documentation copy & paste |

| | cat << EOF > /var/tmp/gpg/marcin.pubkey2.asc |

| | -----BEGIN PGP PUBLIC KEY BLOCK----- |

| | Version: GnuPG v1 |

| | |

| | mQINBFsDbMMBEACypyMZ/J9+M1DvNd+EGhIpRXEKH5WldOXlZtJAh1tGH5cvqBwR |

| | QDCCyVAA+WsiE0IQJByrpxPbj25ypPSMcyhJYmmDOa/0R/NdVuBgJNmWFSyfB/aU |

| | dKAC3brLMC8zUffieug0bVE6vI8QE/DUAGKU5AyNFOD3itFGgI7HtlaknU9ql7um |

| | VxrOM7VU/GmqZcg5hqno6r1mhiG9boitM10lSav+Hylv3Es01pLUvy/NlJEZ10lZ |

| | rQ8RHIQSTpxj9C9L32DjvcJ8BfIHzr6aY/xv5tbPDJuLgsPgn6EoUZkNQAyPMV8J |

| | 8MT26UmwlA0WvMkHJze+kgsXD5FUk7MuZM5ttEHKsngN5Sim1M+dBnUtg6QG4zpf |

| | KhyVOOpag1L3iyCwGMbRIX8cTk2Hk39Csf37QKDUrHMbDqAOcQzpr6YcbEO/PPXW |

| | u2VQDJfuiWrgQI7v+ac8uAlH66c6MmEqtsduxVmUYK1C7LlDmcswa4kOP/5WkpJ8 |

| | kFwicIM/qpZgewpjtD+ATADs0knA+D+MBQSoMI6FhCLLytz2JpIEtHJFDvDuV/7Q |

| | Yi+RDyFqNr+i7rkNe/xpb5lzrLutN7JEYeMn+LsPH6Ucd8mGJ7j88c0OZUidkOu5 |

| | KErG4xHqee87B+Et0/LfEABogDAPnqH027tCMXHu8g2Ih8kZnglEnNeP6wARAQAB |

| | tDBNYXJjaW4gSmFrdWJvd3NraSA8bWFyY2luQG9wZW5zb3VyY2VlY29sb2d5Lm9y |

| | Zz6JAj0EEwEIACcFAlsDbMMCGwMFCRLMAwAFCwkIBwIGFQgJCgsCBBYCAwECHgEC |

| | F4AACgkQ186EWruNpsEEGg//f195qc3hJcyon9Rq+tH7yp8hJN+Pcy3WBnj0Amvg |

| | fPYGR1W5qbCnd9NPdcAz8J1H1Hsbz9+zYDlhIp71iuTlNvtT821du9bLwqplN9UI |

| | YNkRAYm/kwd2qAYNPdVKW0lY9OhvyZA5XrjyQQxVtzQmuB0kTrzX1Br6ZWnMNavd |

| | X2yhfbxJY71HbETMw/VLBubbl8RwpZGzXqye23Il8SryicDk9oIXF6uExB4Ym7PJ |

| | 3+h4Sn9dvAQOEsjl57r/ZHNctb4VLqJfVo12ba2XxTx0TGrdYGbiONHu9P2u+Zwj |

| | +NlGmKq2+h2V4pdfl5buj90NtdV3GjJ6wBiBZ0sAO0tKIAp1PWP+Ayo9ep2G8H4A |

| | R8WMJZ6VaXw54C2gLlyzwrsZztqBWljfL8tHtCyOKjN1YJuucn2pzEz/ENTOC3cn |

| | SNzBTXSi/fJBaBgbueMtDE1j0VWjfcm+zIkMfcjUoN+w7gQGEQGc/myvDnEIevcy |

| | ITlejx2MnCj3cjkKrOUXct+3pJwWuxFFfWtOUF91cgAd+FrVw7kQSNfS7T4X7jVO |

| | frVpAXthQaSJIDas5ZqnBlkCdkF+4Oj8IbpV0RUHNIOy0XXJqb6Z3YVUjQdT+Dup |

| | 4wmz6dlNdNWfP0iyo6OOuphz+Tz9ZkPDfLXznR+tz62PB/oeHxE0S/zWDXTeyqWp |

| | RyWJAhwEEwEKAAYFAlsDgiEACgkQ/huESU5kDUH+1g//ZoS0E9R6pKfvVBTnuphW |

| | gmCuAgGXAxdMioCYYNqn+jGyy6XdDKcsVATJT0pwctMhkAxEajafzaoBC1/pellh |

| | vO3c7088/BMzRJYSTHeAANd2qctK00ZZZ149T41TedfGaYOEJSNWyjXAZeOM8dlb |

| | qLRkFVf2Zo4rG6ij55ywLS7Cqv1TBMwWzx70gl0TnPxBhBj48Mr/JnhYRQVZtm5c |

| | MiaTncwGJky2CCEXTJqYGT9wDe74w1GGZz5Png59rs6m/1mibdtQ1YbF9gX5pBoK |

| | afpPVRLSISNKyB8PUVNf/2Uqckl1JQ95rcsgTqArcLWeBV4fIm18SfKglYRg2I2u |

| | EP4Fz3oLHROQ6aTPzQgfRX7ZFI7w7lEwOSwQTgC0qjH+y+5a7/H/+wuXtfnuHBsu |

| | nJikH2MzmccRdUQGNtZLJ5HBVpglV3OAMWbknmGOSWdPPaeD68hhOJlfaq51HA8/ |

| | ewav9VDPADL/GBy9zSadWRYLCbmkPaksvYdP0exndeLr/GMNsO/jsI/BBgbtG5EM |

| | qc71SEJDjOe+T1/NuoPQiQwaHXgUNgB6/F33sByKPu56M2T+gctpQHg2dw6U7LAK |

| | biE8Q3pCoIzz+2/AZd/+vpdzZ71qahBiOMmrGTJfkqWDar8DP+bXHLYDZBYpExPg |

| | MB+w06S7CsNzrmhBiuysm++JAhwEEwEKAAYFAlsDgj4ACgkQqj7fcWDi2XvKphAA |

| | j/H/atXb2fyN/VJ3tPQ0qsmv3ctDpMnazCwRksTZHzFhZdyi6mu8zlE+iK9SGr5L |

| | PTc+jSK02JnuAQcnZHMNrov6wPPAaoRFDQ7Nv9LUmzVJPnxXuoFxF1akkr0cdxpZ |

| | 4nfcCIZS0i43RLWSKuFFz81Oy4Med8U9JXq/NxYw/a5D7PZ7flSSUDYrQwgOQtut |

| | lCebOPb/iu6A87HJ+bhtQb7G7G68HkFmlATnjA0AmeM/+PQ8AR6YH5mbgQeWmPTq |

| | XJdfBs5+AFyUw1zJPa5GPBa+96tqCjOrkxrwR/FCe1L2Q+BfkBRDDg2FA6/pekG4 |

| | kzAB++JH3Uai6PSgmifUDMsA++4oRGf7ALqoXnXwu4SOQ2vlrsPjAnV77us5JvdI |

| | Wc346uzvcJAyFOmBuQqRKOOsgYpEj1Q5HKkDuZNLM8e89o0dTOwcm4e8BR00GN6+ |

| | OyC6D8U8T72kFv3WvW5HqiP5mmGZDBNWLaXFjLJBSUrFVw9OJWuisSbX6JoISE4Y |

| | RFhzS/REKLn7LDvVvByI3wZF6GLbfKkdzZHoK0Fc4GFiVloDOC7iGiHV+cw2Ivwx |

| | yhsdRciuH5yRnbNhekaNNFddcmq2K6QPLgbDIBX43eFmArRk/mLwyMyvhVQT1NuL |

| | NqudMTihZeO10A4evHqHDmiYIi0cRf9OKct0S7bSwJm5Ag0EWwNswwEQAMcuLBNf |

| | /iTsBnvrI7cD2S24pVGMowaPDWMD1PEfwdL7dHDA4hTnrJexXHxGTFLiKgwhTdCr |

| | ZnBUNmL1CjoN2nO02MlFPcDNsPAa03KSF/IIpx1v/Y7yYN3eJX1nthQ3rPJnguEe |

| | L7mgBYtGeKBBdTWGzfHYDYI8IaUP6Bhfc6Yj+a5NVh+NsObhX0IMoa/lQNLDlfav |

| | tqdDgi7tMuf/Qyz1VvgpYYzXDq9KdipWssCHEDnIggdlJGemQyQMGuAil1TOC+S8 |

| | 9D/IbOuo3Wa+YMIu7g6cX0jX8Lp0kBH6yNlmIXvvOzV8smOVwemTl8Lt/9hETJqx |

| | aXL9j3DCoYVA87MAGcBD3EMFjQKwVLIWe84B8i5G44yD2DCHBNL/Qeq09klI5T5M |

| | BAgYbNoKx130pf0jGD6dzdfDiMgclAuhz5VTkNh5RCu7rdVgHGQKm5f6sVXCuAfl |

| | /f3Wv66lyCIHbb+LAxnG07bPHLGgHtrS+xRp7d+y7ezaTSmzcOs8lb1C6D/tJXyV |

| | +64lgkTsLid3ljVsMMCRWdRyXYWMOPAt9krFIW6niYHokN5m5uB/l/Vad+PYJ8WA |

| | Agpord+A2vSLliogO1BiDX5lcZmlFPSDDAlr5373KGoBSoYIXq6xcqsvkg3F4RCW |

| | B5YEWgBiX9roXzZ7oMUUK7uhDixFMqAWmN+dABEBAAGJAiUEGAEIAA8FAlsDbMMC |

| | GwwFCRLMAwAACgkQ186EWruNpsEHSw//dXXtuO5V6M+OZ5ArMj1vFudU57PNT+35 |

| | 5prq6IIDCeRiTanpjIR3GuOGtK3D+4r6Nk1lCoG0CwFPUu7k51gsdkB9DRrRYKX5 |

| | fXkl8UC+e8dKo9bMS3jyY9nC7Mv1DPc4gx7VoZeXsxlqz60tEG3HWehLGt03z47C |

| | 5I9VVLkTvxt73VH9BHcZaScyPfn3kOlbBSW6U/6ZnRJQ6pc6xPxMsqo0OznYgU9k |

| | YpkS6xwjqT7MYCw4DiW5kSIqNBRMl3suLUUvsJH4OOjilIt4Su+GxftrokmayRYr |

| | XRP0k/Tnf7nrjPl7znbCFxEEVSezaQE2rxQCiKXkmvYzaPjJXZmPgz49oih24Tgn |

| | Llk70qRoRXt2MkZG3TH/t755ORYl5BUeyhnPSzOD/1BiFJze7N+r5mGtJsdjBSyO |

| | LEdjVzsLRhKvheDkrsbguiV8wjaHdfpdPUdYHnWs/HZ7e9HyGoGxaYPRzYosqTu5 |

| | pxgIs4c3Toy7nYQjINd/IhLCYL7UBT+ybNMzh15u63UYun37x4mbdkkx7TzZpXex |

| | cnP2bJijq/TJD8PRJNY9GFd5fnluk6xpaFH1YAtQbe/YpTHP0xn45Hi91tsv7S7F |

| | Tl5+BGflBcIQOF80tOHetUrtH3cjp/dtKCE5ZU5Vt9pxlvQeO+azOH1jXQ35vs2t |

| | 7VMKgjAEf/c= |

| | =nvDm |

| | -----END PGP PUBLIC KEY BLOCK----- |

| | EOF |

| | gpg --homedir /var/ossec/.gnupg --delete-key marcin |

| | gpg --homedir /var/ossec/.gnupg --import /var/tmp/gpg/marcin.pubkey2.asc |

| | popd |

| | </pre> |

| | # confirmed that the right key was there |

| | <pre> |

| | [root@hetzner2 gpg]# gpg --homedir /var/ossec/.gnupg --list-keys |

| | gpg: WARNING: unsafe ownership on homedir `/var/ossec/.gnupg' |

| | /var/ossec/.gnupg/pubring.gpg |

| | ----------------------------- |

| | pub 4096R/60E2D97B 2017-06-20 [expires: 2027-06-18] |

| | uid Michael Altfield <michael@opensourceecology.org> |

| | sub 4096R/9FAD6BEF 2017-06-20 [expires: 2027-06-18] |

| | |

| | pub 4096R/4E640D41 2017-09-30 [expires: 2018-10-01] |

| | uid Michael Altfield <michael@michaelaltfield.net> |

| | uid Michael Altfield <vt6t5up@mail.ru> |

| | sub 4096R/745DD5CF 2017-09-30 [expires: 2018-09-30] |

| | |

| | pub 4096R/BB8DA6C1 2018-05-22 [expires: 2028-05-19] |

| | uid Marcin Jakubowski <marcin@opensourceecology.org> |

| | sub 4096R/36939DE8 2018-05-22 [expires: 2028-05-19] |

| | </pre> |

| | # documented this on a new page named Ossec https://wiki.opensourceecology.org/wiki/Ossec |

| | # I began building the Prusa i3 mk2 extruder assembly |

| | ## I have never done this before, but I do have the freecad file https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted.fcstd |

| | ## I uploaded the 3 3d printables that I exported from the above freecad file. I had previously exported these stl files so I could import them into Cura & print them on the Jellybox. Now that I have 2x of each of the 3x pieces, I can begin the build with the hardware (springs, extruder, fans, bolts, nuts, washers, etc) that Marcin gave me (he ordered it from McMaster-Carr) |

| | ### idler https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted_idler.stl |

| | ### cover https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted_cover.stl |

| | ### body https://wiki.opensourceecology.org/wiki/File:Prusa_i3_mk2_extruder_adapted_body.stl |

| | ## there appears to be some piece (shaft?) (labeled "5x16SH") in the body of the shaft bearing that I don't have |

| | ## I may have installed the fan backwards; it's hard to tell which way this thing will blow, but the cad shows it should blow in, towards the heatsink |

| | ## I also appear to need 2x more 3d printed pieces: |

| | ### the "fan nozzle" for the print fan |

| | ### the print fan |

| | ### the interface |

| | ### the motor |

| | ### the proximity sensor |

| | ## I also had some issues inserting a nut into the following holes |

| | ### NUT_THIN_M012 into the back of the body, which receives the SCHS_M3_25 from the cover |

| | ## I extracted stl files for the fan nozzle and a small cylinder for the shaft of the bearing. These have been uploaded to the wiki as well https://wiki.opensourceecology.org/wiki/D3D_Extruder#CAM_.283D_Print_Files_of_Modified_Prisa_i3_MK2.29 |

| | ## The interface needed to be 3d printed too, but it totally lacked holes. They were sketched, but they didn't go through the "interface plate". I spent a few hours in freecad trying to sketch it on the face of the plate & push it through, but it only went partially through the interface plate (I made the length 999mm, reversed, & "full length"; nothing helped). Marcin took the file, copied the interface plate |

|

| |

|

| | =Tue May 29, 2018= |

| Line 1,118: |

Line 1,281: |

| Processing POST data (application/json) (0 bytes)...

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| {"janus":"trickle","candidate":{"candidate":"candidate:201398067 1 udp 2122260223 10.137.2.17 46853 typ host generation 0 ufrag MNDb network-id 1 network-cost 50","sdpMid":"data","sdpMLineIndex":0},"transaction":"JpDJKwdL9Rj4"}

| Forwarding request to the core (0x7fa30c014790)

| |

| Got a Janus API request from janus.transport.http (0x7fa30c014790)

| |

| [45723605327998] Trickle candidate (data): candidate:201398067 1 udp 2122260223 10.137.2.17 46853 typ host generation 0 ufrag MNDb network-id 1 network-cost 50

| |

| [45723605327998] Adding remote candidate component:1 stream:1 type:host 10.137.2.17:46853

| |

| [45723605327998] Candidate added to the list! (1 elements for 1/1)

| |

| [45723605327998] ICE already started for this component, setting candidates we have up to now

| |

| [45723605327998] ## Setting remote candidates: stream 1, component 1 (1 in the list)

| |

| [45723605327998] >> Remote Stream #1, Component #1

| |

| [45723605327998] Address: 10.137.2.17:46853

| |

| [45723605327998] Priority: 2122260223

| |

| [45723605327998] Foundation: 201398067

| |

| [45723605327998] Username: MNDb

| |

| [45723605327998] Password: 8F39sum8obXhdVgCLhNhUVLo

| |

| [45723605327998] Setting remote credentials...

| |

| [45723605327998] Component state changed for component 1 in stream 1: 2 (connecting)

| |

| [45723605327998] Discovered new remote candidate for component 1 in stream 1: foundation=1

| |

| [45723605327998] Stream #1, Component #1

| |

| [45723605327998] Address: 66.18.33.130:41785

| |

| [45723605327998] Priority: 1853824767

| |

| [45723605327998] Foundation: 1

| |

| [45723605327998] Remote candidates set!

| |

| Sending Janus API response to janus.transport.http (0x7fa30c014790)

| |

| Got a Janus API response to send (0x7fa30c014790)

| |

| New connection on REST API: ::ffff:66.18.33.130

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 79

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 4989268396723854

| |

| Handle: 45723605327998

| |

| Processing POST data (application/json) (79 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (79 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 4989268396723854

| |

| Handle: 45723605327998

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"trickle","candidate":{"completed":true},"transaction":"xVqDncVePyih"}

| |

| Forwarding request to the core (0x7fa30c014790)

| |

| Got a Janus API request from janus.transport.http (0x7fa30c014790)

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 260

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 4989268396723854

| |

| Handle: 45723605327998

| |

| Processing POST data (application/json) (260 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (260 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/4989268396723854/45723605327998...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 4989268396723854

| |

| Handle: 45723605327998

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"trickle","candidate":{"candidate":"candidate:2774440166 1 udp 1686052607 66.18.33.130 41785 typ srflx raddr 10.137.2.17 rport 46853 generation 0 ufrag MNDb network-id 1 network-cost 50","sdpMid":"data","sdpMLineIndex":0},"transaction":"RByDZe9bnARf"}

| |

| Forwarding request to the core (0x7fa31c003940)

| |

| Got a Janus API request from janus.transport.http (0x7fa31c003940)

| |

| No more remote candidates for handle 45723605327998!

| |

| Sending Janus API response to janus.transport.http (0x7fa30c014790)

| |

| Got a Janus API response to send (0x7fa30c014790)

| |

| [45723605327998] Trickle candidate (data): candidate:2774440166 1 udp 1686052607 66.18.33.130 41785 typ srflx raddr 10.137.2.17 rport 46853 generation 0 ufrag MNDb network-id 1 network-cost 50

| |

| [45723605327998] Adding remote candidate component:1 stream:1 type:srflx 10.137.2.17:46853 --> 66.18.33.130:41785

| |

| [45723605327998] Candidate added to the list! (2 elements for 1/1)

| |

| [45723605327998] Trickle candidate added!

| |

| Sending Janus API response to janus.transport.http (0x7fa31c003940)

| |

| Got a Janus API response to send (0x7fa31c003940)

| |

| [45723605327998] Looks like DTLS!

| |

| [45723605327998] Component state changed for component 1 in stream 1: 3 (connected)

| |

| [45723605327998] ICE send thread started...; 0x7fa2fc015190

| |

| [45723605327998] Looks like DTLS!

| |

| New connection on REST API: ::ffff:66.18.33.130

| |

| [45723605327998] New selected pair for component 1 in stream 1: 1 <-> 2774440166

| |

| [45723605327998] Component is ready enough, starting DTLS handshake...

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| janus_dtls_bio_filter_ctrl: 6

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| [45723605327998] Creating retransmission timer with ID 4

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/4989268396723854...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/4989268396723854...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 4989268396723854

| |

| Got a Janus API request from janus.transport.http (0x7fa30c014790)

| |

| Session 4989268396723854 found... returning up to 1 messages

| |

| Got a keep-alive on session 4989268396723854

| |

| Sending Janus API response to janus.transport.http (0x7fa30c014790)

| |

| Got a Janus API response to send (0x7fa30c014790)

| |

| New connection on REST API: ::ffff:66.18.33.130

| |

| [45723605327998] Looks like DTLS!

| |

| [45723605327998] Written 156 bytes on the read BIO...

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| Advertizing MTU: 1200

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_write: 0x7fa31c051e00, 1107

| |

| -- 1107

| |

| New list length: 1

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| [45723605327998] ... and read -1 of them from SSL...

| |

| [45723605327998] >> Going to send DTLS data: 1107 bytes

| |

| [45723605327998] >> >> Read 1107 bytes from the write_BIO...

| |

| [45723605327998] >> >> ... and sent 1107 of those bytes on the socket

| |

| [45723605327998] Initialization not finished yet...

| |

| [45723605327998] DTLSv1_get_timeout: 968

| |

| [45723605327998] DTLSv1_get_timeout: 918

| |

| [45723605327998] Looks like DTLS!

| |

| [45723605327998] Written 591 bytes on the read BIO...

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| janus_dtls_bio_filter_ctrl: 51

| |

| janus_dtls_bio_filter_ctrl: 53

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 52

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_ctrl: 49

| |

| janus_dtls_bio_filter_write: 0x7fa31c051e00, 570

| |

| -- 570

| |

| New list length: 1

| |

| janus_dtls_bio_filter_ctrl: 7

| |

| janus_dtls_bio_filter_ctrl: 50

| |

| [45723605327998] ... and read -1 of them from SSL...

| |

| [45723605327998] >> Going to send DTLS data: 570 bytes

| |

| [45723605327998] >> >> Read 570 bytes from the write_BIO...

| |

| [45723605327998] >> >> ... and sent 570 of those bytes on the socket

| |

| [45723605327998] DTLS established, yay!

| |

| [45723605327998] Computing sha-256 fingerprint of remote certificate...

| |

| [45723605327998] Remote fingerprint (sha-256) of the client is D5:D6:25:60:4D:24:9A:37:79:55:4C:B2:F4:99:B0:69:DE:A5:F4:F0:4C:72:CD:67:5C:0F:A9:17:BB:E1:FC:00

| |

| [45723605327998] Fingerprint is a match!

| |

| Segmentation fault (core dumped)

| |

| [root@ip-172-31-28-115 janus-gateway]#

| |

| </pre>

| |

| # I tried this again in firefox, and the text room fully loaded!

| |

| # I tried this in chromium, and it segfaulted again :(

| |

| # anyway, I tried this in 2x distinct firefox windows, and each window could read the other's text messages.

| |

| # I tested jangouts, and text works there now too!

| |

| # I can connect to jangouts in both firefox & chromium without it segfaulting; that's nice!

| |

| # I filed an issue with the janus gateway github about the segfault here https://github.com/meetecho/janus-gateway/issues/1233

| |

| # holy crap, I got a response in less than 5 minutes! They wanted a gdb stacktrace, which I provided

| |

| # It was also pointed out that using an Address Sanitizer would be helpful, per their documentation. I attempted to install this, but got an error https://janus.conf.meetecho.com/docs/debug

| |

| <pre>

| |

| yum --enablerepo=* install -y libasan

| |

| [root@ip-172-31-28-115 janus-gateway]# CFLAGS="-fsanitize=address -fno-omit-frame-pointer" LDFLAGS="-lasan" ./configure --prefix="/opt/janus" --enable-data-channels

| |

| checking for a BSD-compatible install... /bin/install -c

| |

| checking whether build environment is sane... yes

| |

| checking for a thread-safe mkdir -p... /bin/mkdir -p

| |

| checking for gawk... gawk

| |

| checking whether make sets $(MAKE)... yes

| |

| checking whether make supports nested variables... yes

| |

| checking whether make supports nested variables... (cached) yes

| |

| checking for style of include used by make... GNU

| |

| checking for gcc... gcc

| |

| checking whether the C compiler works... no

| |

| configure: error: in `/root/sandbox/janus-gateway':

| |

| configure: error: C compiler cannot create executables

| |

| See `config.log' for more details

| |

| [root@ip-172-31-28-115 janus-gateway]#

| |

| </pre>
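The "C compiler cannot create executables" error above just means configure's trivial test program failed to build with the flags it was given; config.log has the real compiler/linker message. A small probe like the one below can confirm whether a given compiler + flag combination works before re-running ./configure. It is a sketch only: `can_link` is a helper name I made up, and it simply runs whatever command it is handed.

```shell
# can_link COMPILER [FLAGS...]
# Return 0 if the given compiler and flags can build a trivial C program,
# non-zero otherwise. Hypothetical helper; nothing Janus-specific here.
can_link() {
  cl_dir="$(mktemp -d)" || return 1
  printf 'int main(void) { return 0; }\n' > "$cl_dir/probe.c"
  if "$@" "$cl_dir/probe.c" -o "$cl_dir/probe" >/dev/null 2>&1; then
    cl_rc=0
  else
    cl_rc=1
  fi
  rm -rf "$cl_dir"
  return "$cl_rc"
}
```

For example, `can_link gcc -fsanitize=address -fno-omit-frame-pointer -lasan || echo "ASan flags do not link here"` tests the same flags that were passed to configure above.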

| |

| # when I tried using 'libasan-static' it worked *shrug*

| |

| <pre>

| |

| yum --enablerepo=* install -y libasan-static

| |

| CFLAGS="-fsanitize=address -fno-omit-frame-pointer" LDFLAGS="-lasan" ./configure --prefix="/opt/janus" --enable-data-channels

| |

| make clean

| |

| make

| |

| make install

| |

| </pre>

| |

| # great news! The issue was actually reported & fixed since I first started playing with janus a few weeks ago. I did a git pull & recompiled, and the segfaults stopped. I found this out after a comment back-and-forth with a developer on my issue, within an hour of posting it. This is an amazingly active project! https://github.com/meetecho/janus-gateway/issues/1223

| |

| # Unfortunately, though the segfault is fixed, the text room still won't load in Chromium.

| |

| # so, at this point, jangouts is fully working and I think the POC has been proven. Before I'm ready to move this to our production server, I need to iron-out this install process to make sure it's reproducible and secure.

| |

| ## reproducibility is just a matter of terminating the ec2 instance, following my documented commands, and ending up with the same result

| |

| ## security is a bit more work. We've gone to enormous lengths to ensure that most of our server's daemons are not internet-facing unless they must be, and that what is (nginx) and the background daemons it services (httpd using php) are as locked-down as possible. Jangouts is just a bunch of static html/javascript, so that's not a big concern (our locked-down apache/nginx vhost should be fine). But Janus has a public-facing REST API. And public-facing ICE for STUN/TURN. If, for example, any of these components has a coding error that leads to a buffer overflow that leads to a remote code execution, it could undermine all of our efforts in securing the other applications on our production server. Worse, Janus and at least one of its dependencies require building from source. These builds are likely to become stale and not be updated (unlike packages which are installed from the repos--which are set up to automatically download critical security updates).

| |

| # I need to spend some time investigating Janus and ICE to see how to harden it as much as possible

| |

| # first, I went back to the basics, Google worked on WebRTC, and here's one of their presentations back in 2013 https://www.youtube.com/watch?v=p2HzZkd2A40&t=21m12s

| |

| # I learned that ICE is a NAT-traversal framework for connecting peers that utilizes both STUN _and_ TURN. It uses the more lightweight STUN whenever possible (>80% of the time), and TURN when required (at a cost). Also, every TURN server supports STUN. TURN is just STUN with relay added-in. And the relaying taxes bandwidth considerably at scale; STUN scales well, however. https://www.html5rocks.com/en/tutorials/webrtc/infrastructure/
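To get a feel for how lightweight STUN is, here is a minimal sketch of the STUN Binding exchange as described in RFC 5389. It only builds a request and parses a response in memory; it does no networking, and it is not how Janus or the browser implement ICE internally. The function names are my own, chosen for illustration.

```python
import os
import socket
import struct

MAGIC_COOKIE = 0x2112A442      # fixed value defined by RFC 5389
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(transaction_id=None):
    """Return (packet, transaction_id) for a Binding Request with no attributes."""
    if transaction_id is None:
        transaction_id = os.urandom(12)
    # header: message type, attribute length (0), magic cookie, then the 12-byte id
    header = struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE)
    return header + transaction_id, transaction_id


def parse_binding_response(packet, transaction_id):
    """Return (ip, port) from the XOR-MAPPED-ADDRESS attribute (IPv4 only)."""
    msg_type, length, cookie = struct.unpack("!HHI", packet[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding success response")
    if packet[8:20] != transaction_id:
        raise ValueError("transaction id mismatch")
    offset, end = 20, 20 + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", packet[offset:offset + 4])
        value = packet[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and len(value) >= 8 and value[1] == 0x01:
            xport, xaddr = struct.unpack("!HI", value[2:8])
            # port and address are XORed with the magic cookie on the wire
            port = xport ^ (MAGIC_COOKIE >> 16)
            ip = socket.inet_ntoa(struct.pack("!I", xaddr ^ MAGIC_COOKIE))
            return ip, port
        # attributes are padded to a multiple of 4 bytes
        offset += 4 + attr_len + (-attr_len % 4)
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute found")
```

The whole request is a single 20-byte UDP datagram and the server only has to echo back the address it saw, which is why STUN scales so much better than relaying every media packet through TURN.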

| |

| # I discovered a couple interesting techs that use webrtc

| |

| ## PeerCDN was supposed to be a p2p CDN, but the site appears unresponsive. Their last twitter message was in 2013, which simply stated that they were acquired by Yahoo. And then, silence.. https://twitter.com/peercdn

| |

| ## togetherJS is like an ephemeral etherpad for using RTC for collaboration https://togetherjs.com/docs/#technology-overview

| |

| # this is a great explanation of signaling used for WebRTC https://www.html5rocks.com/en/tutorials/webrtc/infrastructure/

| |

| ## The more I read, the more I think that our bottleneck on Jitsi Meet is because it's an SFU instead of a dedicated MCU. The article above mentions a few open source MCUs: Licode and OpenTok's Mantis

| |

| | |

| =Fri May 11, 2018=

| |

| # updated our backup script (/root/backups/backup.sh) on hetzner2 to encrypt the backups before shipping them off to dreamhost

| |

| # also hardened the permissions on the backup log file, as it may leak passwords

| |

| <pre>

| |

| chown -R root:root /var/log/backups

| |

| chmod -R 0700 /var/log/backups

| |

| find /var/log/backups -type f -exec chmod 0600 {} \;

| |

| </pre>
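A quick way to confirm the hardening took effect is to walk the directory and flag anything that still grants group or other access. This is only a sketch: the helper name is made up for illustration, it checks mode bits only (not the root:root ownership), and it skips symlinks.

```python
import os
import stat


def find_loose_permissions(root):
    """Return paths under root (root included) that group or other can access."""
    loose = []
    for dirpath, _dirnames, filenames in os.walk(root):
        # check the directory itself, then every file directly inside it
        for path in [dirpath] + [os.path.join(dirpath, f) for f in filenames]:
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue
            # any group or other permission bit means the path is too open
            if mode & (stat.S_IRWXG | stat.S_IRWXO):
                loose.append(path)
    return sorted(loose)
```

Running `find_loose_permissions("/var/log/backups")` as root after the commands above should return an empty list if every directory is 0700 and every file is 0600.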

| |

| # continuing with the jangouts poc, I began researching 'sdp' as that was the error that the server (shown below) & client spat out when attempting to load the Janus demo = Text Room https://jangouts.opensourceecology.org/textroomtest.html

| |

| <pre>

| |

| Creating new session: 2994617815140817; 0x7f0884001580

| |

| Creating new handle in session 2994617815140817: 4577123645728553; 0x7f0884001580 0x7f0884079a90

| |

| [4577123645728553] Creating ICE agent (ICE Full mode, controlling)

| |

| [WARN] [4577123645728553] Skipping disabled/unsupported media line...

| |

| [WARN] [4577123645728553] Skipping disabled/unsupported media line...

| |

| [ERR] [janus.c:janus_process_incoming_request:1193] Error processing SDP

| |

| </pre>
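When the log gets long, it helps to pull out only the warning and error lines. The sketch below assumes the `[WARN]` and `[ERR]` prefixes seen in the output above; the function name is hypothetical and other log levels are ignored.

```python
import re

# matches e.g. "[ERR] [janus.c:janus_process_incoming_request:1193] Error processing SDP"
LEVEL_RE = re.compile(r"^\[(WARN|ERR)\]\s*(.*)$")


def extract_problems(log_text):
    """Return a list of (level, message) tuples for WARN and ERR lines, in order."""
    problems = []
    for line in log_text.splitlines():
        match = LEVEL_RE.match(line.strip())
        if match:
            problems.append((match.group(1), match.group(2)))
    return problems
```

Feeding it the six log lines above would yield the two "Skipping disabled/unsupported media line..." warnings followed by the "Error processing SDP" error, which is the sequence worth searching for.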

| |

| # I also got a dump of the handle from the admin API when sitting in the text room

| |

| <pre>

| |

| {

| |

| "session_id": 390036153431556,

| |

| "session_last_activity": 1846998549747,

| |

| "session_transport": "janus.transport.http",

| |

| "handle_id": 778621082141321,

| |

| "opaque_id": "textroomtest-EmFpGFH60x5B",

| |

| "created": 1846966416891,

| |

| "send_thread_created": false,

| |

| "current_time": 1847004114581,

| |

| "plugin": "janus.plugin.textroom",

| |

| "plugin_specific": {

| |

| "destroyed": 0

| |

| },

| |

| "flags": {

| |

| "got-offer": true,

| |

| "got-answer": true,

| |

| "processing-offer": false,

| |

| "starting": false,

| |

| "ice-restart": false,

| |

| "ready": false,

| |

| "stopped": false,

| |

| "alert": false,

| |

| "trickle": false,

| |

| "all-trickles": false,

| |

| "resend-trickles": false,

| |

| "trickle-synced": false,

| |

| "data-channels": false,

| |

| "has-audio": false,

| |

| "has-video": false,

| |

| "rfc4588-rtx": false,

| |

| "cleaning": false

| |

| },

| |

| "agent-created": 1846967782771,

| |

| "ice-mode": "full",

| |

| "ice-role": "controlling",

| |

| "sdps": {

| |

| "local": "v=0\r\no=- 1526079611972262 1 IN IP4 34.210.153.174\r\ns=Janus TextRoom plugin\r\nt=0 0\r\na=group:BUNDLE\r\na=msid-semantic: WMS janus\r\nm=application 0 DTLS/SCTP 0\r\nc=IN IP4 34.210.153.174\r\na=inactive\r\n"

| |

| },

| |

| "queued-packets": 0,

| |

| "streams": [

| |

| {

| |

| "id": 1,

| |

| "ready": -1,

| |

| "ssrc": {},

| |

| "direction": {

| |

| "audio-send": false,

| |

| "audio-recv": false,

| |

| "video-send": false,

| |

| "video-recv": false

| |

| },

| |

| "components": [

| |

| {

| |

| "id": 1,

| |

| "state": "disconnected",

| |

| "dtls": {

| |

| "fingerprint": "D2:B9:31:8F:DF:24:D8:0E:ED:D2:EF:25:9E:AF:6F:B8:34:AE:53:9C:E6:F3:8F:F2:64:15:FA:E8:7F:53:2D:38",

| |

| "dtls-role": "actpass",

| |

| "dtls-state": "created",

| |

| "retransmissions": 0,

| |

| "valid": false,

| |

| "ready": false

| |

| },

| |

| "in_stats": {

| |

| "data_packets": 0,

| |

| "data_bytes": 0

| |

| },

| |

| "out_stats": {

| |

| "data_packets": 0,

| |

| "data_bytes": 0

| |

| }

| |

| }

| |

| ]

| |

| }

| |

| ]

| |

| }

| |

| </pre>
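One thing that stands out in this dump is the local SDP: its only media line is `m=application 0 DTLS/SCTP 0` followed by `a=inactive`. In SDP offer/answer (RFC 3264) a port of 0 marks a media stream as rejected or disabled, which may be what the "Skipping disabled/unsupported media line" warning refers to. Below is a minimal sketch for spotting such lines; the helper names are my own and it handles only the `m=` and `a=` lines.

```python
def media_sections(sdp):
    """Split an SDP blob into one dict per m= line, with its a= attributes."""
    sections = []
    for line in sdp.replace("\r\n", "\n").split("\n"):
        if line.startswith("m="):
            media, port, proto, *formats = line[2:].split()
            sections.append({
                "media": media,
                # the port field may be written as "port/count"; keep the port
                "port": int(port.split("/")[0]),
                "proto": proto,
                "formats": formats,
                "attributes": [],
            })
        elif line.startswith("a=") and sections:
            # attributes after an m= line belong to that media section
            sections[-1]["attributes"].append(line[2:])
    return sections


def disabled_media(sdp):
    """Return the media types whose m= line has port 0 or carries a=inactive."""
    return [s["media"] for s in media_sections(sdp)
            if s["port"] == 0 or "inactive" in s["attributes"]]
```

Passing the `sdps.local` string from the dump to `disabled_media()` returns `["application"]`, i.e. the data channel that the text room depends on is being offered as disabled.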

| |

| # I changed the debug level in janus.cfg from '4' (the default) to '7' (the maximum). That produced a ton more output

| |

| <pre>

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 47

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Processing POST data (application/json) (47 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (47 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"create","transaction":"tT3AivyGrmwl"}

| |

| Forwarding request to the core (0x7fd43c007100)

| |

| Got a Janus API request from janus.transport.http (0x7fd43c007100)

| |

| Creating new session: 2542284235228595; 0x7fd458001ab0

| |

| Session created (2542284235228595), create a queue for the long poll

| |

| Sending Janus API response to janus.transport.http (0x7fd43c007100)

| |

| Got a Janus API response to send (0x7fd43c007100)

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP OPTIONS request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Access-Control-Request-Method: POST

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Access-Control-Request-Headers: content-type

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept: */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| New connection on REST API: ::ffff:76.97.223.185

| |

| New connection on REST API: ::ffff:76.97.223.185

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 120

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Processing POST data (application/json) (120 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (120 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"attach","plugin":"janus.plugin.textroom","opaque_id":"textroomtest-ZfIPMV8fHJjG","transaction":"RlCVbRQQW1DH"}

| |

| Forwarding request to the core (0x7fd458003890)

| |

| Got a Janus API request from janus.transport.http (0x7fd458003890)

| |

| Creating new handle in session 2542284235228595: 6930537557732495; 0x7fd458001ab0 0x7fd458003df0

| |

| Sending Janus API response to janus.transport.http (0x7fd458003890)

| |

| Got a Janus API response to send (0x7fd458003890)

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP OPTIONS request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Access-Control-Request-Method: POST

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Access-Control-Request-Headers: content-type

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept: */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| New connection on REST API: ::ffff:76.97.223.185

| |

| New connection on REST API: ::ffff:76.97.223.185

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 75

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Handle: 6930537557732495

| |

| Processing POST data (application/json) (75 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (75 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Handle: 6930537557732495

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"message","body":{"request":"setup"},"transaction":"DQL62lpsIPOW"}

| |

| Forwarding request to the core (0x7fd45c002d70)

| |

| Got a Janus API request from janus.transport.http (0x7fd45c002d70)

| |

| Transport task pool, serving request

| |

| [6930537557732495] There's a message for JANUS TextRoom plugin

| |

| Creating plugin result...

| |

| Sending Janus API response to janus.transport.http (0x7fd45c002d70)

| |

| Got a Janus API response to send (0x7fd45c002d70)

| |

| Destroying plugin result...

| |

| [6930537557732495] Audio has NOT been negotiated

| |

| [6930537557732495] Video has NOT been negotiated

| |

| [6930537557732495] SCTP/DataChannels have NOT been negotiated

| |

| [6930537557732495] Setting ICE locally: got ANSWER (0 audios, 0 videos)

| |

| [6930537557732495] Creating ICE agent (ICE Full mode, controlling)

| |

| [6930537557732495] Adding 172.31.28.115 to the addresses to gather candidates for

| |

| [6930537557732495] Gathering done for stream 1

| |

| janus_dtls_bio_filter_ctrl: 6

| |

| -------------------------------------------

| |

| >> Anonymized

| |

| -------------------------------------------

| |

| [WARN] [6930537557732495] Skipping disabled/unsupported media line...

| |

| -------------------------------------------

| |

| >> Merged (193 bytes)

| |

| -------------------------------------------

| |

| v=0

| |

| o=- 1526081202248668 1 IN IP4 34.210.153.174

| |

| s=Janus TextRoom plugin

| |

| t=0 0

| |

| a=group:BUNDLE

| |

| a=msid-semantic: WMS janus

| |

| m=application 0 DTLS/SCTP 0

| |

| c=IN IP4 34.210.153.174

| |

| a=inactive

| |

| | |

| [6930537557732495] Sending event to transport...

| |

| Sending event to janus.transport.http (0x7fd43c007100)

| |

| Got a Janus API event to send (0x7fd43c007100)

| |

| >> Pushing event: 0 (took 368 us)

| |

| [6930537557732495] ICE thread started; 0x7fd458003df0

| |

| [ice.c:janus_ice_thread:2574] [6930537557732495] Looping (ICE)...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "event",

| |

| "session_id": 2542284235228595,

| |

| "transaction": "DQL62lpsIPOW",

| |

| "sender": 6930537557732495,

| |

| "plugindata": {

| |

| "plugin": "janus.plugin.textroom",

| |

| "data": {

| |

| "textroom": "event",

| |

| "result": "ok"

| |

| }

| |

| },

| |

| "jsep": {

| |

| "type": "offer",

| |

| "sdp": "v=0\r\no=- 1526081202248668 1 IN IP4 34.210.153.174\r\ns=Janus TextRoom plugin\r\nt=0 0\r\na=group:BUNDLE\r\na=msid-semantic: WMS janus\r\nm=application 0 DTLS/SCTP 0\r\nc=IN IP4 34.210.153.174\r\na=inactive\r\n"

| |

| }

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] Content-Length: 310

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] content-type: application/json

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Handle: 6930537557732495

| |

| Processing POST data (application/json) (310 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1248] -- Data we have now (310 bytes)

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP POST request on /janus/2542284235228595/6930537557732495...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Handle: 6930537557732495

| |

| Processing POST data (application/json) (0 bytes)...

| |

| [transports/janus_http.c:janus_http_handler:1253] Done getting payload, we can answer

| |

| {"janus":"message","body":{"request":"ack"},"transaction":"rR1mPGNHkKYW","jsep":{"type":"answer","sdp":"v=0\r\no=- 5794779635134951790 2 IN IP4 127.0.0.1\r\ns=-\r\nt=0 0\r\na=msid-semantic: WMS\r\nm=application 0 DTLS/SCTP 5000\r\nc=IN IP4 0.0.0.0\r\na=mid:data\r\na=sctpmap:5000 webrtc-datachannel 1024\r\n"}}

| |

| Forwarding request to the core (0x7fd45c002d70)

| |

| Got a Janus API request from janus.transport.http (0x7fd45c002d70)

| |

| Transport task pool, serving request

| |

| [6930537557732495] There's a message for JANUS TextRoom plugin

| |

| [6930537557732495] Remote SDP:

| |

| v=0

| |

| o=- 5794779635134951790 2 IN IP4 127.0.0.1

| |

| s=-

| |

| t=0 0

| |

| a=msid-semantic: WMS

| |

| m=application 0 DTLS/SCTP 5000

| |

| c=IN IP4 0.0.0.0

| |

| a=mid:data

| |

| a=sctpmap:5000 webrtc-datachannel 1024

| |

| [6930537557732495] Audio has NOT been negotiated, Video has NOT been negotiated, SCTP/DataChannels have NOT been negotiated

| |

| [WARN] [6930537557732495] Skipping disabled/unsupported media line...

| |

| [ERR] [janus.c:janus_process_incoming_request:1193] Error processing SDP

| |

| [rR1mPGNHkKYW] Returning Janus API error 465 (Error processing SDP)

| |

| Got a Janus API response to send (0x7fd45c002d70)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)

| |

| Got a Janus API response to send (0x7fd43c001c10)

| |

| Long poll time out for session 2542284235228595...

| |

| We have a message to serve...

| |

| {

| |

| "janus": "keepalive"

| |

| }

| |

| [transports/janus_http.c:janus_http_handler:1137] Got a HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1138] ... Just parsing headers for now...

| |

| [transports/janus_http.c:janus_http_headers:1690] Host: jangouts.opensourceecology.org:8089

| |

| [transports/janus_http.c:janus_http_headers:1690] Connection: keep-alive

| |

| [transports/janus_http.c:janus_http_headers:1690] accept: application/json, text/plain, */*

| |

| [transports/janus_http.c:janus_http_headers:1690] Origin: https://jangouts.opensourceecology.org

| |

| [transports/janus_http.c:janus_http_headers:1690] User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36

| |

| [transports/janus_http.c:janus_http_headers:1690] Referer: https://jangouts.opensourceecology.org/textroomtest.html

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Encoding: gzip, deflate, sdch, br

| |

| [transports/janus_http.c:janus_http_headers:1690] Accept-Language: en-US,en;q=0.8

| |

| [transports/janus_http.c:janus_http_handler:1170] Processing HTTP GET request on /janus/2542284235228595...

| |

| [transports/janus_http.c:janus_http_handler:1223] ... parsing request...

| |

| Session: 2542284235228595

| |

| Got a Janus API request from janus.transport.http (0x7fd43c001c10)

| |

| Session 2542284235228595 found... returning up to 1 messages

| |

| [transports/janus_http.c:janus_http_notifier:1723] ... handling long poll...

| |

| Got a keep-alive on session 2542284235228595

| |

| Sending Janus API response to janus.transport.http (0x7fd43c001c10)