Hetzner3

This article is about the migration from "Hetzner2" to "Hetzner3".

For more general information about the OSE Server, you probably want to see OSE Server.

Initial Provisioning

Hint: For a verbose log of the project to provision the Hetzner3 server, see Maltfield_Log/2024_Q3, Maltfield_Log/2024_Q4, and Maltfield_Log/2025_Q1

OS Install

We used Hetzner's installimage tool to install Debian 12 on hetzner3.

We kept all the defaults, except the hostname.

The two NVMe disks were set up in a software RAID1 with a 32G swap, 1G '/boot', and the rest for '/'.
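A layout like this can be written in installimage's config format roughly as follows. The sketch below is a hypothetical helper that prints such a config. The drive names (`/dev/nvme0n1`, `/dev/nvme1n1`), the filesystem types, and the `BOOTLOADER` line are assumptions. The `IMAGE` line is left out because the exact Debian 12 image filename isn't recorded here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an installimage config for two NVMe disks in
# software RAID1 with a 32G swap, a 1G /boot, and the rest for /.
# Assumed (not from the original install): drive names, filesystem types,
# and BOOTLOADER. The IMAGE line is intentionally omitted.
installimage_config() {
  local hostname="$1"
  cat <<EOF
DRIVE1 /dev/nvme0n1
DRIVE2 /dev/nvme1n1
SWRAID 1
SWRAIDLEVEL 1
BOOTLOADER grub
HOSTNAME ${hostname}
PART swap swap 32G
PART /boot ext3 1024M
PART / ext4 all
EOF
}
```

For example, `installimage_config hetzner3` prints a config that can be compared line-by-line against what installimage's editor shows before confirming the install.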

Initial Hardening

After the OS's first boot, I (Michael Altfield) ran a quick set of commands to create a user for me, do basic ssh hardening, and set up a basic firewall to block everything except ssh:

adduser maltfield --disabled-password --gecos ''
groupadd sshaccess
gpasswd -a maltfield sshaccess
mkdir /home/maltfield/.ssh/
echo "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDGNYjR7UKiJSAG/AbP+vlCBqNfQZ2yuSXfsEDuM7cEU8PQNJyuJnS7m0VcA48JRnpUpPYYCCB0fqtIEhpP+szpMg2LByfTtbU0vDBjzQD9mEfwZ0mzJsfzh1Nxe86l/d6h6FhxAqK+eG7ljYBElDhF4l2lgcMAl9TiSba0pcqqYBRsvJgQoAjlZOIeVEvM1lyfWfrmDaFK37jdUCBWq8QeJ98qpNDX4A76f9T5Y3q5EuSFkY0fcU+zwFxM71bGGlgmo5YsMMdSsW+89fSG0652/U4sjf4NTHCpuD0UaSPB876NJ7QzeDWtOgyBC4nhPpS8pgjsnl48QZuVm6FNDqbXr9bVk5BdntpBgps+gXdSL2j0/yRRayLXzps1LCdasMCBxCzK+lJYWGalw5dNaIDHBsEZiK55iwPp0W3lU9vXFO4oKNJGFgbhNmn+KAaW82NBwlTHo/tOlj2/VQD9uaK5YLhQqAJzIq0JuWZWFLUC2FJIIG0pJBIonNabANcN+vq+YJqjd+JXNZyTZ0mzuj3OAB/Z5zS6lT9azPfnEjpcOngFs46P7S/1hRIrSWCvZ8kfECpa8W+cTMus4rpCd40d1tVKzJA/n0MGJjEs2q4cK6lC08pXxq9zAyt7PMl94PHse2uzDFhrhh7d0ManxNZE+I5/IPWOnG1PJsDlOe4Yqw== maltfield@ose" > /home/maltfield/.ssh/authorized_keys
chown -R maltfield:maltfield /home/maltfield/.ssh
chmod -R 0600 /home/maltfield/.ssh
chmod 0700 /home/maltfield/.ssh

# without this, apt-get may get stuck
export DEBIAN_FRONTEND=noninteractive
apt-get update
apt-get -y install iptables iptables-persistent
apt-get -y purge nftables

update-alternatives --set iptables /usr/sbin/iptables-legacy
update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy
update-alternatives --set arptables /usr/sbin/arptables-legacy
update-alternatives --set ebtables /usr/sbin/ebtables-legacy

iptables -A INPUT -i lo -j ACCEPT
iptables -A INPUT -s 127.0.0.1/32 -d 127.0.0.1/32 -j DROP
iptables -A INPUT -p icmp -j ACCEPT
iptables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
iptables -A INPUT -p tcp -m state --state NEW -m tcp --dport 32415 -j ACCEPT
iptables -A INPUT -j DROP
iptables -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
iptables -A OUTPUT -s 127.0.0.1/32 -d 127.0.0.1/32 -j ACCEPT
iptables -A OUTPUT -m owner --uid-owner 0 -j ACCEPT
iptables -A OUTPUT -m owner --uid-owner 42 -j ACCEPT
iptables -A OUTPUT -m owner --uid-owner 1000 -j ACCEPT
iptables -A OUTPUT -m limit --limit 5/min -j LOG --log-prefix "iptables denied: " --log-level 7
iptables -A OUTPUT -j DROP

ip6tables -A INPUT -i lo -j ACCEPT
ip6tables -A INPUT -s ::1/128 -d ::1/128 -j DROP
ip6tables -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
ip6tables -A INPUT -j DROP
ip6tables -A OUTPUT -s ::1/128 -d ::1/128 -j ACCEPT
ip6tables -A OUTPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
ip6tables -A OUTPUT -m owner --uid-owner 0 -j ACCEPT
ip6tables -A OUTPUT -m owner --uid-owner 42 -j ACCEPT
ip6tables -A OUTPUT -m owner --uid-owner 1000 -j ACCEPT
ip6tables -A OUTPUT -j DROP

iptables-save > /etc/iptables/rules.v4
ip6tables-save > /etc/iptables/rules.v6

cp /etc/ssh/sshd_config /etc/ssh/sshd_config.orig.`date "+%Y%m%d_%H%M%S"`
grep 'Port 32415' /etc/ssh/sshd_config || echo 'Port 32415' >> /etc/ssh/sshd_config
grep 'AllowGroups sshaccess' /etc/ssh/sshd_config || echo 'AllowGroups sshaccess' >> /etc/ssh/sshd_config
grep 'PermitRootLogin no' /etc/ssh/sshd_config || echo 'PermitRootLogin no' >> /etc/ssh/sshd_config
grep 'PasswordAuthentication no' /etc/ssh/sshd_config || echo 'PasswordAuthentication no' >> /etc/ssh/sshd_config
systemctl restart sshd.service

apt-get -y upgrade
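The `grep ... || echo ... >>` lines above make the sshd edits idempotent. A slightly stricter variant is sketched below as a hypothetical `ensure_line` function. It uses `grep -qxF` so that only an exact, whole-line match counts. With a plain `grep`, a commented-out `#PermitRootLogin no` would otherwise count as a match. One caveat is worth checking: sshd uses the first value it finds for each keyword, so an appended line does not override an earlier uncommented one. `sshd -T` prints the effective settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper generalizing the "grep ... || echo ... >>" pattern.
# grep flags: -q quiet, -x whole-line match, -F fixed string (no regex).
# Appends LINE to FILE only if that exact line isn't already present.
ensure_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example, `ensure_line /etc/ssh/sshd_config 'PasswordAuthentication no'` could be followed by `sshd -T | grep -i passwordauthentication` to confirm the value sshd will actually use.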

After all the packages updated, I gave my new user sudo permission:

root@mail ~ # cp /etc/sudoers /etc/sudoers.20240731.orig
root@mail ~ #
root@mail ~ # visudo
root@mail ~ #
root@mail ~ # diff /etc/sudoers.20240731.orig /etc/sudoers
47a48
> maltfield ALL=(ALL:ALL) NOPASSWD:ALL
root@mail ~ #

Ansible

After basic, manual hardening was done, we used Ansible to further provision and configure Hetzner3.

The Ansible playbook that we use is called provision.yml. It contains some public and many custom ansible roles. All of this is available on our GitHub:

* https://github.com/OpenSourceEcology/ansible

First, we used ansible to push-out only the highest-priority roles for hardening the server: dev-sec.ssh-hardening, mikegleasonjr.firewall, maltfield.wazuh, maltfield.unattended-upgrades

* https://wiki.opensourceecology.org/wiki/Maltfield_Log/2024_Q3#Sat_Sep_14.2C_2024

The ssh role didn't create new sshd keys with our hardened specifications, so I did this manually:

tar -czvf /etc/ssh.$(date "+%Y%m%d_%H%M%S").tar.gz /etc/ssh/*
cd /etc/ssh/
# enter no passphrase for each command individually (-N can automate this, but only on some distros [centos but not debian])
ssh-keygen -f /etc/ssh/ssh_host_rsa_key -t rsa -b 4096 -o -a 100
ssh-keygen -f /etc/ssh/ssh_host_ecdsa_key -t ecdsa -b 521 -o -a 100
ssh-keygen -f /etc/ssh/ssh_host_ed25519_key -t ed25519 -a 100

Unfortunately, wazuh couldn't be fully set up because email wasn't set up yet. So the next step was to use ansible to install postfix, stubby, and unbound, and to update the firewall, with the roles: mikegleasonjr.firewall, maltfield.dns, maltfield.postfix, maltfield.wazuh

At this point, I also updated the hostname, updated the DNS SPF records in Cloudflare, and set the reverse DNS (rDNS) in Hetzner.

* https://wiki.opensourceecology.org/wiki/Maltfield_Log/2024_Q3#Mon_Sep_16.2C_2024

To finish setting-up wazuh, I manually created /var/sent_encrypted_alarm.settings and /var/ossec/.gnupg/

After wazuh email alerts were working, I used ansible to set up backups on Hetzner3 with the role: maltfield.backups.

After ansible installed most of the files, I manually copied /root/backups/backups.settings over from the old server and added both the old and a new keyfile, located at /root/backups/ose-backups-cron.key and /root/backups/ose-backups-cron.2.key. Both keys were pregenerated and stored in our shared OSE KeePass (I also made sure these keys were stored on Marcin's VeraCrypt USB drive when I visited FeF).

I also created a new set of Backblaze B2 API keys and configured rclone to use them.

Before continuing, I made sure that the backup script was working, and I did a full restore test by downloading a backup file from the Backblaze B2 WUI, decrypting it, extracting it, and doing a spot-check to make sure I could actually read one file from every archive as-expected.
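The spot-check part of the restore test can be partly scripted. The sketch below is a hypothetical helper and is not part of the OSE backup scripts. It assumes the backup has already been decrypted and that its inner archives are `*.tar.gz` files in one directory. For each archive, it extracts the first regular file to stdout and reports `OK` or `FAIL`. This catches archives that can't be opened or decompressed at all; it does not verify every member.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not part of the OSE backup scripts.
# Assumes the backup was already decrypted and its inner archives are
# *.tar.gz files in DIR. For each one, extract the first regular file to
# stdout (discarded) and report OK or FAIL. Returns 1 if any archive fails.
spot_check_archives() {
  local dir="$1" archive member rc=0
  for archive in "$dir"/*.tar.gz; do
    [ -e "$archive" ] || continue   # the glob matched nothing
    # first listed entry that isn't a directory (directory names end in "/")
    member="$(tar -tzf "$archive" 2>/dev/null | grep -v '/$' | head -n 1)" || true
    if [ -n "$member" ] && tar -xzOf "$archive" "$member" > /dev/null 2>&1; then
      echo "OK   $archive ($member)"
    else
      echo "FAIL $archive"
      rc=1
    fi
  done
  return "$rc"
}
```

Reading an actual member, rather than only listing the archive, forces gzip to decompress real data. That is closer to "I could actually read one file from every archive".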

* https://wiki.opensourceecology.org/wiki/Maltfield_Log/2024_Q3#Sun_Sep_22.2C_2024

After I confirmed that backups were fully working, I moved-on to the web server stack.

First I used ansible to push the 'maltfield.certbot' role. And then I force-renewed the certs on hetzner2 and securely copied the entire contents of /etc/letsencrypt/ from hetzner2 to hetzner3.

Then I used ansible to push the rest of the web stack roles: maltfield.nginx, maltfield.varnish, maltfield.php, maltfield.mariadb, maltfield.apache, maltfield.munin, maltfield.awstats, maltfield.cron, and maltfield.logrotate.

Once those roles were able to push without issue, I uncommented all the roles and made sure the ansible playbook could do a complete provisioning of all our roles without any errors.

* https://wiki.opensourceecology.org/wiki/Maltfield_Log/2024_Q3#Wed_Sep_25.2C_2024

Restore State (snapshot & test)

Next, I restored the server state with just a snapshot of the hetzner2 server's state. I downloaded the latest hetzner2 backup onto hetzner3.

I manually hardened mysql on hetzner3

mysql_secure_installation

And then I restored all the mysql DBs from the hetzner2 snapshot.

To get munin to be able to collect data from mysql, I created the munin user (note that you should *not* GRANT it access to anything):

source /root/backups/backup.settings

echo "CREATE USER munin@localhost identified by 'CHANGEME';" | mysql -u${mysqlUser} -p${mysqlPass}

echo "flush privileges;" | mysql -u${mysqlUser} -p${mysqlPass}

And I created the munin config file (including setting the mysql user's password as set above). Note that this file is *not* in ansible because it contains a password. Future migrations will want to create this file by copying it from the old server, or from backups if needed.

vim /etc/munin/plugin-conf.d/zzz-myconf
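Since zzz-myconf isn't in ansible, here is a hedged sketch of what it might contain. It is wrapped in a hypothetical helper that writes the file with owner-only permissions. As far as we understand the plugin's documentation, `env.mysqlconnection`, `env.mysqluser`, and `env.mysqlpassword` are the settings munin's `mysql_` plugin reads. The `[mysql_*]` section name and the socket-based DSN are assumptions to check against the real file on the old server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a munin plugin config for the mysql_ plugins.
# PASSWORD is the one set with CREATE USER munin@localhost above.
# Assumed: the [mysql_*] section name and the DSN without a host.
write_munin_mysql_conf() {
  local path="$1" password="$2"
  # umask 077 in a subshell so a newly created file is owner-only
  (
    umask 077
    cat > "$path" <<EOF
[mysql_*]
# no host in the DSN, so DBD::mysql should use the local socket (assumption)
env.mysqlconnection DBI:mysql:mysql
env.mysqluser munin
env.mysqlpassword ${password}
EOF
  )
  # umask doesn't affect a file that already existed, so tighten it too
  chmod 0600 "$path"
}
```

Usage would be `write_munin_mysql_conf /etc/munin/plugin-conf.d/zzz-myconf 'CHANGEME'`, followed by restarting munin-node.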

I created '/var/www/html/.htpasswd' (copied from the old server), and I tested that munin and awstats were functioning.

One-by-one, I copied each vhost docroot from the hetzner2 backups into hetzner3's vhost docroots. I set the /etc/hosts file on my laptop to override DNS and point each vhost domain to the hetzner3 server. To confirm I was loading the right server's vhost in my browser, I added '/is_hetzner3' with this command:

for docroot in $(sudo find /var/www/html/* -maxdepth 1 -regextype awk -regex ".*(htdocs|public_html)" -type d); do echo "true" | sudo tee "$docroot/is_hetzner3"; done
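The laptop side of this test is an `/etc/hosts` override. The hypothetical helper below prints one hosts line per vhost domain. The address `203.0.113.10` is a documentation placeholder, not Hetzner3's real IP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines that point every given
# domain at a single IP address (tab-separated, one domain per line).
hosts_override() {
  local ip="$1" domain
  shift
  for domain in "$@"; do
    printf '%s\t%s\n' "$ip" "$domain"
  done
}
```

For example, `hosts_override 203.0.113.10 wiki.opensourceecology.org www.opensourceecology.org | sudo tee -a /etc/hosts` adds the overrides. After that, `/is_hetzner3` on each domain should return `true` only if the request reached hetzner3. The lines need to be removed from `/etc/hosts` again once testing is done.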

And after restoring each vhost docroot, I created an unprivileged 'not-apache' user:

adduser not-apache --disabled-password --gecos '' --home /dev/null --shell /usr/sbin/nologin

And then I fixed the permissions with this (since CentOS and Debian have different users & groups). But you, dear future reader, would probably be smarter to copy this file from our backups.

cat > /usr/local/bin/fix_web_permissions.sh <<'EOF'
#!/bin/bash
#set -x
################################################################################
# File: fix_web_permissions.sh
# Version: 0.2
# Purpose: Idempotent script that will set the minimum permissions required for
# all of the files in /var/www/html. Run this script after you make
# changes to any files (eg update wordpress, mediawiki, phplist, etc)
# Authors: Michael Altfield <michael@michaelaltfield.net>
# Created: 2025-02-06
# Updated: 2025-02-13
################################################################################

################################################################################
# SETTINGS #
################################################################################

################################################################################
# FUNCTIONS #
################################################################################

################################################################################
# MAIN BODY #
################################################################################

# first pass, whole site
chown -R not-apache:www-data "/var/www/html"
find "/var/www/html" -type d -exec chmod 0050 {} \;
find "/var/www/html" -type f -exec chmod 0040 {} \;

#############
# WORDPRESS #
#############

# find patterns are quoted so the shell can't glob-expand them before find sees them
wordpress_sites="$(find /var/www/html -type d -wholename '*htdocs/wp-content')"
for wordpress_site in $wordpress_sites; do
  wp_docroot="$(dirname "${wordpress_site}")"
  vhost_dir="$(dirname "${wp_docroot}")"

  chown -R not-apache:www-data "${vhost_dir}"
  find "${vhost_dir}" -type d -exec chmod 0050 {} \;
  find "${vhost_dir}" -type f -exec chmod 0040 {} \;

  chown not-apache:apache-admins "${vhost_dir}/wp-config.php"
  chmod 0040 "${vhost_dir}/wp-config.php"

  [ -d "${wp_docroot}/wp-content/uploads" ] || mkdir "${wp_docroot}/wp-content/uploads"
  chown -R not-apache:www-data "${wp_docroot}/wp-content/uploads"
  find "${wp_docroot}/wp-content/uploads" -type f -exec chmod 0660 {} \;
  find "${wp_docroot}/wp-content/uploads" -type d -exec chmod 0770 {} \;

  [ -d "${wp_docroot}/wp-content/tmp" ] || mkdir "${wp_docroot}/wp-content/tmp"
  chown -R not-apache:www-data "${wp_docroot}/wp-content/tmp"
  find "${wp_docroot}/wp-content/tmp" -type f -exec chmod 0660 {} \;
  find "${wp_docroot}/wp-content/tmp" -type d -exec chmod 0770 {} \;
done

###########
# phpList #
###########

phplist_sites="$(find /var/www/html -maxdepth 1 -type d -iname '*phplist*')"
for vhost_dir in $phplist_sites; do
  chown -R not-apache:www-data "${vhost_dir}"
  find "${vhost_dir}" -type d -exec chmod 0050 {} \;
  find "${vhost_dir}" -type f -exec chmod 0040 {} \;

  [ -d "${vhost_dir}/public_html/uploadimages" ] || mkdir "${vhost_dir}/public_html/uploadimages"
  chown -R not-apache:www-data "${vhost_dir}/public_html/uploadimages"
  find "${vhost_dir}/public_html/uploadimages" -type f -exec chmod 0660 {} \;
  find "${vhost_dir}/public_html/uploadimages" -type d -exec chmod 0770 {} \;
done

#############
# MediaWiki #
#############

vhost_dir="/var/www/html/wiki.opensourceecology.org"
mw_docroot="${vhost_dir}/htdocs"

chown -R not-apache:www-data "${vhost_dir}"
find "${vhost_dir}" -type d -exec chmod 0050 {} \;
find "${vhost_dir}" -type f -exec chmod 0040 {} \;

chown not-apache:apache-admins "${vhost_dir}/LocalSettings.php"
chmod 0040 "${vhost_dir}/LocalSettings.php"

[ -d "${mw_docroot}/images" ] || mkdir "${mw_docroot}/images"
chown -R www-data:www-data "${mw_docroot}/images"
find "${mw_docroot}/images" -type f -exec chmod 0660 {} \;
find "${mw_docroot}/images" -type d -exec chmod 0770 {} \;

[ -d "${mw_docroot}/images/captcha" ] || mkdir "${mw_docroot}/images/captcha"
chown -R www-data:www-data "${mw_docroot}/images/captcha"
find "${mw_docroot}/images/captcha" -type f -exec chmod 0660 {} \;
find "${mw_docroot}/images/captcha" -type d -exec chmod 0770 {} \;

[ -d "${vhost_dir}/cache" ] || mkdir "${vhost_dir}/cache"
chown -R www-data:www-data "${vhost_dir}/cache"
find "${vhost_dir}/cache" -type f -exec chmod 0660 {} \;
find "${vhost_dir}/cache" -type d -exec chmod 0770 {} \;
EOF

chown root:root /usr/local/bin/fix_web_permissions.sh
chmod 0755 /usr/local/bin/fix_web_permissions.sh
time /usr/local/bin/fix_web_permissions.sh
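After running the script, a quick read-only audit can confirm nothing was missed. The hypothetical `audit_web_permissions` function below lists regular files whose mode is not exactly `0040`. It skips the directories the script deliberately opens up for writes. The exclusion patterns only approximate the script's paths; for example, `*/cache/*` also skips any other directory named `cache`. Treat the output as a list to review rather than a verdict.

```shell
#!/usr/bin/env bash
# Hypothetical audit helper; it only reads and changes nothing.
# Lists files under ROOT whose permission bits are not exactly 0040,
# skipping the writable paths that fix_web_permissions.sh sets to 0660.
audit_web_permissions() {
  local root="${1:-/var/www/html}"
  find "$root" -type f ! -perm 0040 \
    ! -path '*/wp-content/uploads/*' \
    ! -path '*/wp-content/tmp/*' \
    ! -path '*/public_html/uploadimages/*' \
    ! -path '*/htdocs/images/*' \
    ! -path '*/cache/*'
}
```

An empty result from `sudo bash -c "$(declare -f audit_web_permissions); audit_web_permissions"` would suggest the script covered everything outside the writable directories.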

I installed wp-cli per Wordpress#WP-CLI. wp-cli is intentionally *not* given internet access, but it is still useful for getting info and activating/deactivating plugins & themes.

I configured mdadm to send emails to our ops list in the event that one of the disks in our RAID1 array fails (note this is not configured in ansible because we don't want our email addresses on GitHub)

root@hetzner3 ~ # cd /etc/mdadm/
root@hetzner3 /etc/mdadm # cp mdadm.conf mdadm.conf.20240929.orig
root@hetzner3 /etc/mdadm #
root@hetzner3 /etc/mdadm # vim mdadm.conf
root@hetzner3 /etc/mdadm #
root@hetzner3 /etc/mdadm # diff mdadm.conf.20240929.orig mdadm.conf
18c18,19
< MAILADDR root
---
> MAILFROM REDACTED@hetzner3.opensourceecology.org
> MAILADDR REDACTED@opensourceecology.org
root@hetzner3 /etc/mdadm #
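The hypothetical helper below can confirm that the edit stuck, for example after a package upgrade touches the file. It checks that `mdadm.conf` has a `MAILFROM` line and a `MAILADDR` that is a full address rather than the default `root`. To prove mail actually flows, `mdadm --monitor --scan --test --oneshot` sends one test alert per array.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if MAILADDR holds an email address
# (not the default "root") and a MAILFROM line is present.
check_mdadm_mail() {
  local conf="${1:-/etc/mdadm/mdadm.conf}"
  grep -Eq '^MAILADDR[[:space:]]+[^[:space:]]+@[^[:space:]]+' "$conf" &&
    grep -Eq '^MAILFROM[[:space:]]+[^[:space:]]+' "$conf"
}
```

A non-zero exit from `check_mdadm_mail` means disk-failure alerts would still go to the local root mailbox instead of the ops list.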

I configured smartd to send emails to our ops list in the event that one of the disks starts to show signs of failure (note this is not configured in ansible because we don't want our email addresses on GitHub).

root@hetzner3 ~ # cd /etc/
root@hetzner3 /etc/ # mv /etc/smartd.conf /etc/smartd.conf.$(date "+%Y%m%d_%H%M%S").orig
root@hetzner3 /etc/ #
root@hetzner3 /etc/ # echo "DEVICESCAN -d removable -n standby -m REDACTED@opensourceecology.org -M exec /usr/share/smartmontools/smartd-runner" > /etc/smartd.conf
root@hetzner3 /etc/ #
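smartd only emails when it detects a problem, so it can be useful to send one test message on purpose. smartd.conf has a `-M test` directive that sends a single test email when smartd starts. The hypothetical helper below builds the `DEVICESCAN` line above, with `-M test` optionally added. We believe smartd accepts `-M test` alongside `-M exec`, but check smartd.conf(5) for the installed version. Drop `-M test` again once the test email arrives.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the smartd.conf DEVICESCAN line used above.
# Pass "test" as the second argument to add "-M test" (one test email
# when smartd starts). ADDR is the recipient for -m.
smartd_conf_line() {
  local addr="$1" mode="${2:-}"
  local extra=""
  if [ "$mode" = "test" ]; then
    extra=" -M test"
  fi
  printf 'DEVICESCAN -d removable -n standby -m %s%s -M exec /usr/share/smartmontools/smartd-runner\n' "$addr" "$extra"
}
```

For example, `smartd_conf_line REDACTED@opensourceecology.org test > /etc/smartd.conf` writes the line, and restarting smartd should then trigger the test email.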

I created accounts for Marcin, Catarina, and Tom Griffing:

USERNAME='marcin'

PUBKEY='ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDDMiIUel3xYyxuiXAj82PzoJDwRczrEpDgUoRI4W9ceL5FqVcY38Go9q3SF2Nx0FEj+IdCUXc08lyy6ZPUbPcKvscFxWeue4aMM62ikzNxmhGBdjqgT3q3wpJgyjTXmt9AJcglcAm9mcQffSUi3RD9KDlCyc/T923eZdaLAkW/BMhjuOZqY90tjGqs/r/kxN0gf4vI24NMFL/41ct7OMKVnNNsjIpQtceX9fCOCumAx53OdtJEcp46TvzevZk2987Zn0VsONznvVCJ0kmm8B0RJxwIfmiLM73f+reo0pv+sSc2rU7SrpzLfPWLFcM7pkJQc3HtLnktl5form3flp+EkI7fr7348r8A7W+QIifjXk66ohJReDni9H/S4JSX2L1lf8LfJKSHtAqrFRWSPp22MKre5hiH0IybED6XZfz59HT0cgMK2iNcPRj/J+hEbBM0f4zZu62PUad7rr1JI4Vv078/ROaD47fykicxYhauI4R71J1YucSj/vekXf17x3xlO+u8ucSeUhdpMuIAa3Yk16bXsrwo4nIdcApC6rwfNiQDK8Ecx6+M6pV6z+dII4OMHvEYWw92wWJZfIyk7emvAoataqp3DfI0DQagPNBo2ieEZYLvNYny+X9hf6faZ6trsGnR4GfN83PEt3ZfmoEoyTVB2POiBdM8a1GNTlEasQ== marcin@Precision-M6500'

# create user if it doesn't yet exist

adduser ${USERNAME} --disabled-password --gecos ''

# add ssh pubkey

mkdir /home/${USERNAME}/.ssh

echo "${PUBKEY}" > /home/${USERNAME}/.ssh/authorized_keys

chown -R ${USERNAME}:${USERNAME} /home/${USERNAME}/.ssh

chmod 0700 /home/${USERNAME}/.ssh

chmod 0600 /home/${USERNAME}/.ssh/authorized_keys

USERNAME='cmota'

PUBKEY='ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDjEu4tbVMJxAX6VuCIrhLYDh/PBlyFHfgGU5ovuPPZLOWYAYI2xYgl5SCweKgB8g2hNTOoyLKgxj0UF7MH22xYQV/EwEwVxX/isSwNvGGikOaKOfb9rBt6nlW2K6ehJlPpHA2nPiqDcuU/1wT3T1FTpYL+uTtQxr4gF8Ijt4aNLwpRdvzgza5vZ1I1R0yLuC2VI1m0NNqT45yGRyWZpM7thT7YGS5Jr0DQa0kLxEZvhGcgdAL6kYfJLW1IhglOZTcoff5TGY6Q8X/gjNrVv6ZUeNF2QiXz4Gm6I6I1YtUDdEEfndu0bHATkMX9aeNG6qAfcYcUcm8pnK+c/RehE0LAcNSDCg9VozsDGg65ywgYw+k0mTl2sW8V95Igfi8oxf/ulGuzxgyriQlhFA4JckDA6Vz2BCjcYabcRhc0ugG34SBRPOUCxVzdb40FSGftVcxb1FeDxsnHxQkl23W9dCcwMMU1m2ssY6F09TTiqhbIp816MkepfWNkB5QDPbmu6EWgT4jp3zWqjMUNcYz9NmRsb6VZ9G357LPOZgMM36XOQXIePcWo5bCQYSusPDSXXjqeSeEVnrfrJJEpBr2AxFCt1R3Dw/fs/rG+YFGNdFadsgiSHxHs2zJglV+Pj8buI6z/EOuHXylZN/2jfOAT17oRU5QXz0HlT0ToeehwFb1+Gw== catarina@Computer'

# create user if it doesn't yet exist

adduser ${USERNAME} --disabled-password --gecos ''

# add ssh pubkey

mkdir /home/${USERNAME}/.ssh

echo "${PUBKEY}" > /home/${USERNAME}/.ssh/authorized_keys

chown -R ${USERNAME}:${USERNAME} /home/${USERNAME}/.ssh

chmod 0700 /home/${USERNAME}/.ssh

chmod 0600 /home/${USERNAME}/.ssh/authorized_keys

USERNAME='tgriffing'

PUBKEY='ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDHS8EYmP85HqJwsP4kJ3D2dIBFBgVY8A8YUFubm+bjOFKHr9mV4nnJoY2TweQsKjsT8Kvg8uRPeThls5/7QK/3gDz/objdWp/2W5kvwhDxlZwEWyf+5a6F23OYLc5oeixwR/TyU13OokXSeZeTxPX3m/It1VBKEz0QUCjwTHEkPrjjhbeVlQ7vFeCAwGlrA8puDF1l8SUIO23hpiU9E+IM/+wTasEP8YblSk9445mLow4BexlvmfrRsXXdg/vrdObchzeo9rhZxMTWPE2nbyVUp86iaNp/PVbeTNKWx0hZF0zr7TjIbsmYmGXlPMZcKaStpcfMlVJ+hJ9NxwTHrqhC0lsfNz9pvPdLkZM3O5Ychevu4xlFb3XddMiO1QHodqf56vZhicMA+9cLfZpFTcwtVGseD+JpURPuG2DBtEDkozGk1szx2SoX5B6ccprZYvfj4HiTW6+qv7XN2uMbRMHw0VMyAPjwSKYC/YzTZ885VAFj8Oo5t5Q6F9VW1oRUF3gWrcLBcvL2XUDQCCUpF3bDlHxQQqJZ3EifW6rDZVHlyLkzq6/FKTUPHuHdX4K5DPdJxEcfdm5zyjiGEtGQ2uzHx3WAJMaykjFsJElsE7avhHagKzneS/b4shReEEseNErhW5d0AyAoPkEoVkCyauS2vOvNAZ29OXc2Yf6DEIdU9Q== tom@thomasgfingsmbp

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDGwcx6l+W/6CTU+bm9gOZM53uEPvSsTk31PqQE/svb9qNzrM+Ny8xBfofbhlbTXYFAldlGC3S3DgBO6yOHCQgnHtf7zqBD+sNsVqMGKSpDhkAn09CmJy9P90p4ovZqHfGpvSPXfyPyBF/ebLgJeS8roxcU9OyTO+iRMXv8rOgK7zLLbdMy+/tXr6muGyaIzHJljYpaebd4kjM4INaycGYY7gEVBmBzC6wHj+PDLcPSeYXTVG6R7RrfGQuvtM61hNY90+pw2di0GR57wqF/0tLvfJ5+QyWJoh4ns4gBhRf8/2QVfcy+DD9ofQ8ILRVVf77IxZRTY8j+zgUBD4YjvBmtx/UB2nJJRwyDjPEB55grC+LjQ8ehwgc2LpE2nVvEWCUZjdw5kFZjD4fHVWRhbcVmusSIAyw47xPpywRtry0+rdbL90i2JTitFMRzqTZLETAOgEfRp50WiPulxh2Gj1bVCHFvx1p/hdxbEWZx2k2s62SOYvZj+yBazK9gBFLwPZWBx5bzeu091Yxvingt+EZ4qGF807trP5e46oJCLmAU1DXD4enWmTfGQxvsallREYj6xbdWjMq+Az35nWmlg7omlvZPVMDZ7S+++dTO9ypxJeeVEfBav/gkghqcY5lGIU51eCiBEric476NQRG7aJp9rakgF2wKj8qWIoOzRysWYw== tom@imac0'

# create user if it doesn't yet exist

adduser ${USERNAME} --disabled-password --gecos ''

# add ssh pubkey

mkdir /home/${USERNAME}/.ssh

echo "${PUBKEY}" > /home/${USERNAME}/.ssh/authorized_keys

chown -R ${USERNAME}:${USERNAME} /home/${USERNAME}/.ssh

chmod 0700 /home/${USERNAME}/.ssh

chmod 0600 /home/${USERNAME}/.ssh/authorized_keys
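The three blocks above repeat the same steps, so they could be folded into one function, as in the hypothetical sketch below. The sketch quotes the key variable. That matters for `tgriffing`, whose `PUBKEY` holds two keys on separate lines. An unquoted `echo $PUBKEY` would word-split the value and write both keys onto one line, so sshd would only see the first key. The `adduser` and `chown` steps are left out so the sketch can be tried on a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install one or more public keys (one per line)
# into HOME/.ssh/authorized_keys with the permissions sshd expects.
# Not included: adduser and chown, which need a real account.
install_authorized_keys() {
  local home="$1" keys="$2"
  mkdir -p "${home}/.ssh"
  # "$keys" is quoted, so a multi-key value keeps its line breaks
  printf '%s\n' "$keys" > "${home}/.ssh/authorized_keys"
  chmod 0700 "${home}/.ssh"
  chmod 0600 "${home}/.ssh/authorized_keys"
}
```

On the server, this would be followed by the ownership fix, e.g. `install_authorized_keys /home/tgriffing "${PUBKEY}" && chown -R tgriffing:tgriffing /home/tgriffing/.ssh`.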

I updated the ansible playbook to permit these new human user accounts to access the Internet, and I re-ran the ansible playbook to update the firewall.

* https://github.com/OpenSourceEcology/ansible/commit/f294f3384f5d4bc47ae55a1631c264950e0e1355

I created a group for users who have access to the shared OSE keepass file on the server, and I added the new users to the groups as-needed.

groupadd keepass
gpasswd -a maltfield keepass
gpasswd -a marcin keepass
gpasswd -a cmota keepass
gpasswd -a tgriffing keepass
gpasswd -a marcin sshaccess
gpasswd -a cmota sshaccess
gpasswd -a tgriffing sshaccess
gpasswd -a marcin www-data
gpasswd -a cmota www-data
gpasswd -a tgriffing www-data
gpasswd -a tgriffing apache-admins

- TODO: actually migrate keepass

To see the actual commands used to migrate, update, and fix our websites from hetzner2 -> hetzner3, see:

- TODO: forums

- CHG-2025-XX-XX migrate store to hetzner3

- CHG-2025-XX-XX migrate microfactory to hetzner3

- CHG-2025-XX-XX deprecate fef

- CHG-2025-XX-XX deprecate oswh

- CHG-2025-XX-XX_migrate_obi_to_hetzner3

- CHG-2025-XX-XX_migrate_osemain_to_hetzner3

- CHG-2025-XX-XX_migrate_phplist_to_hetzner3

- CHG-2025-XX-XX migrate wiki to hetzner3

Note that we did *not* migrate seedhome.opensourceecology.org. That site was never set up, and we decided to deprecate it.

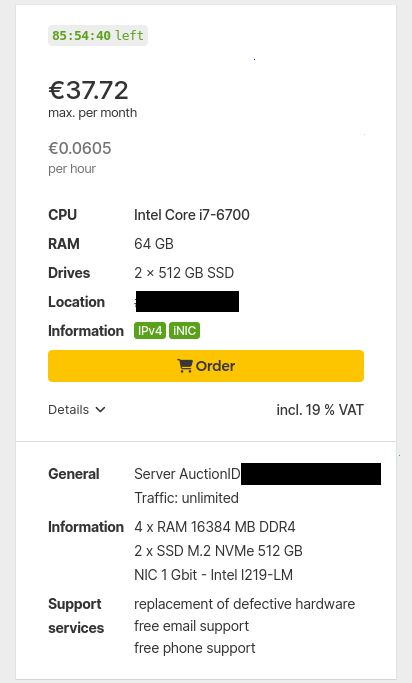

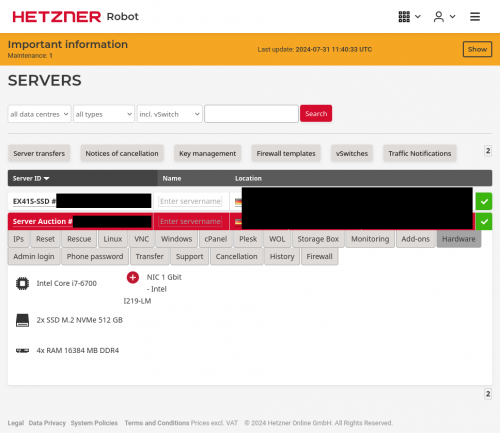

Purchase

We purchased Hetzner3 from a Dedicated Server Auction on 2024-07-30 for 37.72 EUR/mo.

Before becoming a discount auction server, Hetzner3 was sold as dedicated server model EX42-NVMe. For comparison, Hetzner2 was an EX41S-SSD.

Hardware

Hetzner3 came with the following hardware:

* Intel Core i7-6700
* 2x SSD M.2 NVMe 512 GB
* 4x RAM 16384 MB DDR4
* NIC 1 Gbit Intel I219-LM
* Location: Germany
* Rescue system (English)
* 1 x Primary IPv4

CPU

Hetzner3 has an Intel Core i7-6700. It's a 4-core (8-thread) 3.4 GHz processor from 2015 with 8 MB of cache. This cannot be upgraded.

root@mail ~ # cat /proc/cpuinfo
...
processor : 7
vendor_id : GenuineIntel
cpu family : 6
model : 94
model name : Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz
stepping : 3
microcode : 0xf0
cpu MHz : 905.921
cache size : 8192 KB
physical id : 0
siblings : 8
core id : 3
cpu cores : 4
apicid : 7
initial apicid : 7
fpu : yes
fpu_exception : yes
cpuid level : 22
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb invpcid_single pti ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid mpx rdseed adx smap clflushopt intel_pt xsaveopt xsavec xgetbv1 xsaves dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp md_clear flush_l1d arch_capabilities
vmx flags : vnmi preemption_timer invvpid ept_x_only ept_ad ept_1gb flexpriority tsc_offset vtpr mtf vapic ept vpid unrestricted_guest ple shadow_vmcs pml
bugs : cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf mds swapgs taa itlb_multihit srbds mmio_stale_data retbleed gds
bogomips : 6799.81
clflush size : 64
cache_alignment : 64
address sizes : 39 bits physical, 48 bits virtual
power management:
root@mail ~ #
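The "4-core (8-thread)" figure can be read from this output. `cpu cores` is the physical core count per socket, and the number of `processor` entries (0 through 7 here) is the thread count. The hypothetical awk helper below summarizes both. It assumes a single-socket machine like this one; on a multi-socket box, `cpu cores` would undercount.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize /proc/cpuinfo-style text read on stdin.
# Assumes a single socket: "cpu cores" is per socket, and every
# "processor" entry is one hardware thread.
cpu_summary() {
  awk -F': *' '
    /^model name/ && !model { model = $2 }
    /^processor/            { threads++ }
    /^cpu cores/            { cores = $2 }
    END { printf "%s: %d cores, %d threads\n", model, cores, threads }
  '
}
```

On Hetzner3, `cpu_summary < /proc/cpuinfo` should report 4 cores and 8 threads, given the `processor : 7` and `cpu cores : 4` lines above.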

For comparison, this is the same processor that we've been using in Hetzner2, and it's way over-provisioned for our needs.

Disk

2x 512 GB NVMe disks should suit us fine.

We also have one empty NVMe slot and two empty SATA slots. As of today, we can upgrade each SATA slot with a max 3.84 TB SSD or max 22 TB HDD.

For comparison, we had 2x 250 GB SSD disks in Hetzner2, so this should give us approximately double the capacity and somewhat better disk I/O performance.
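The "approximately double" follows from how a two-disk RAID1 mirror works. It stores everything on both disks, so its usable size is that of the smaller disk:

$$
C_{\text{RAID1}} = \min(d_1, d_2), \qquad \frac{C_{\text{Hetzner3}}}{C_{\text{Hetzner2}}} = \frac{512\ \text{GB}}{250\ \text{GB}} \approx 2.05
$$

These are raw figures. On Hetzner3, the 32G swap and 1G '/boot' partitions, plus filesystem overhead, come out of the 512 GB before '/' sees it.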

Memory

We have 64 GB of DDR4 RAM. This cannot be upgraded; this is the maximum memory that this system can take.

For comparison, this is the same memory as we've been using in Hetzner2. We could get-by with less, but varnish is happy to use it.

Initial Specifications Research

Because hetzner2 ran on CentOS7 (which was EOL'd 2024-06-30), Marcin asked Michael in July 2024 to begin provisioning a "hetzner3" with Debian to replace "hetzner2".

Note: The charts in this section come from Hetzner2, not Hetzner3

Munin

I (Michael Altfield) collected some charts from Hetzner2's munin to confirm my understanding of the Hetzner2 server's resource needs before purchasing a new Hetzner3 dedicated server from Hetzner.

CPU

In 2018[1], I said we'd want min 2-4 cores.

After reviewing the cpu & load charts for the past year, load rarely ever touches 3. Most of the time it hovers between 0.2 - 1. So I agree that 4 cores is fine for us now.

Most of these auctions have an Intel Core i7-4770, which is a 4-core, 8-thread processor. That should be fine.

Disk

Honestly, I expect that the lowest offerings of a dedicated server in 2024 are probably going to suffice for us, but what I'm mostly concerned about is the disk. Even last week when I did the yum updates, I nearly filled the disk just by extracting a copy of our backups. Currently we have two 250G disks in a software RAID-1 (mirror) array. That gives us a usable 197G.

It's important to me that we double this at-least, but I'll see if there's any deals on 1TB disks or larger.

Also, what we currently have is a 6 Gb/s SSD, so I don't want to downgrade that by going to a spinning-disk HDD. NVMe might be a welcome upgrade. I/O wait is probably a bottleneck, but not currently one that's causing us agony.

To be clear: the usage line of '/' in this chart is the middle-green line, which is ~50% full

Memory

In 2018[1], I said we'd want 8-16G RAM minimum. While that's technically true, we currently have 64G RAM. Most of the base, cheap-as-they-come dedicated servers on the Hetzner auction page have 64G RAM.

We use 40G of RAM just for varnish, which [a] greatly reduces load on the server and [b] gives our read-only visitors a much, much faster page load time. While we don't strictly *need* that much RAM, I'm going to make sure hetzner3 has at least as much RAM as hetzner2.

Nginx

Varnish

Full

See Also

- OSE Server

- OSE Development Server

- OSE Staging Server

- Website

- Web server configuration

- Wordpress

- Vanilla Forums

- Mediawiki

- Munin

- Awstats

- Ossec

- Google Workspace

External Links

- Hetzner Login to manage Hetzner3 - https://robot.hetzner.com/server